Qwen3: A New Era of AI

A New Era of Artificial Intelligence

Qwen3 is the latest generation of the Qwen large language model series, marking a significant leap in natural language processing and multimodal AI capabilities. Building on the successes of previous versions, Qwen3 is equipped with larger training datasets, advanced architecture, and optimized fine-tuning techniques. This enables superior performance in complex reasoning, deep language understanding, and high-quality text generation.

What sets Qwen3 apart is its seamless transition between “thinking mode” and “non-thinking mode.” This dual-mode approach allows the model to excel across a wide range of tasks, from advanced logical reasoning, mathematical calculations, and coding tasks to casual conversations and creative writing.

Qwen3 also stands out with its robust agent capabilities. In both modes, it interacts with external tools, achieving top performance in complex agent-based workflows and multi-step tasks among open-source models.

Key Features of Qwen3:

- Supports over 100 languages and dialects.

- Optimizes performance across diverse tasks by switching between “thinking mode” (complex reasoning, math, coding) and “non-thinking mode” (efficient, general-purpose conversation) within a single model.

- Advanced agent capabilities enable interaction with external tools in both modes, producing top results in agent-focused tasks among open-source models.

Hybrid Thinking Modes

Qwen3 models support two distinct modes for problem-solving:

In Thinking Mode, the model performs step-by-step logical reasoning before providing an answer. It is ideal for complex problems and detailed analysis but takes longer due to the reasoning process.

In Non-Thinking Mode, the model delivers quick and direct answers. It is suitable for straightforward, surface-level questions.

Users can adjust how much “thinking” is required for a task, enabling both cost efficiency and high-quality responses.
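To make the two modes concrete, here is a minimal sketch of how an application might request a mode and separate the reasoning from the final answer. It assumes, based on the public Qwen3 model card, that the reasoning is wrapped in `<think>...</think>` tags and that appending `/think` or `/no_think` to a prompt acts as a soft switch. The helper names `with_mode` and `split_reasoning` are hypothetical and not part of any library.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think> ahead of the answer.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode(prompt: str, thinking: bool) -> str:
    """Append the soft switch that requests or suppresses thinking (assumed syntax)."""
    return f"{prompt} {'/think' if thinking else '/no_think'}"


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model completion."""
    match = THINK_BLOCK.search(raw)
    if match is None:
        # Non-thinking mode: the whole completion is the answer.
        return "", raw.strip()
    reasoning = match.group(1).strip()
    answer = raw[match.end():].strip()
    return reasoning, answer


# Hand-written completion used only for illustration, not real model output.
raw_output = "<think>12 + 15 = 27, then 27 + 9 = 36.</think>\n\nThere are 36 chickens."
reasoning, answer = split_reasoning(raw_output)
print(with_mode("How many chickens are there in total?", thinking=True))
print(reasoning)
print(answer)
```

Keeping the reasoning separate lets an application log or hide it while showing only the answer to the user.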

Models

- Qwen3-235B-A22B: Competes with leading models such as DeepSeek-R1, o1, o3-mini, Grok-3, and Gemini-2.5-Pro in benchmarks for coding, mathematics, and general abilities.

- Qwen3-30B-A3B (MoE Model): Surpasses QwQ-32B despite having only one-tenth of the active parameters.

- Qwen3-4B: Matches the performance of the much larger Qwen2.5-72B-Instruct.

What is an MoE (Mixture of Experts) Model?

Mixture of Experts (MoE) is an architectural approach designed to make massive neural networks more efficient. The core idea is to activate only specific parts of the network (experts) specialized in a given task, rather than using the entire network for every task.

In a traditional model, every layer processes data with all its parameters. In contrast, MoE models activate only certain expert sub-networks for a task, reducing computational load and enabling specialization for different tasks.

Input data is analyzed by a router mechanism, which selects the most suitable expert(s) for the given input. These selected experts process the input, while the others remain idle. The outputs are then combined to produce the final result.

Typically, only a few experts (for example 2 or 4) are activated for each input. This allows a model with billions of parameters to operate efficiently by using only the necessary parts.

For example, consider asking an AI model:

“What is the shortest way to reverse a list in Python?”

Behind this seemingly simple question, the Mixture of Experts (MoE) architecture comes into play. The router component within the model analyzes the question to determine its context. Recognizing it as a coding problem, the router activates experts such as:

Expert #12 – Python specialist

Expert #35 – Specialist in algorithms and data structures

Only these two experts are activated, while the remaining 98 experts in a hypothetical 100-expert model remain idle. This avoids unnecessary computation, saving both energy and time.
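The routing idea can be sketched in a few lines of plain Python. This is a toy illustration of top-k gating, not Qwen3's actual implementation. The router scores are hard-coded here, whereas a real model computes them with a learned layer for every token, and the "experts" are stand-in functions that simply scale their input.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    weights = route(router_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy setup: 100 stand-in experts, each scaling its input by a fixed factor.
experts = [lambda x, f=f: f * x for f in range(100)]
scores = [0.0] * 100
scores[12], scores[35] = 5.0, 4.0  # pretend the router favors experts 12 and 35

chosen = route(scores, k=2)
print(sorted(chosen))  # [12, 35]
print(moe_layer(1.0, experts, scores))
```

Only two of the 100 expert functions are ever called in `moe_layer`, which is where the compute saving comes from.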

Pre-training

Qwen3 has been trained on a significantly expanded dataset compared to Qwen2.5. While Qwen2.5 was trained on 18 trillion tokens, Qwen3 utilized approximately 36 trillion tokens. This dataset encompasses 119 languages and dialects.

Pre-training consists of three stages:

Phase 1 (S1): Builds foundational language skills and general knowledge using over 30 trillion tokens with a sequence length of 4K tokens.

Phase 2 (S2): Adds 5 trillion more tokens, focusing on STEM topics, coding problems, and reasoning tasks by increasing the proportion of such data.

Final Phase: Expands the model’s context window with high-quality data using a sequence length of 32K tokens.

Post-training

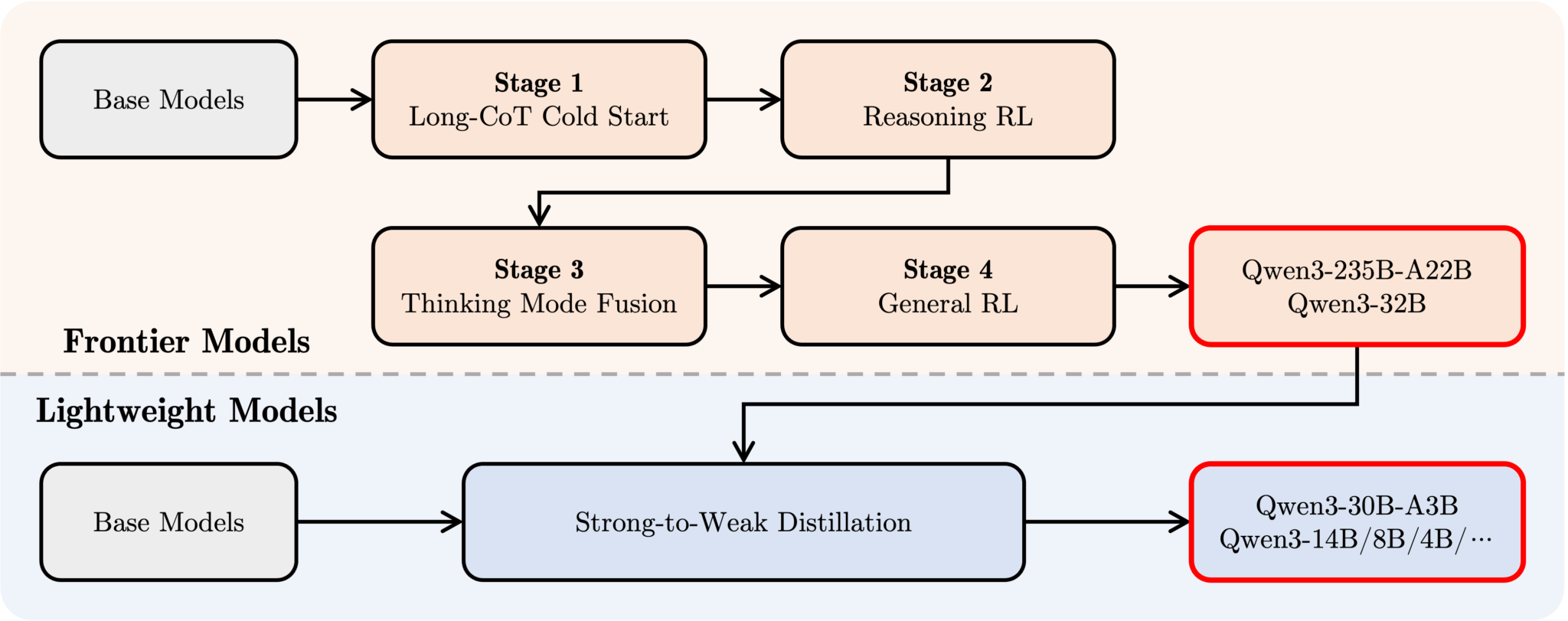

Stage 1: Long-CoT Cold Start

The model is trained with data containing long chains of thought (CoT) in subjects like mathematics, coding, logical reasoning, and science (STEM). This establishes the foundation for the model’s step-by-step reasoning capabilities.

This stage is similar to building a “thinking engine” within the model.

Example Question:

“There are 3 coops on a farm with 12, 15, and 9 chickens respectively. How many chickens are there in total?”

Chain of Thought:

1. The first coop has 12 chickens.

2. The second coop has 15 chickens.

3. The third coop has 9 chickens.

4. 12 + 15 = 27

5. 27 + 9 = 36

6. There are a total of 36 chickens.

At the end of this stage, the model now “knows how to think.” It not only answers questions but also explains why it provides those answers.

Stage 2: Reasoning RL

It is not enough for the model to simply form a chain of thought — it must do so efficiently, accurately, and effectively. In this stage, the model learns to adopt good reasoning paths and discard poor logical chains during step-by-step reasoning.

A “reward” mechanism is introduced in Reasoning RL: Correct reasoning earns a reward, while incorrect reasoning results in a penalty (or lower score). The model begins to learn reasoning paths that yield higher rewards.

Example Question:

“What is (8 + 5) × 2?”

Poor Answer:

8 + (5 × 2) = 18 ➔ Incorrect order of operations (low reward)

Good Answer:

1. 8 + 5 = 13

2. 13 × 2 = 26 ➔ Correct order of operations (high reward)

The model learns to prefer reasoning paths that earn higher rewards, thereby improving its internal logic.
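The reward idea can be illustrated with a very small rule-based scorer. This is a sketch under simplifying assumptions: it only checks whether the last number in a reasoning trace matches the known answer, and the function names `final_number` and `reward` are hypothetical. The actual reward design used for Qwen3 is not described in this article.

```python
import re


def final_number(reasoning: str):
    """Return the last number that appears in a reasoning trace, or None."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", reasoning)
    return float(numbers[-1]) if numbers else None


def reward(reasoning: str, expected: float) -> float:
    """1.0 when the trace ends on the expected value, 0.0 otherwise."""
    answer = final_number(reasoning)
    if answer is None:
        return 0.0
    return 1.0 if abs(answer - expected) < 1e-9 else 0.0


poor = "8 + (5 × 2) = 18"
good = "8 + 5 = 13\n13 × 2 = 26"
expected = (8 + 5) * 2

print(reward(poor, expected))  # 0.0
print(reward(good, expected))  # 1.0
```

During reinforcement learning, scores like these are what push the model toward the reasoning paths that end on correct results.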

Stage 3: Thinking Mode Fusion

This stage is the most critical step that makes the Qwen3 model truly hybrid, combining both deep thinking and quick response modes within the same structure.

The “deep thinking model” from Stage 2 serves as the foundation. This model is fine-tuned with standard instruction-tuning data (i.e., data containing short, quick responses).

Additionally, regenerated data (high-quality examples produced by Stage 2 itself) is used in this process. As a result, the model recognizes two distinct response styles and selects the appropriate one based on the situation.

Situations Requiring Deep Reasoning:

“A worker completes a task in 6 days. How many days would it take for 3 workers to complete the same task together?”

Solution Steps:

1. 1 worker completes the task in 6 days → Total work = 6 worker-days

2. If 3 workers work together → Each day, 3 workers contribute

3. 6 worker-days / 3 workers = 2 days

Situations Requiring Quick Responses:

“In which year was Alibaba Group founded?” → 1999

Stage 4: General RL

The goal is to make the model not only capable of reasoning and quick responses but also behaviorally balanced, safe, and effective in general tasks. This stage ensures that Qwen3 is not just “intelligent” but also “useful.”

It includes example tasks such as instruction following, format compliance, agent behaviors, and suppressing harmful/unwanted behaviors.

Example:

“Create a 3-item to-do list for me.”

– If it provides a long, unnecessary explanation → Low reward

– If it gives a clear and instruction-compliant response → High reward
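A format-compliance reward for the to-do list example might look like the sketch below. The scoring values (1.0, 0.5, 0.0) and the function name `todo_list_reward` are made up for illustration. The article does not specify how Qwen3's General RL rewards are computed.

```python
import re

# A list item starts with "-", "*", "1." or "1)" followed by some text.
LIST_ITEM = re.compile(r"^(?:[-*]|\d+[.)])\s+\S")


def todo_list_reward(response: str, expected_items: int = 3) -> float:
    """Score how well a response follows 'create an N-item to-do list'."""
    lines = [line.strip() for line in response.splitlines() if line.strip()]
    items = [line for line in lines if LIST_ITEM.match(line)]
    extra = len(lines) - len(items)  # non-list lines such as long explanations
    if len(items) != expected_items:
        return 0.0  # wrong number of items: instruction not followed
    return 1.0 if extra == 0 else 0.5  # padding around the list lowers the score


concise = "1. Buy milk\n2. Call Sam\n3. Pay rent"
verbose = (
    "Sure! Planning your day is very important.\n"
    "1. Buy milk\n2. Call Sam\n3. Pay rent\n"
    "Let me know if you would like more ideas."
)

print(todo_list_reward(concise))  # 1.0
print(todo_list_reward(verbose))  # 0.5
```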

At the end of this 4-stage training process, the resulting models (e.g., Qwen3-235B-A22B, Qwen3-32B) become hybrid AI systems that are both technically powerful and behaviorally balanced.

Strong-to-Weak Distillation

Large models (e.g., Qwen3-235B) are extremely powerful but require high-end hardware and significant resources to operate, making them impractical for every user. Therefore, the goal is to transfer the knowledge and capabilities of these models to smaller models. This knowledge transfer process is called distillation, and since knowledge is transferred from a strong model to a weak model, this specific method is referred to as strong-to-weak distillation.

Process:

1. Teacher-Student Relationship: The large model (Qwen3-235B-A22B) transfers knowledge to the smaller model (Qwen3-14B).

2. Imitation Process: The smaller model is trained by imitating the responses of the larger model.

Outcome: While the 14B model is not as powerful as the 235B model, it becomes “trained” under its guidance.
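One common way to formalize "imitation" is to minimize the KL divergence between the teacher's and the student's next-token probability distributions. The sketch below shows that loss on toy logits. It is a generic illustration of distillation and is not claimed to be the exact objective used for Qwen3. The logit values are invented.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, optionally softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher_probs, student_probs):
    """KL(teacher || student): how far the student is from the teacher."""
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0.0
    )


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """Loss the student would minimize to match the teacher's distribution."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return kl_divergence(teacher, student)


teacher_logits = [4.0, 1.0, 0.5]     # toy scores over a 3-token vocabulary
untrained_student = [1.0, 1.0, 1.0]  # no preference yet
closer_student = [3.5, 1.0, 0.7]     # after some imitation

print(distillation_loss(teacher_logits, untrained_student))
print(distillation_loss(teacher_logits, closer_student))  # smaller than the line above
```

As the student's distribution approaches the teacher's, the loss approaches zero.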

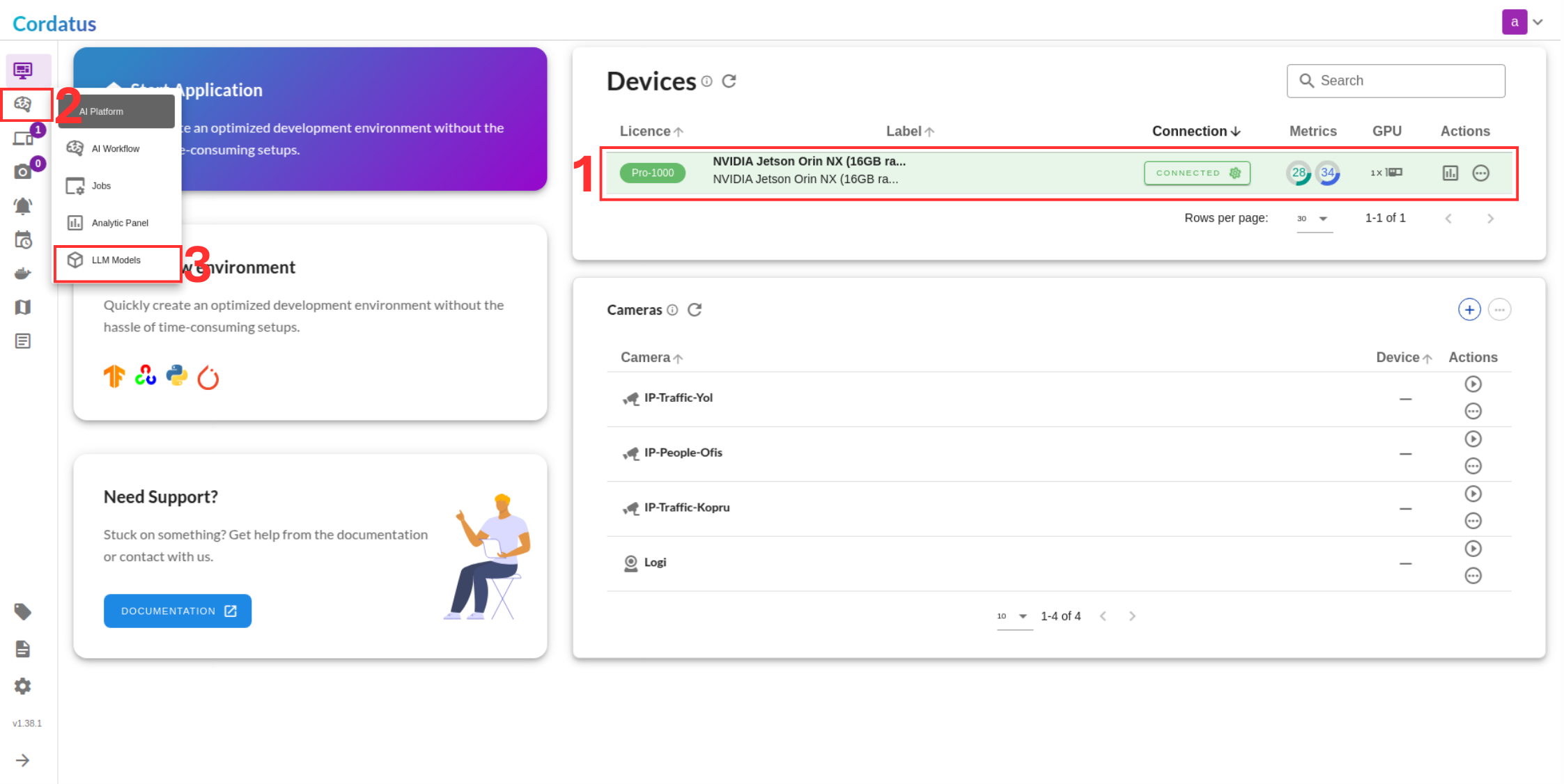

How To Run Qwen3 On Cordatus?

Launching the Ollama service via Cordatus is typically done through the Applications menu:

Method 1: Model Selection Menu

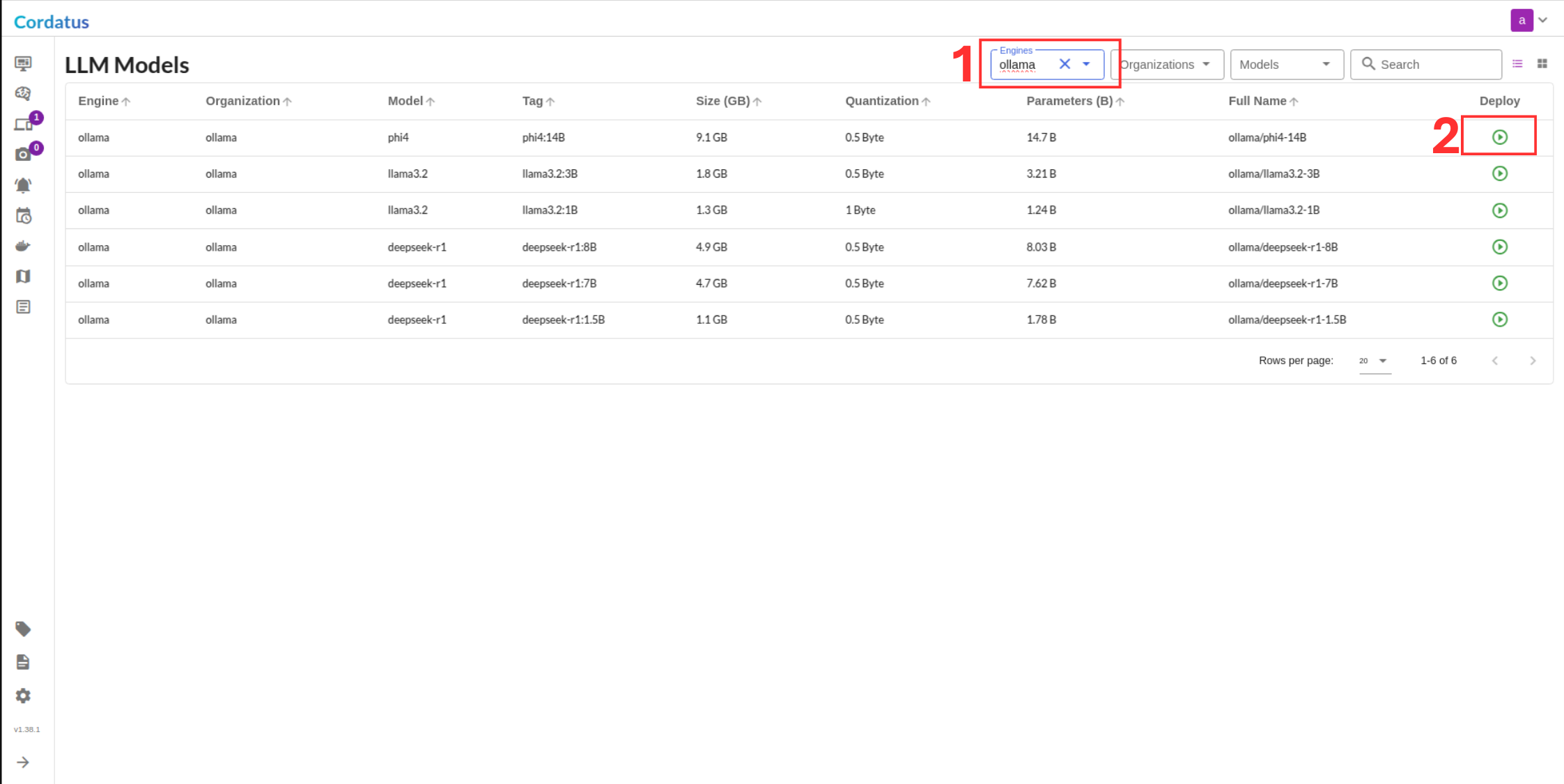

1. Connect to your device and select LLM Models from the sidebar.

2. Select Ollama from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

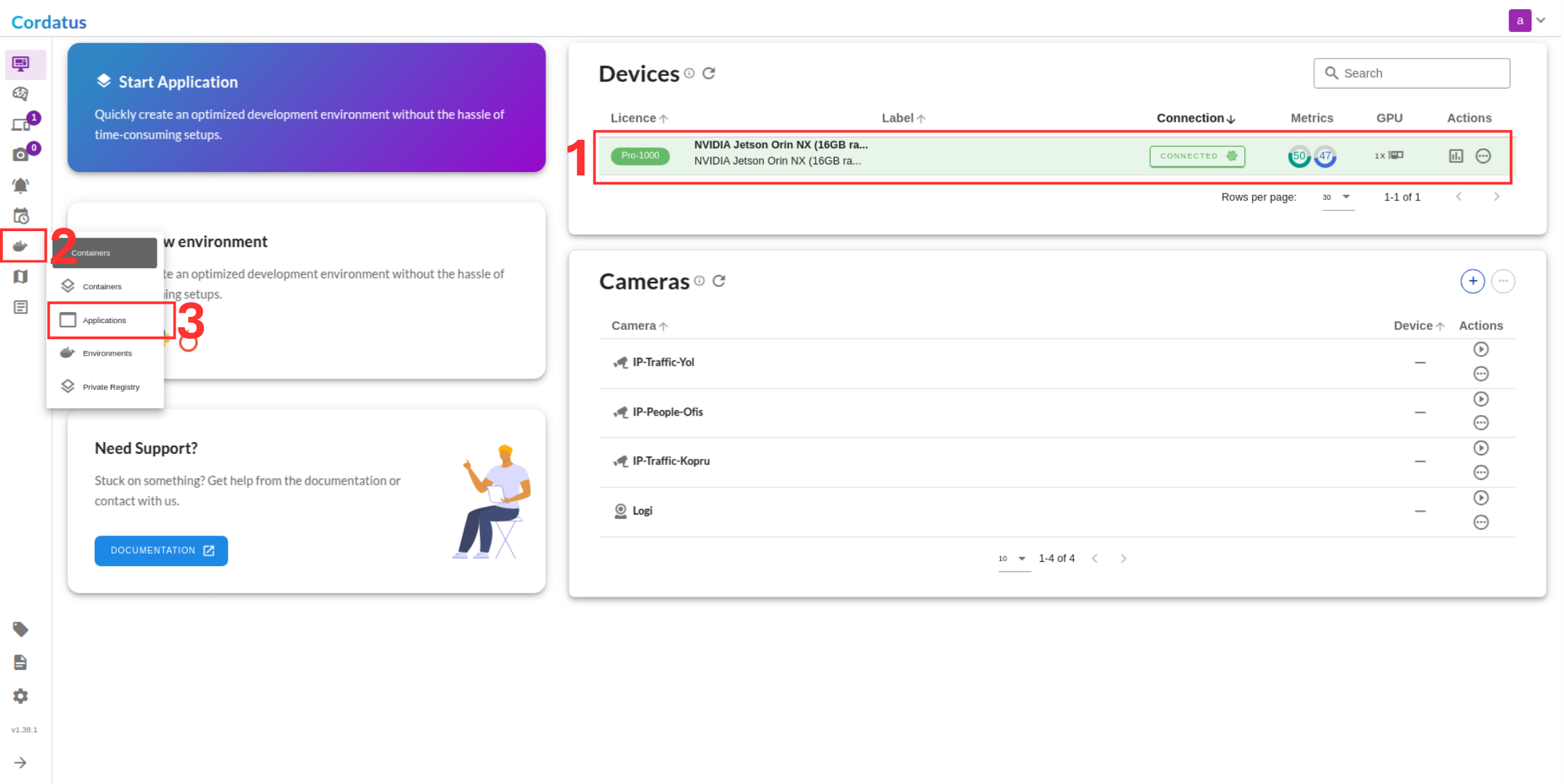

Method 2: Containers-Applications Menu

1. Connect to your device and select Containers-Applications from the sidebar.

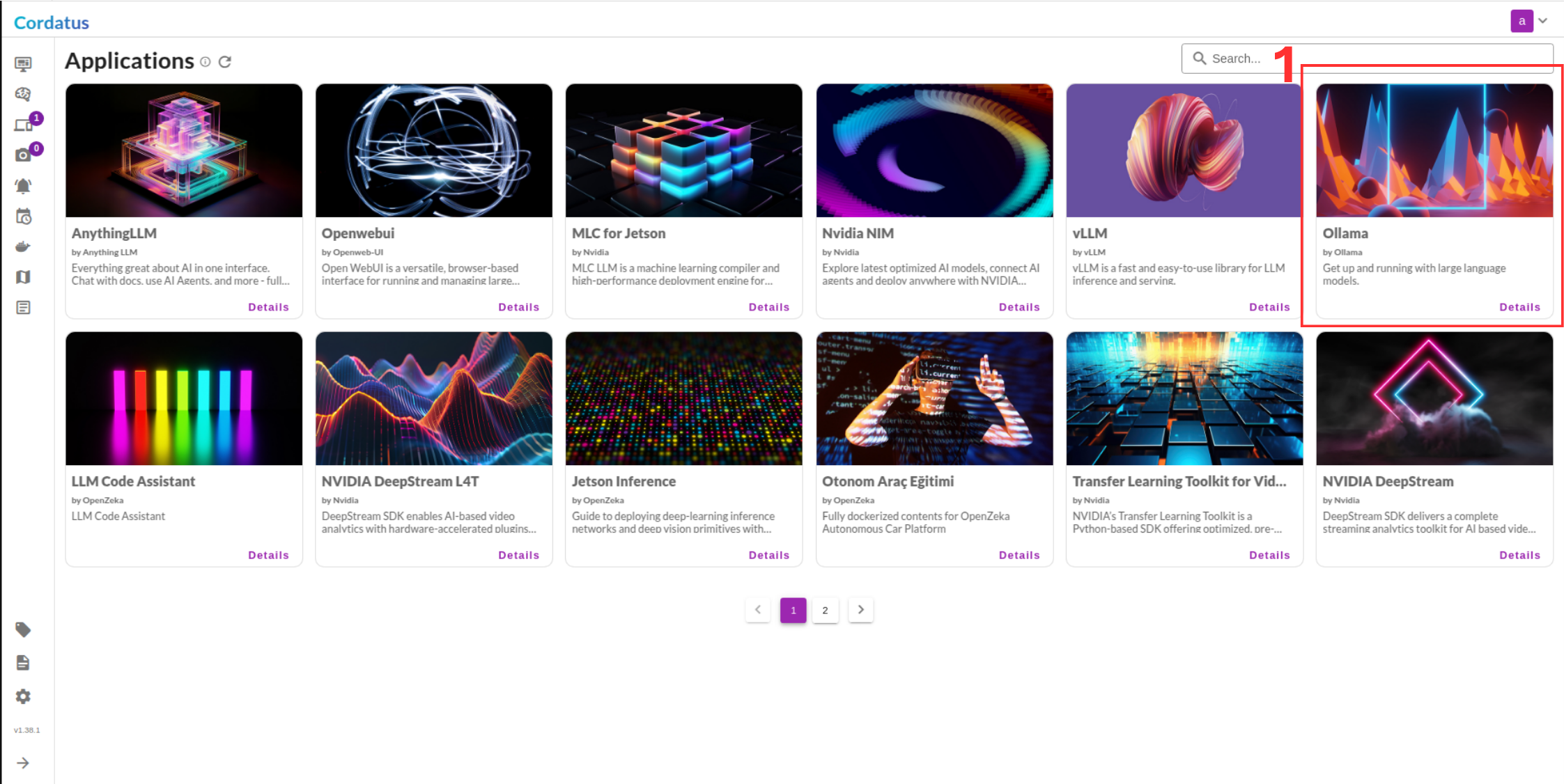

2. Select Ollama from the container list.

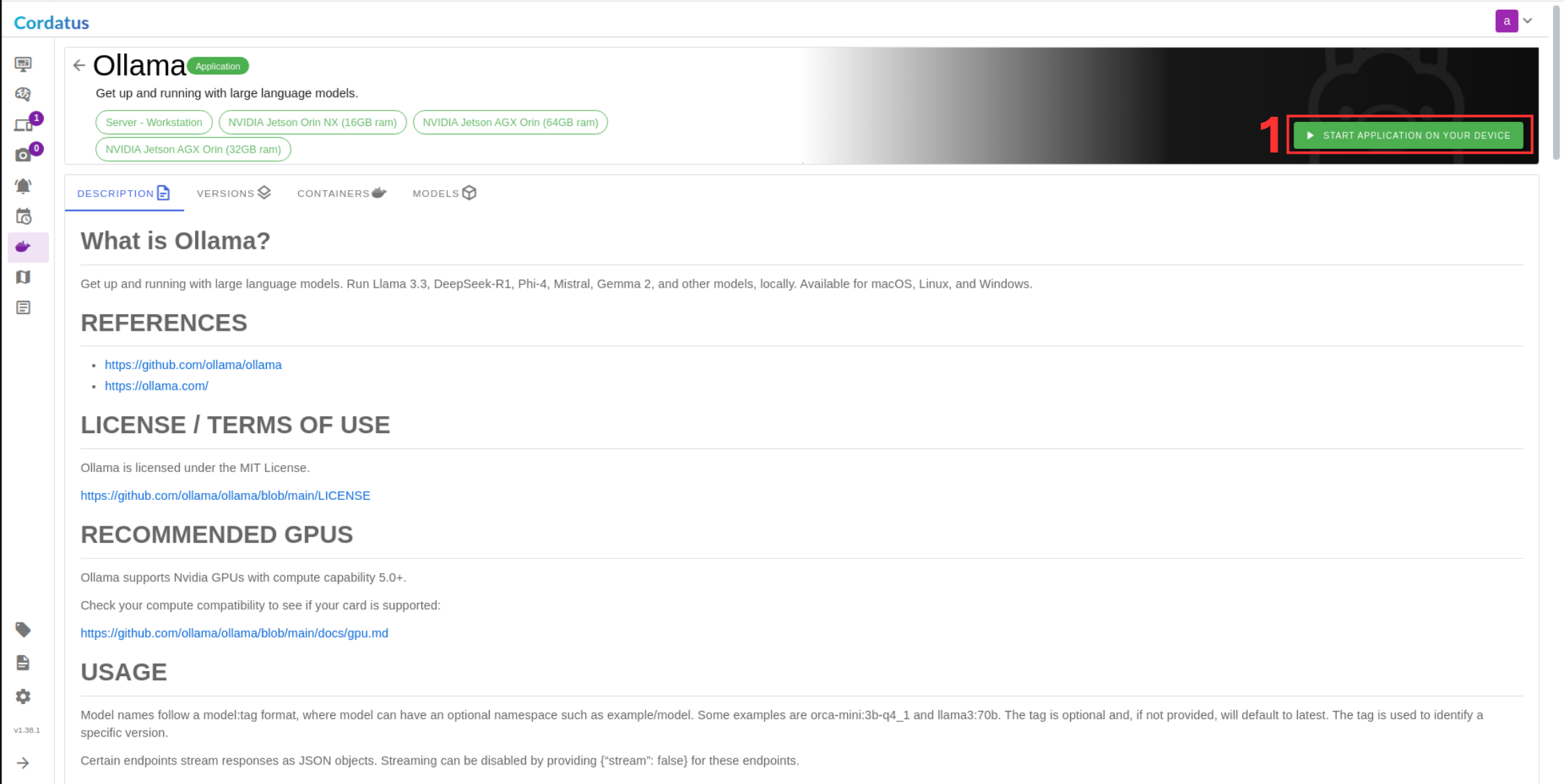

3. Click Run to start the model deployment.

Configuring and Running the Model

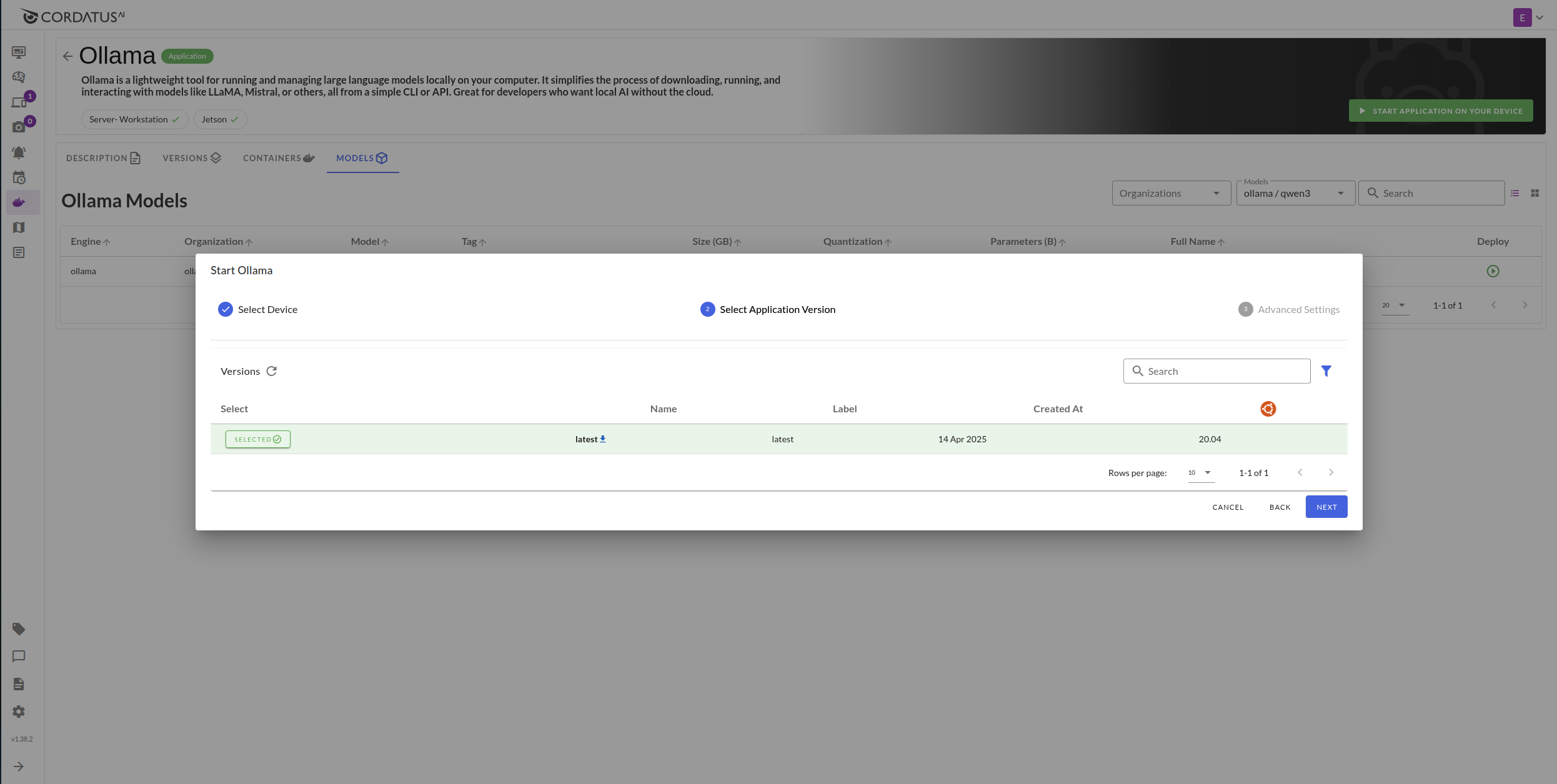

Once you have selected the model, follow these steps to complete the setup:

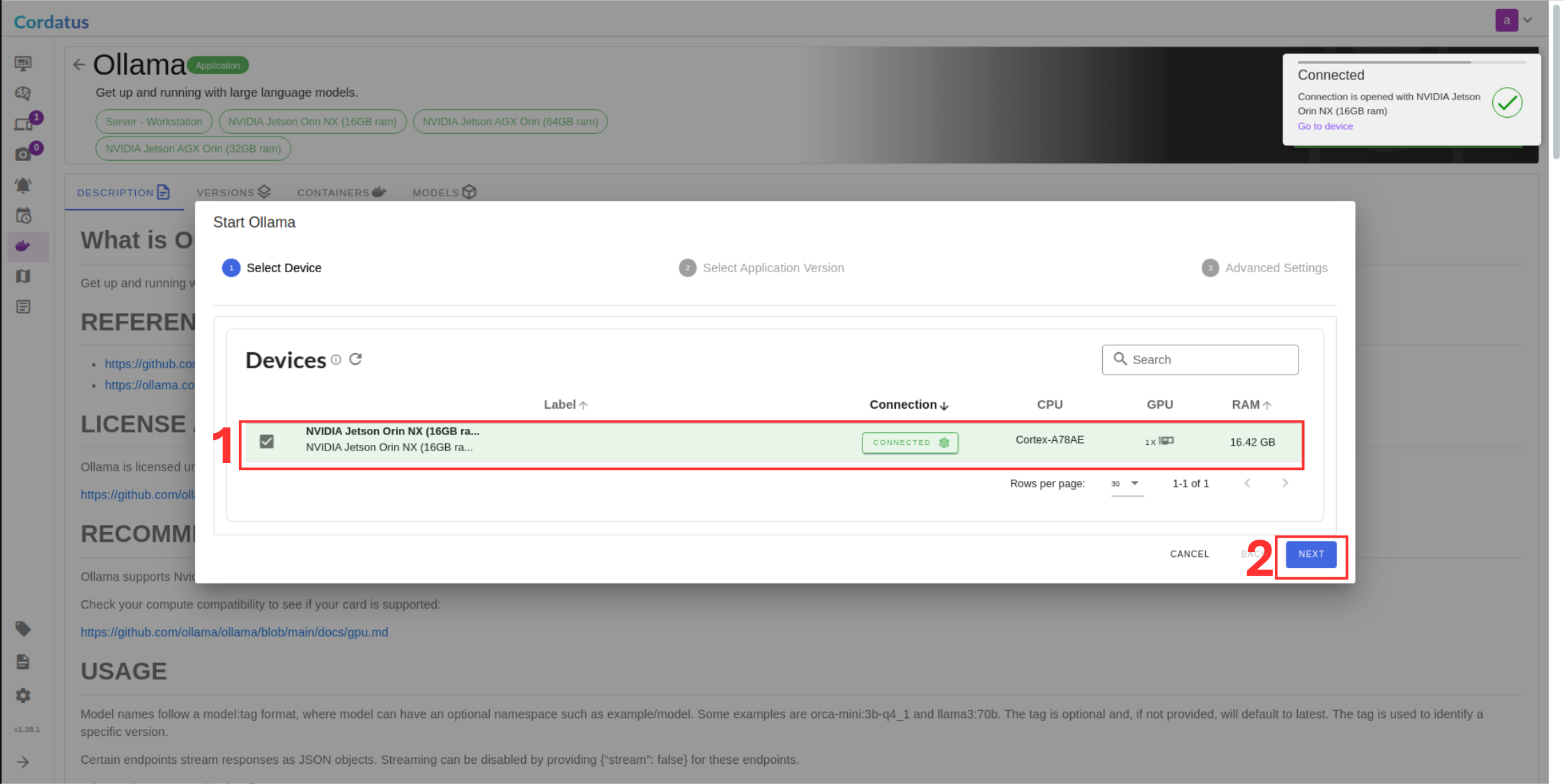

4. Confirm the device where the Ollama container will be deployed.

5. Choose the appropriate Ollama container image. Using the latest version is generally recommended.

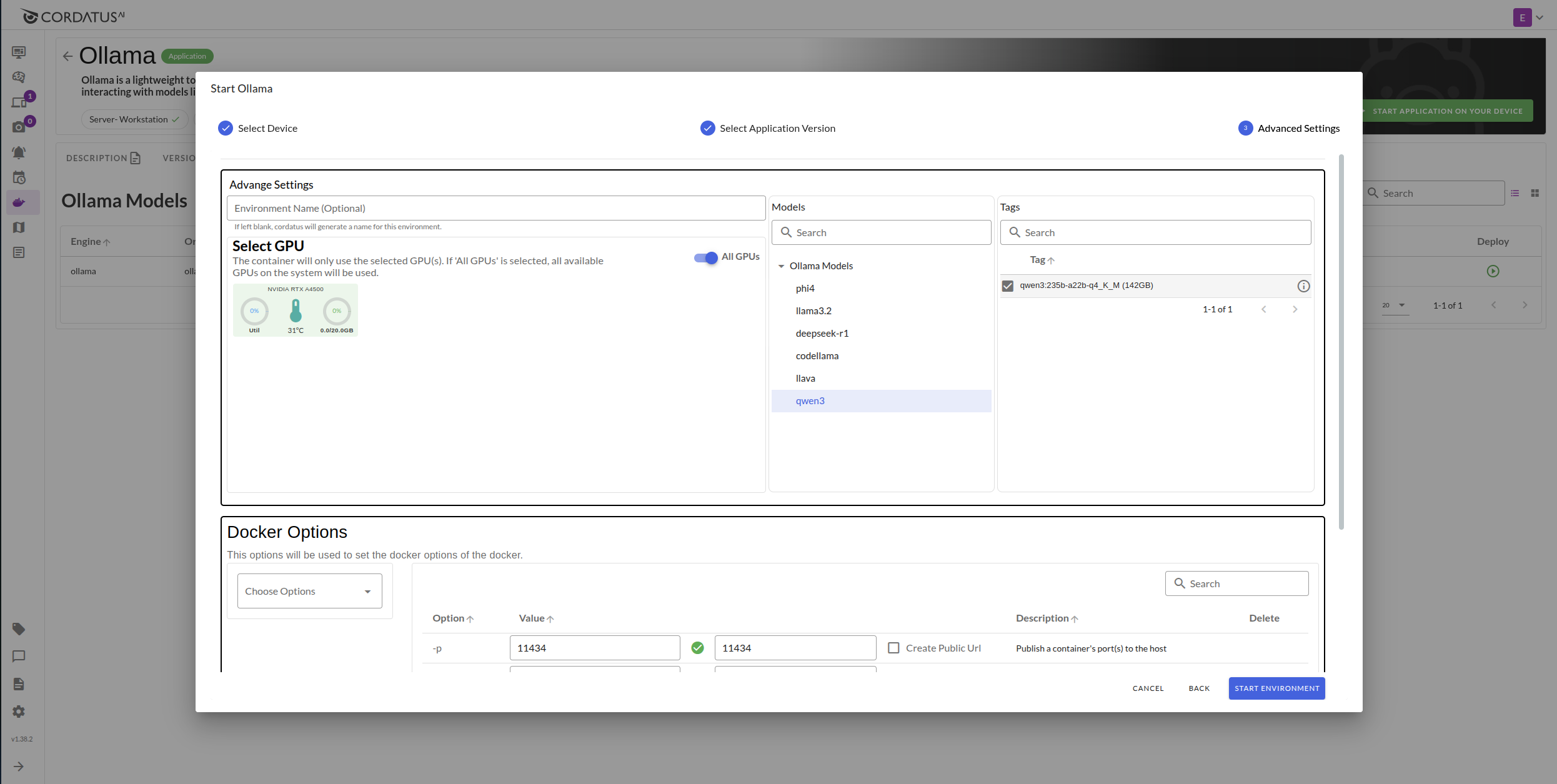

6. Select the desired model.

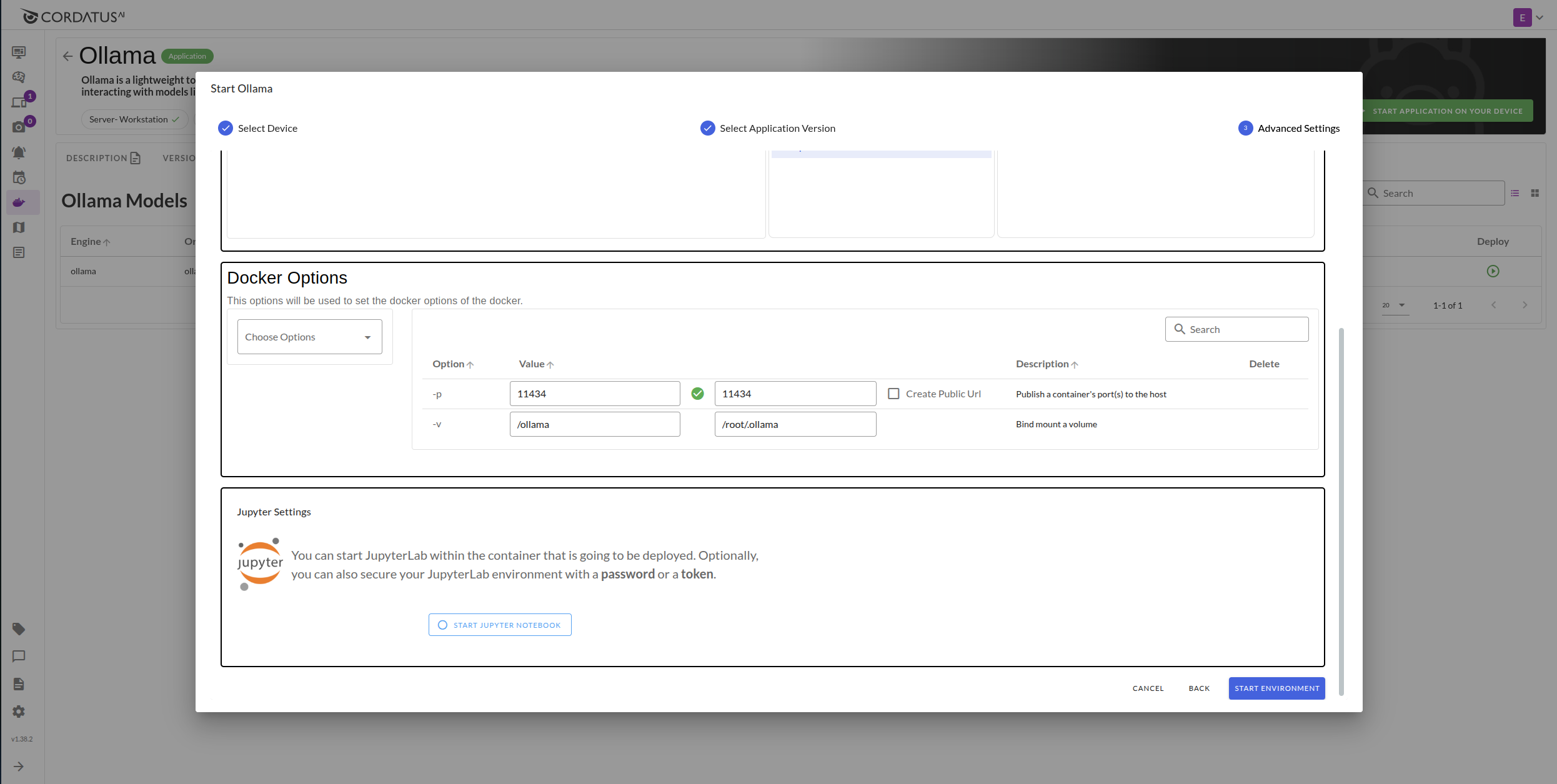

7. Verify the network port for the Ollama API. The default is typically `11434`. Ensure it shows as available. If it does not, check for port conflicts or, where Cordatus settings allow it, choose a different port.

8. Optionally, click Jupyter notebook to enable it.

9. Click Save Environment. This saves your configuration and starts the Ollama service container on your device.

Success! The Ollama service is now running locally, managed by Cordatus.

The model is started with `ollama run qwen3`.
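Once the service is up, it can also be queried over HTTP. The sketch below uses only the Python standard library and Ollama's `/api/generate` endpoint. It assumes the API is reachable at `localhost` on the default port `11434` and that the model tag is `qwen3`. Adjust the host, port, and tag to match your Cordatus configuration. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: default port, service reachable from this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "qwen3") -> urllib.request.Request:
    """Build a non-streaming generate request for the Ollama HTTP API."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "qwen3", timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read())["response"]


# Requires the Ollama container to be running:
# print(ask("What is the shortest way to reverse a list in Python?"))
```

Setting `"stream": False` returns the whole answer in one JSON object, which keeps the example short. For interactive use, streaming is usually the better choice.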