Llama-3.3-Nemotron-Super-49B-v1: Efficient, Scalable Reasoning for Enterprise AI

Llama-3.3-Nemotron-Super-49B-v1 is a powerful large language model (LLM) developed by NVIDIA and based on Meta’s Llama-3.3-70B-Instruct. Post-trained for reasoning, human-aligned dialogue, tool use, and RAG (Retrieval-Augmented Generation), it is a versatile model designed to solve complex, multi-step tasks with high reliability. It supports up to 128K tokens of context, making it ideal for long documents and extended conversations.

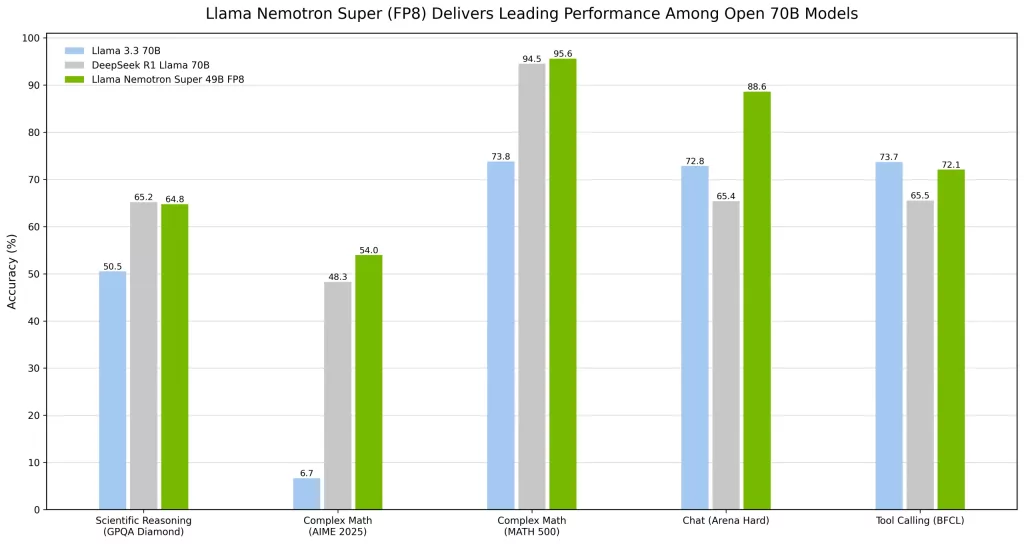

Built using Neural Architecture Search (NAS), the model is optimized to balance accuracy and efficiency. Its reduced memory footprint compared with its 70B parent lets it run on a single H200 GPU, even under heavy workloads, which makes it cost-effective for enterprise-scale applications.
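A quick way to see why a single H200 is plausible is to estimate the memory needed just to hold the weights. The sketch below is a back-of-envelope calculation, not a deployment guide. It assumes roughly 49 billion parameters, 2 bytes per parameter in BF16, 1 byte per parameter in FP8, and 141 GB of memory on one H200. It deliberately ignores the KV cache, activations, and framework overhead, all of which consume part of the remaining headroom, especially at long context lengths.

```python
# Back-of-envelope weight memory estimate.
# Assumption: weights only; KV cache, activations, and runtime overhead
# are NOT included, so real deployments need more memory than shown here.

H200_MEMORY_GB = 141  # memory of one NVIDIA H200, in decimal GB

BYTES_PER_PARAM = {
    "bf16": 2,  # 16-bit weights
    "fp8": 1,   # 8-bit weights
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed to hold the weights alone, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def headroom_gb(num_params: float, dtype: str, gpu_gb: float = H200_MEMORY_GB) -> float:
    """GPU memory left after loading the weights (negative means they do not fit)."""
    return gpu_gb - weight_memory_gb(num_params, dtype)


if __name__ == "__main__":
    # Approximate parameter counts (assumed round numbers).
    models = {"Nemotron Super 49B": 49e9, "Llama-3.3-70B": 70e9}
    for name, params in models.items():
        for dtype in ("bf16", "fp8"):
            print(
                f"{name:20s} {dtype}: "
                f"{weight_memory_gb(params, dtype):6.1f} GB weights, "
                f"{headroom_gb(params, dtype):6.1f} GB left on one H200"
            )
```

Under these assumptions the 49B model's BF16 weights take about 98 GB, leaving roughly 43 GB for the KV cache and runtime, while the 70B parent's BF16 weights alone would take about 140 GB, essentially the whole card. Storing weights in FP8 roughly halves the weight footprint again.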

[Figure: accuracy plot]

What Makes Llama-3.3-Nemotron-Super-49B-v1 Stand Out? Why Should You Use It?

- High-Performance Reasoning: Trained to handle advanced logical tasks, step-by-step problem solving, and human-aligned conversations.
- Neural Architecture Search (NAS) Optimized: Achieves a better balance of compute and accuracy, lowering infrastructure costs while maintaining performance.
- FP8 Chat Model Variant: Available in an FP8 version optimized for general-purpose reasoning and dialogue, supporting multiple languages.
- Multilingual Support: While strongest in English and code, the model also performs well in German, French, Italian, Portuguese, Hindi, Spanish, and Thai.
- Ideal for Real-World AI Systems: Built for RAG pipelines, tool-augmented AI agents, enterprise chat assistants, and more.

Whether you’re building domain-specific assistants, chatbots, or advanced AI reasoning systems, Nemotron Super 49B offers capabilities close to those of a much larger model while staying optimized for efficiency and real-world use.
