Qwen3-Coder: A Massive-Scale Model with Deep Context for Advanced Code Intelligence

What is Qwen3-Coder?

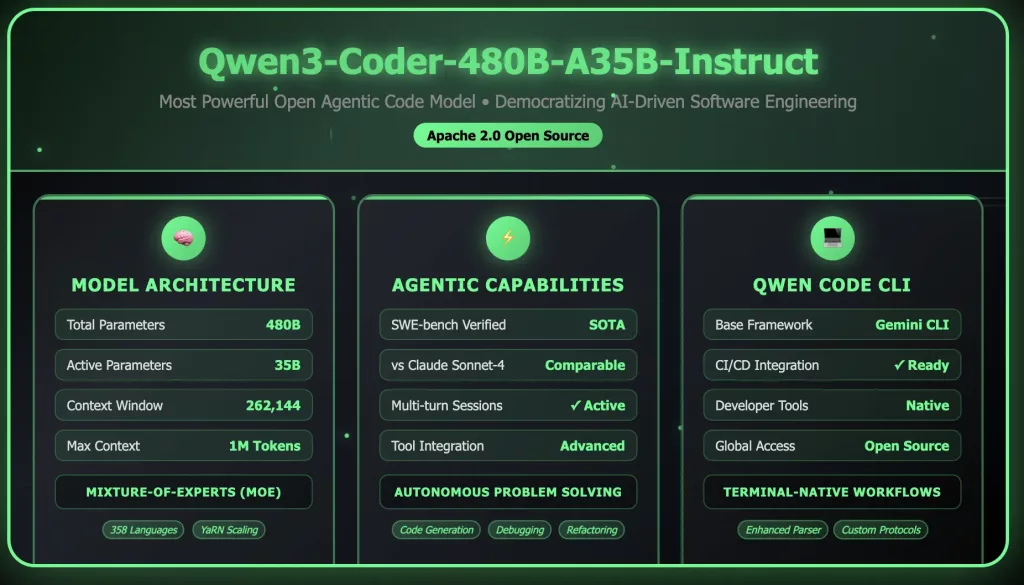

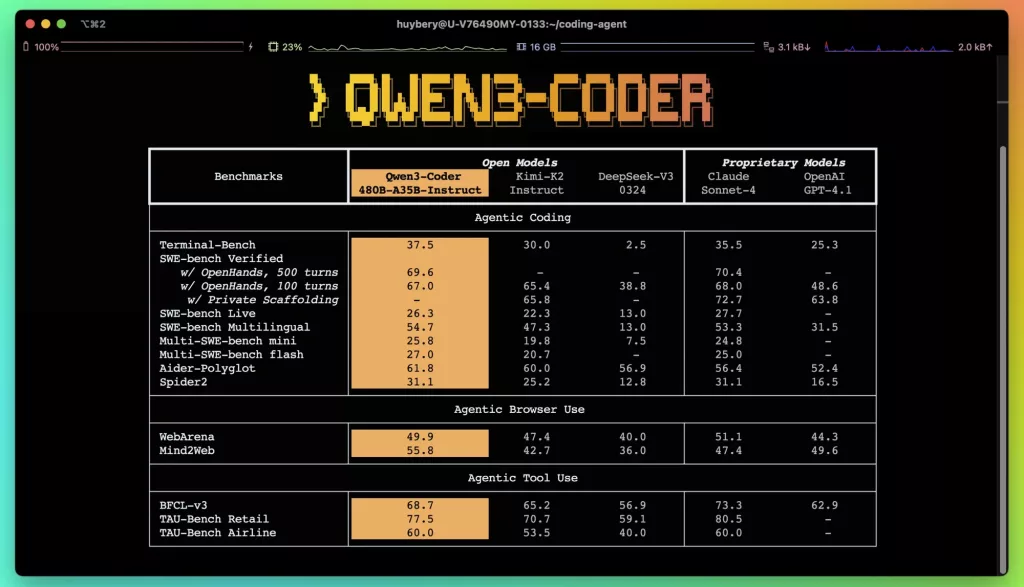

Qwen3-Coder-480B is a cutting-edge open-weight AI coding model developed by the Qwen team at Alibaba Cloud. It is a Mixture-of-Experts model with 480B total parameters, of which about 35B are active at a time, and it supports a native context of 256K tokens (extendable to 1M). Its developers report performance comparable to Claude Sonnet 4 on agentic coding tasks, while the open weights allow full inspection and local deployment.

Designed for agentic coding, Qwen3-Coder plans, executes, and refines complex workflows autonomously. It understands entire repositories—not just snippets—and builds complete, structured solutions like a human developer.

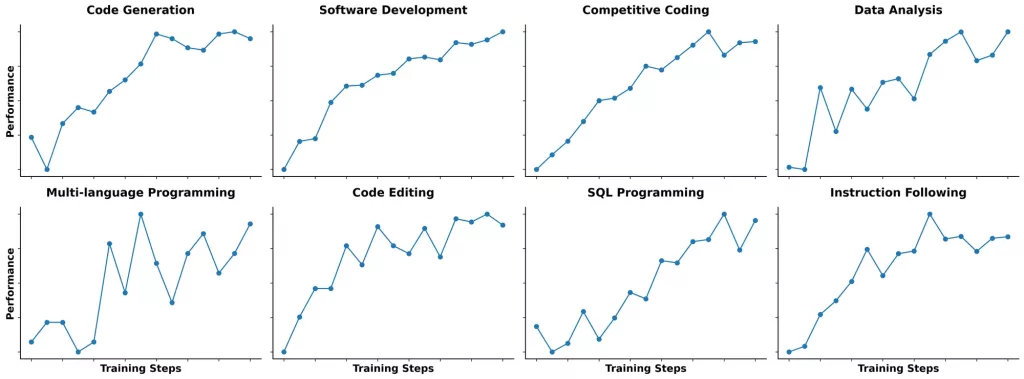

How Does Qwen3-Coder Work?

Qwen3-Coder operates through a sophisticated process that mimics human developer thinking:

- Context Analysis: It processes your entire codebase using its massive 256K token window, understanding project structure and dependencies.

- Task Planning: Breaks down complex problems into executable steps.

- Intelligent Code Generation: The Mixture-of-Experts architecture selectively activates the most relevant experts for each token it generates.

- Self-Validation: Reviews and refines its own output, ensuring code quality and consistency.

Think of it as having access to 480B parameters of coding knowledge but intelligently using only what’s needed for each specific task.
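The selective activation described above can be illustrated with a toy top-k router. This is only a sketch of the general Mixture-of-Experts idea, not Qwen3-Coder's actual implementation: the eight "experts", the router scores, and `k=2` are made-up values chosen for readability, and only the 35B/480B figures come from this article.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_top_k(router_scores, k=2)
output = moe_forward(10.0, experts, router_scores, k=2)

# Share of parameters active at once, using the figures quoted in this article.
active_fraction = 35 / 480
print(selected, output, f"{active_fraction:.1%}")
```

Only two of the eight toy experts do any work for this input, which is the same trade-off the model makes at much larger scale: a large pool of parameters, with a small fraction used per step.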

What is Repository-Scale Understanding?

Repository-scale understanding is Qwen3-Coder’s standout feature that sets it apart from traditional coding assistants. Instead of working with isolated code snippets, it can comprehend entire software projects as cohesive systems.

This capability means Qwen3-Coder can:

- Understand how different modules interact across your entire codebase

- Maintain consistency in coding patterns and architectural decisions

- Identify dependencies and potential conflicts before they become issues

- Generate code that fits seamlessly into existing project structures

The large context window lets it keep track of variable names, function definitions, and architectural patterns across many files at once.
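Whether a given repository actually fits in the window depends on its size. The sketch below gives a rough estimate under stated assumptions: the 4-characters-per-token ratio is a common rule of thumb rather than the model's real tokenizer, the file-extension list and the output reserve are arbitrary, and the function names are hypothetical.

```python
import os
import tempfile

CONTEXT_TOKENS = 256_000  # window size quoted in this article
CHARS_PER_TOKEN = 4       # rough heuristic, NOT the real tokenizer

def estimate_tokens(text):
    """Approximate token count by rounding characters up to whole tokens."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN

def repo_token_estimate(root, extensions=(".py", ".js", ".ts", ".go", ".java")):
    """Sum the estimated tokens of all source files under root."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total

def fits_in_context(root, reserve_for_output=16_000):
    """Leave some room in the window for the model's own answer."""
    return repo_token_estimate(root) <= CONTEXT_TOKENS - reserve_for_output

# Small demonstration on a throwaway directory.
with tempfile.TemporaryDirectory() as demo_root:
    with open(os.path.join(demo_root, "app.py"), "w", encoding="utf-8") as f:
        f.write("x = 1\n" * 100)  # 600 characters
    demo_tokens = repo_token_estimate(demo_root)
    demo_fits = fits_in_context(demo_root)

print(demo_tokens, demo_fits)
```

For an accurate count, the estimate should be replaced with the tokenizer that ships with the model; the heuristic is only useful for a quick first check.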

Agentic Coding in Qwen3-Coder

Agentic coding represents a fundamental shift from traditional AI assistance to autonomous development capabilities. Qwen3-Coder is engineered specifically for what the industry is now calling “agentic AI coding”, which means it can:

- Plan and Execute: Break down complex development tasks into manageable steps

- Self-Correct: Review and improve its own code output

- Multi-Turn Interaction: Engage in extended conversations about code requirements

- Tool Integration: Use external tools and APIs as part of its development process

- Decision Making: Choose appropriate approaches based on project requirements and constraints

This autonomous capability reduces the need for constant developer oversight and can handle entire feature implementations from specification to testing.
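The plan, execute, and self-correct cycle listed above can be sketched as a small loop. Everything here is a stand-in so the example runs without a model: `fake_model` and `fake_test_runner` are hypothetical placeholders for a real model call and a real tool call, and the loop is a generic illustration rather than how Qwen3-Coder is wired internally.

```python
def run_agent(task, propose_fix, run_tests, max_rounds=3):
    """Propose code, run a tool on it, and feed the result back until it passes."""
    history = []
    feedback = None
    for round_no in range(1, max_rounds + 1):
        code = propose_fix(task, feedback)  # model call (stubbed below)
        ok, feedback = run_tests(code)      # tool call (stubbed below)
        history.append((round_no, ok, feedback))
        if ok:
            return code, history
    return None, history  # gave up after max_rounds

# Hypothetical stand-in for the model: the first attempt contains a bug,
# and the second attempt is revised once feedback is available.
def fake_model(task, feedback):
    if feedback is None:
        return "def add(a, b): return a - b"
    return "def add(a, b): return a + b"

# Hypothetical stand-in for a test tool: executes the code and checks one case.
def fake_test_runner(code):
    namespace = {}
    exec(code, namespace)
    if namespace["add"](2, 3) == 5:
        return True, "all tests passed"
    return False, "add(2, 3) returned the wrong value"

final_code, history = run_agent("implement add", fake_model, fake_test_runner)
print(final_code)
print(history)
```

The `max_rounds` cap is a deliberate design choice: an autonomous loop needs a stopping condition so that a task the model cannot solve is handed back to a developer instead of retried indefinitely.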

What Makes Qwen3-Coder Stand Out? Why Should You Use It?

Repository-Scale Understanding: The massive 256K context window allows it to process entire codebases, understanding how different modules interact and maintaining consistency across your project.

Agentic Intelligence: Unlike simple code generators, Qwen3-Coder can break down complex tasks, make decisions, and execute multi-step workflows without constant guidance.

Mixture-of-Experts Architecture: Draws on 480B parameters of knowledge but activates only about 35B at a time, providing enterprise-level intelligence at a much lower compute cost per token than a dense model of the same size.

Conclusion

Qwen3-Coder represents a breakthrough in open-source AI coding technology. With repository-scale understanding, agentic capabilities, and reported performance comparable to Claude Sonnet 4, it offers a compelling alternative to expensive proprietary solutions.

The model’s ability to understand entire codebases and execute complex workflows makes it valuable for modern development teams. It requires significant hardware resources, but for teams that need transparency and local deployment, the open weights and strong performance can justify the investment.

How to Run Qwen3-Coder on Cordatus.ai

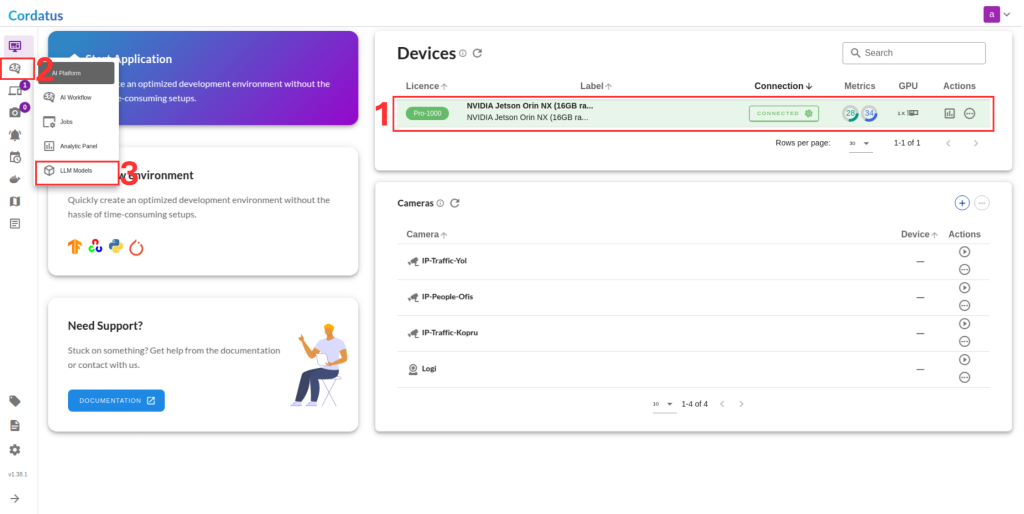

1. Connect to your device and select LLM Models from the sidebar.

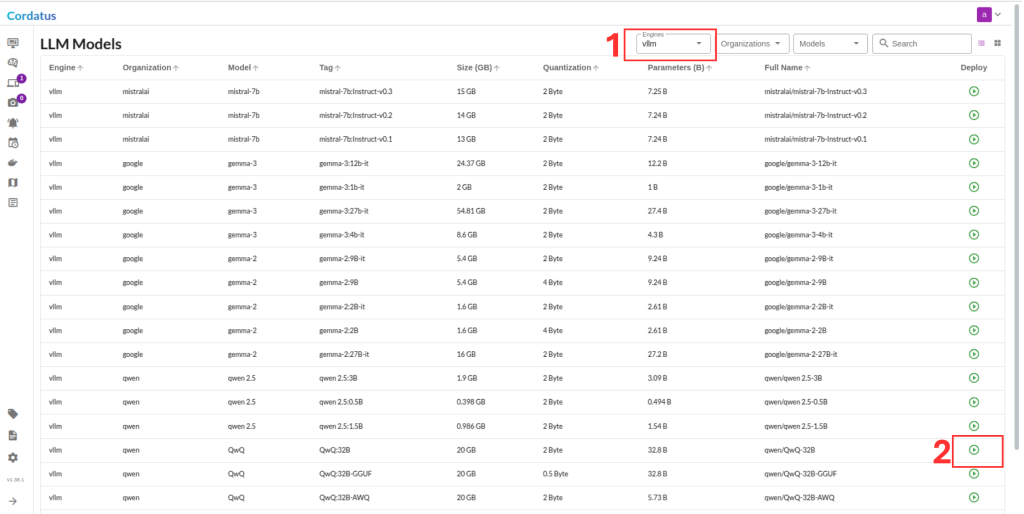

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

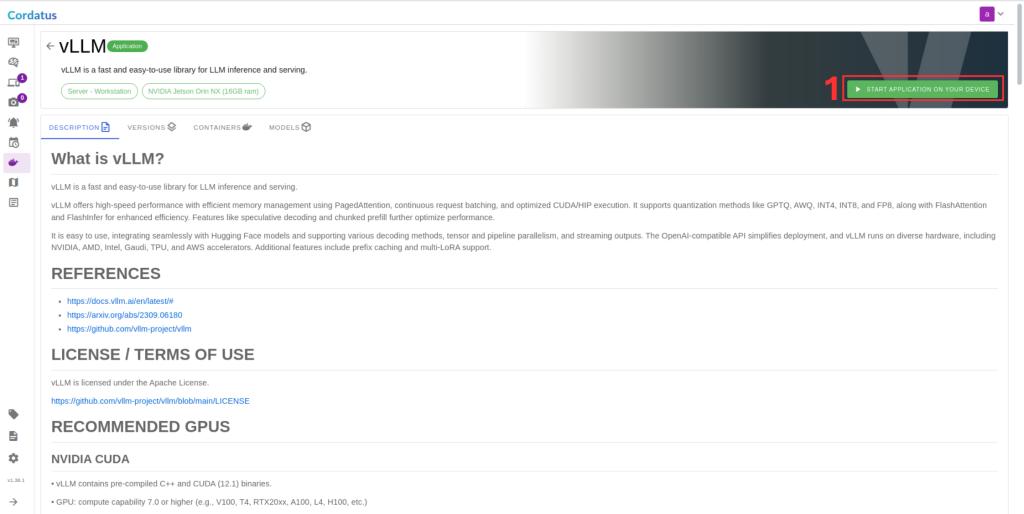

3. Click Run to start the model deployment.

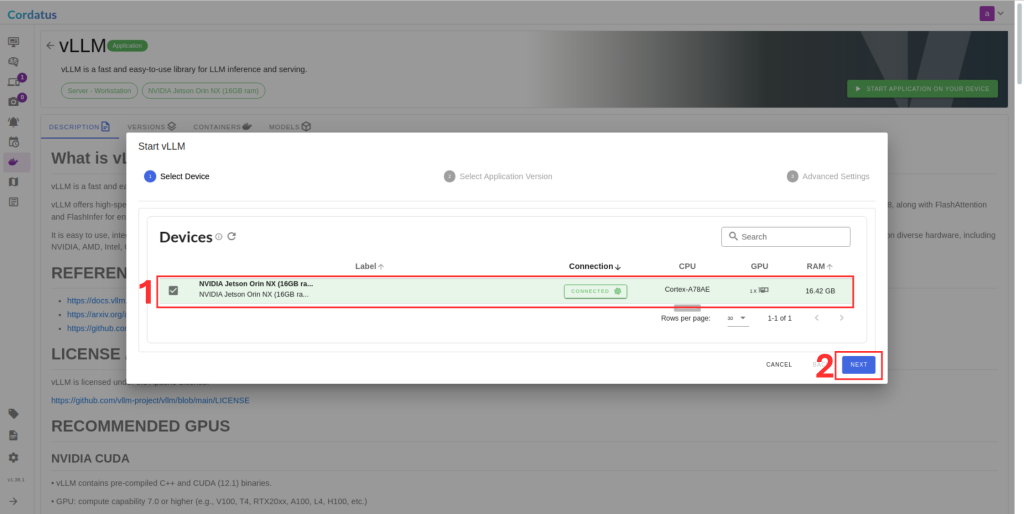

4. Select the target device where the LLM will run.

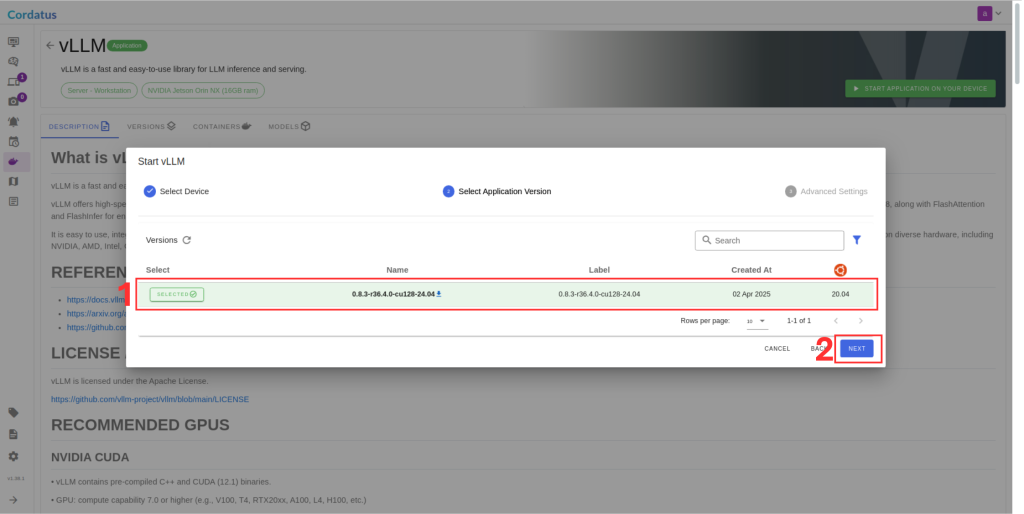

5. Choose the container version (if you are unsure, select the latest).

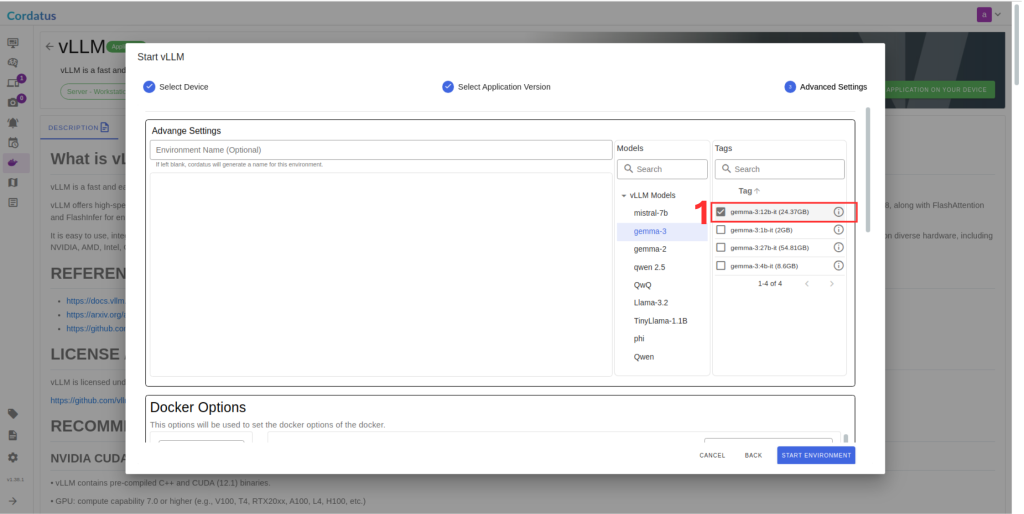

6. Ensure the correct model is selected in Box 1.

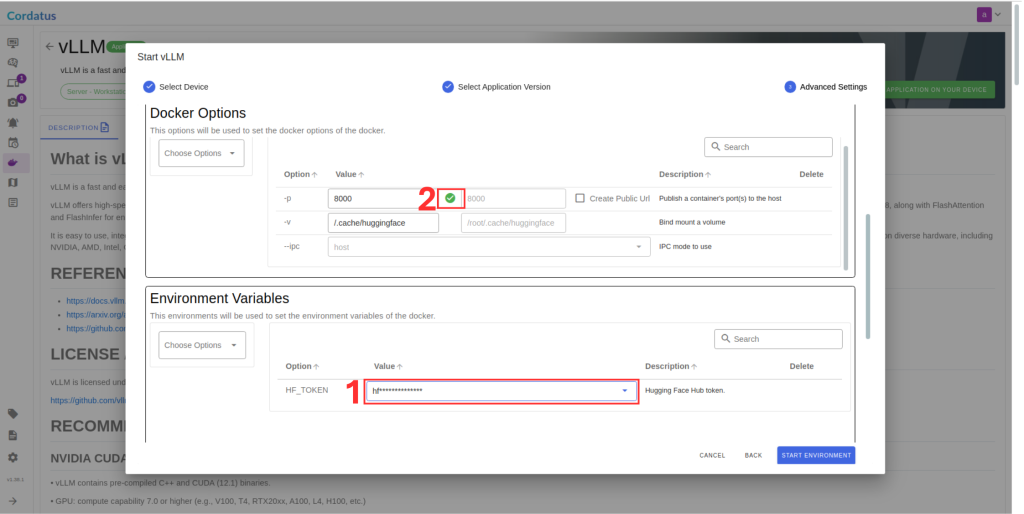

7. Set your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
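Since the deployment uses vLLM, which can serve an OpenAI-compatible HTTP API, a request to the running model might look like the sketch below. Several details are assumptions to adjust for your setup: the host `localhost`, port `8000`, and the model name are placeholders, and whether your Cordatus deployment exposes the `/v1/chat/completions` route on the assigned port should be confirmed in the platform itself.

```python
import json
import urllib.request

def build_chat_request(host, port, model, prompt, api_key=None):
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    url = f"http://{host}:{port}/v1/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # only needed if the server was started with an API key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )

def send(request, timeout=60):
    """Send the request and return the text of the first completion."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

# Placeholder values: replace with your device address, assigned port,
# and the exact model name shown in the deployment.
request = build_chat_request(
    "localhost",
    8000,
    "Qwen/Qwen3-Coder-480B-A35B-Instruct",
    "Write a function that reverses a string.",
)
print(request.full_url)
# reply = send(request)  # uncomment once the model is running
```

Building the request separately from sending it makes it easy to inspect the URL and payload before the model is up.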