GLM-4.1V-Thinking: The 9B Model That Beats 72B Giants

What is GLM-4.1V-Thinking?

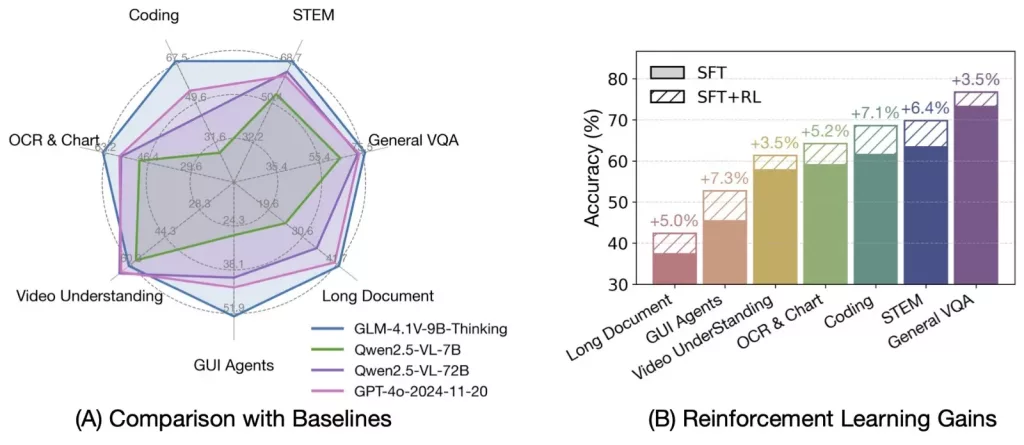

GLM-4.1V-Thinking is an open-source multimodal model developed by Zhipu AI and Tsinghua University that takes images and text as joint input. With just 9 billion parameters, it matches or outperforms much larger models, including the 72-billion-parameter Qwen2.5-VL-72B, on many benchmarks.

The model excels across diverse tasks: solving complex STEM problems, understanding videos, navigating user interfaces, processing long documents, and generating code. Its "thinking" capability refers to step-by-step reasoning that the model writes out before giving its final answer.

How Does GLM-4.1V-Thinking Work?

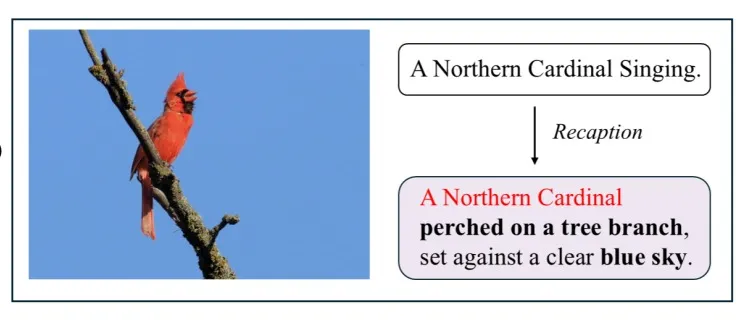

Example of recaption model results. The recaptioning process eliminates noise and hallucinated content from the original data, while fully retaining factual knowledge.

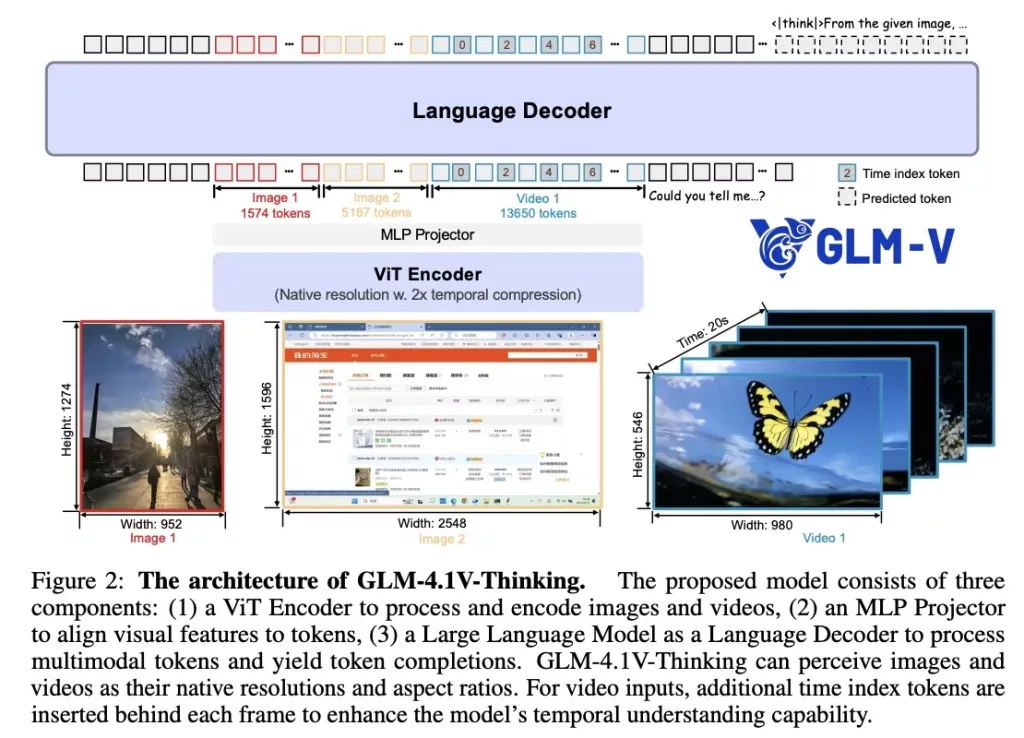

The model uses a three-component architecture designed for efficient multimodal reasoning:

Vision Encoder (ViT): Unlike standard models that resize images and lose quality, GLM-4.1V-Thinking processes images and videos at their native resolutions and aspect ratios. This preserves crucial visual details and handles extreme scenarios – from ultra-wide aspect ratios (over 200:1) to ultra-high resolution images (beyond 4K).

MLP Projector: Acts as an intelligent bridge converting visual features into tokens the language model can understand, enabling seamless integration between visual and textual information.

Language Decoder (GLM): The reasoning core that processes both visual tokens and text. It writes its output in a structured format with <think> and <answer> sections, so the reasoning is visible separately from the final answer.
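The structured output described above is easy to post-process. Here is a minimal sketch, assuming the response wraps its reasoning in `<think>...</think>` and its result in `<answer>...</answer>`; the exact tag syntax and the helper name `split_reasoning` are assumptions for illustration, not part of any official tooling.

```python
import re


def split_reasoning(text: str) -> dict:
    """Separate the reasoning trace from the final answer (hypothetical helper)."""
    think = re.search(r"<think>(.*?)</think>", text, re.DOTALL)
    answer = re.search(r"<answer>(.*?)</answer>", text, re.DOTALL)
    # If there is no <answer> tag, treat everything outside <think> as the answer.
    remainder = re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL)
    return {
        "think": think.group(1).strip() if think else "",
        "answer": answer.group(1).strip() if answer else remainder.strip(),
    }


sample = "<think>The chart peaks in 2021.</think><answer>2021</answer>"
parts = split_reasoning(sample)
print("Reasoning:", parts["think"])
print("Answer:", parts["answer"])
```

Keeping the two parts separate lets an application show only the answer by default and reveal the reasoning on request.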

The model is trained in three stages: massive pre-training on 10 billion image-text pairs, supervised fine-tuning with structured reasoning, and reinforcement learning with the RLCS method.

RLCS: Reinforcement Learning with Curriculum Sampling

GLM-4.1V-Thinking’s breakthrough innovation is RLCS – a revolutionary training method unique to this model. Unlike traditional training that feeds random examples, RLCS acts like a personalized AI tutor, continuously identifying the optimal difficulty level where maximum learning occurs.

The system dynamically adjusts training challenges as the model evolves, automatically introducing harder problems once easier concepts are mastered. This technique, which no other major model currently uses, delivered performance gains of up to 7.3% across various tasks.
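To give a feel for the idea, here is a toy sketch of difficulty-aware sampling. It is not the authors' implementation: the weighting function, the moving-average update, and every name in it are assumptions chosen for illustration. Each task carries an estimated pass rate, and tasks the model solves about half the time are sampled most often, while tasks that are always solved or never solved are skipped.

```python
import random


def sampling_weight(pass_rate: float) -> float:
    """Hypothetical weight: 1.0 at a 50% pass rate, 0.0 at 0% or 100%."""
    return 4.0 * pass_rate * (1.0 - pass_rate)


def sample_batch(pass_rates: dict, k: int, rng: random.Random) -> list:
    """Draw k task names, favoring tasks of intermediate difficulty."""
    names = list(pass_rates)
    weights = [sampling_weight(pass_rates[name]) for name in names]
    if sum(weights) == 0:
        # Every task is trivially easy or currently impossible: sample uniformly.
        return rng.choices(names, k=k)
    return rng.choices(names, weights=weights, k=k)


def update_pass_rate(old: float, solved: bool, momentum: float = 0.9) -> float:
    """Moving-average estimate of how often the model solves a task."""
    return momentum * old + (1.0 - momentum) * (1.0 if solved else 0.0)


rng = random.Random(0)
pass_rates = {"easy_arithmetic": 1.0, "chart_reading": 0.5, "olympiad_geometry": 0.0}
print(sample_batch(pass_rates, k=5, rng=rng))
```

As pass rates are updated after each round, a task that was too hard drifts toward the middle and starts being sampled, which is the "harder problems once easier ones are mastered" behavior in miniature.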

What Makes GLM-4.1V-Thinking Stand Out? Why Should You Use It?

Unprecedented Efficiency: Matches or outperforms Qwen2.5-VL-72B (8x larger) on 18 of 28 benchmarks and even surpasses GPT-4o on challenging benchmarks like MMStar and MathVista.

Complete Open Source Access: Unlike proprietary models, you get full model architecture, training code, and deployment freedom without API costs or licensing restrictions.

Transparent Reasoning: Shows its thinking process step-by-step, making it ideal for education and scenarios requiring explainability.

Native Resolution Processing: Handles images at original quality and extreme aspect ratios (over 200:1) without resizing, preserving crucial visual details other models lose.

Cross-Domain Learning: Training improvements in one area boost performance in others, suggesting deep underlying reasoning abilities that transfer across different types of problems.
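To make the native-resolution point in the list above more concrete, here is a small sketch of how a ViT-style encoder splits an image into patches. The patch size of 14 pixels and the 336x336 resize target are assumptions for illustration only, not confirmed settings of GLM-4.1V-Thinking. The point is that a fixed resize yields the same small grid for every image, while native processing keeps a grid that follows the image's real shape.

```python
def patch_grid(width: int, height: int, patch_size: int = 14) -> tuple:
    """Return (rows, cols, total) patches for an image; patch_size is an assumed value."""
    cols = -(-width // patch_size)   # ceiling division without floats
    rows = -(-height // patch_size)
    return rows, cols, rows * cols


# A 4K frame processed at native resolution.
print(patch_grid(3840, 2160))

# An ultra-wide 200:1 strip keeps its shape instead of being squashed.
print(patch_grid(5600, 28))

# The same images forced into a fixed 336x336 square all collapse to one grid.
print(patch_grid(336, 336))
```

The trade-off is cost: more patches mean more visual tokens for the language decoder to process.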

Conclusion

GLM-4.1V-Thinking proves that smarter training methods triumph over brute-force scaling. Its open-source availability, exceptional cross-domain performance, and innovative RLCS training make it a game-changer for researchers and developers seeking efficient AI solutions.

The future of AI isn’t about bigger models – it’s about smarter ones. GLM-4.1V-Thinking is leading that future.

How to run GLM-4.1V-Thinking on Cordatus.ai?

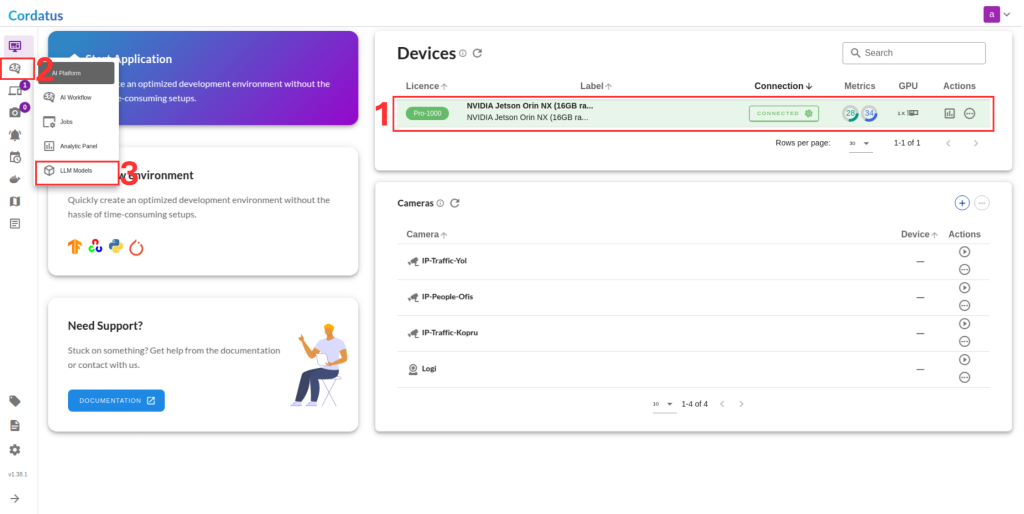

1. Connect to your device and select LLM Models from the sidebar.

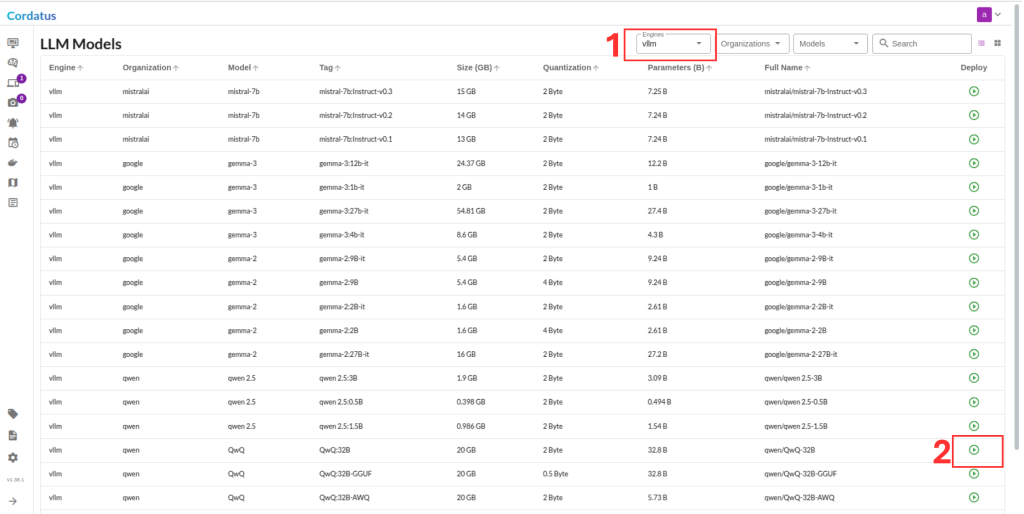

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

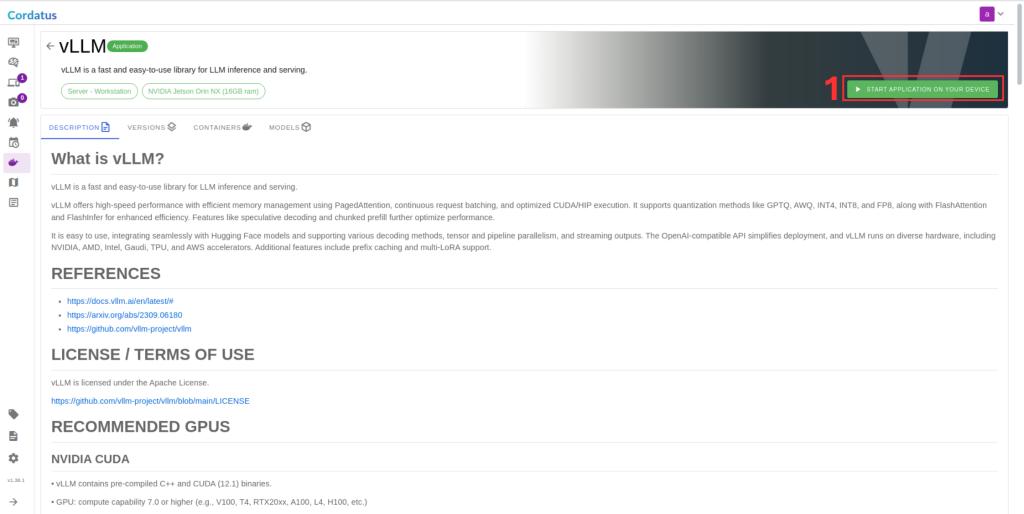

3. Click Run to start the model deployment.

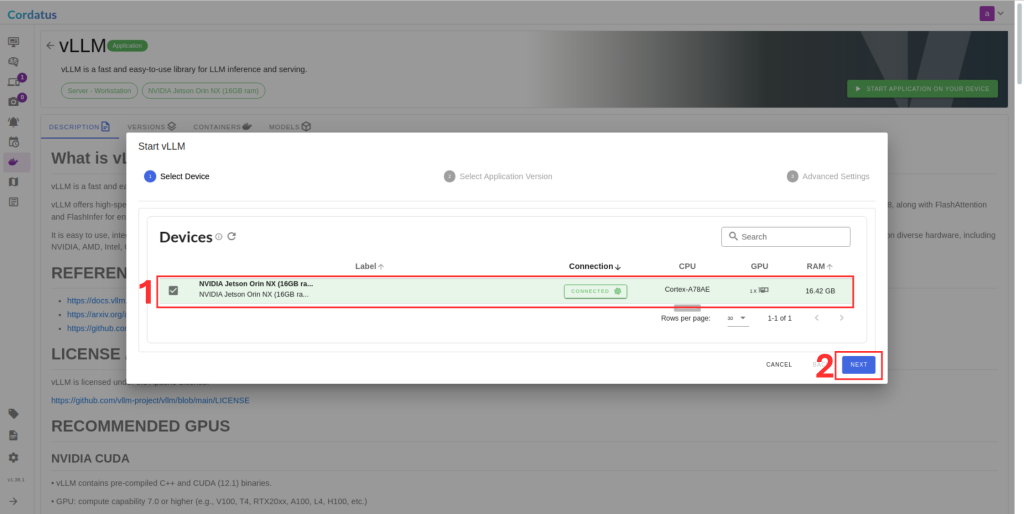

4. Select the target device where the LLM will run.

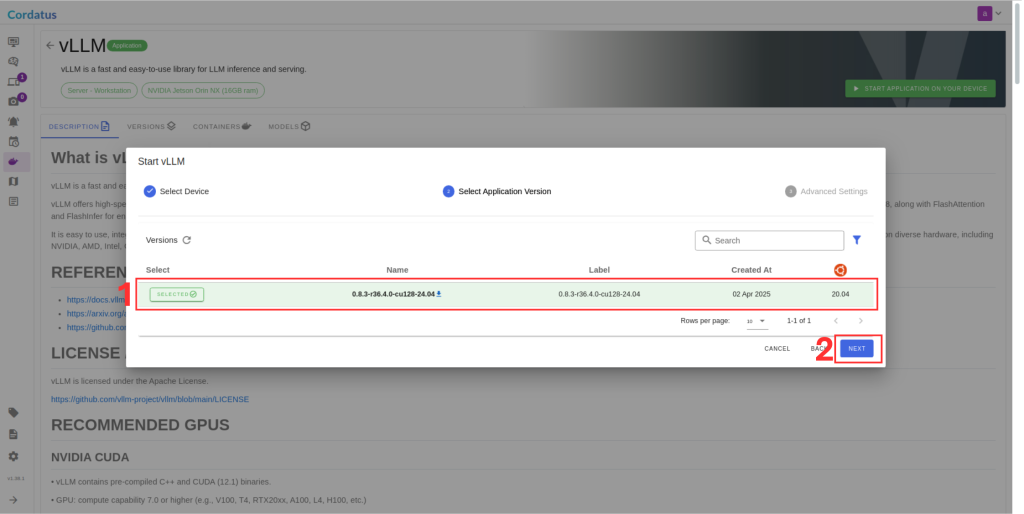

5. Choose the container version (if you are unsure, select the latest).

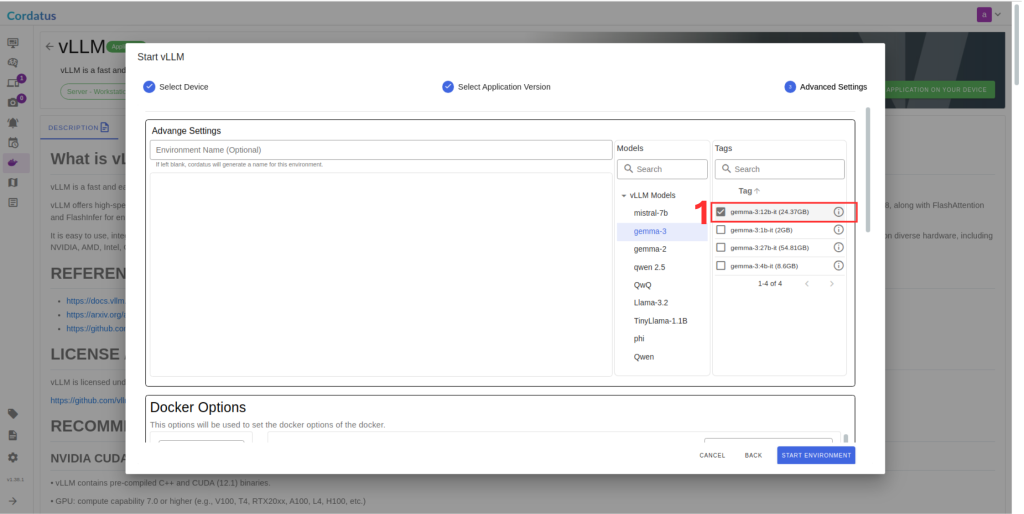

6. Ensure the correct model is selected in Box 1.

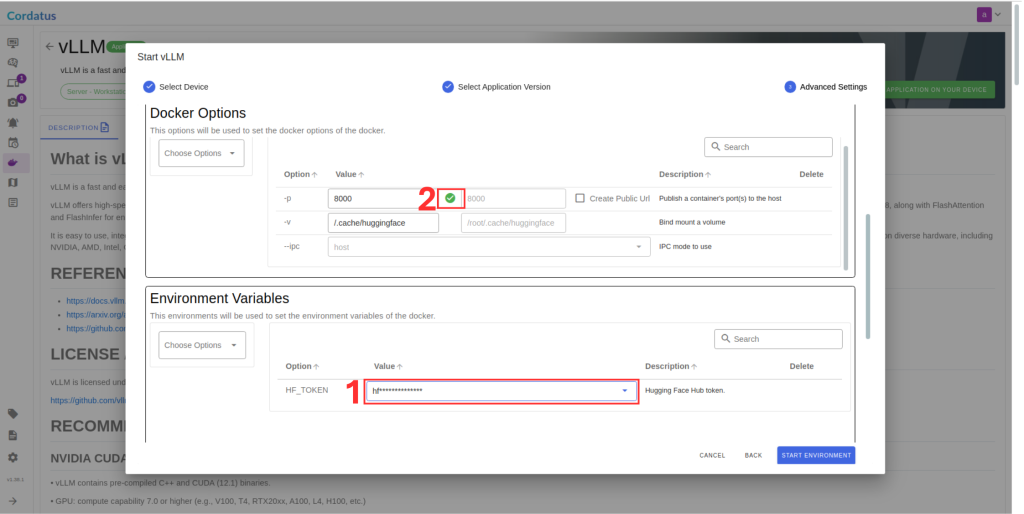

7. Set your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
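Since the deployment runs on vLLM, the model can typically be queried through vLLM's OpenAI-compatible chat endpoint. The sketch below only builds the request; the host, port, model name, and image URL are placeholders, so replace them with the values shown for your own deployment. The `build_chat_request` helper is a hypothetical name used for this example.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, image_url: str, question: str):
    """Build a POST request with one image and one text question (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
        "max_tokens": 1024,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(
    base_url="http://localhost:8000",        # placeholder: use your assigned port
    model="GLM-4.1V-9B-Thinking",            # placeholder: use the name from your deployment
    image_url="https://example.com/chart.png",  # placeholder image
    question="What trend does this chart show?",
)
print(request.full_url)

# Uncomment once the model is running and the placeholders are filled in:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

The network call is left commented out so the snippet can be checked without a running server.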