Kimi-K2: The Open-Source AI That Outperforms GPT-4 in Critical Skills

What is the Kimi-K2-Instruct Model?

Kimi-K2 is a large-scale language model engineered for agentic capabilities, meaning it can autonomously use tools, execute code, and perform complex multi-step tasks. Because its weights are open, anyone can download, use, and modify the model, subject to its license.

Kimi-K2 is a breakthrough in artificial intelligence: a 1-trillion-parameter open-weight model that delivers state-of-the-art performance on reasoning, coding, and tool-use tasks. Developed by Moonshot AI, Kimi-K2 uses a Mixture-of-Experts (MoE) architecture and new training methods to rival leading closed-source models like GPT-4 and Claude, making top-tier AI broadly accessible.

How Does Kimi-K2 Work?

Kimi-K2’s exceptional performance is driven by its unique architecture and training methodology.

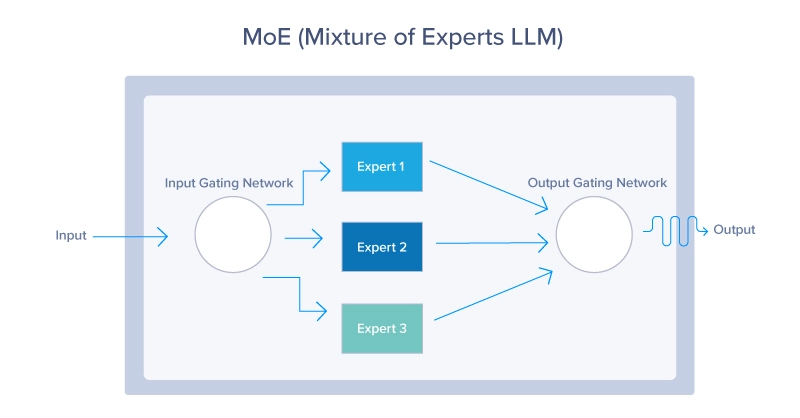

Mixture-of-Experts (MoE) Architecture: Kimi-K2 is composed of 384 specialized “expert” sub-networks. For each token, a router sends the computation through just 8 of those experts, so only about 32 billion of the model's 1 trillion parameters are active at a time. This keeps inference efficient without giving up the capacity of the full 1-trillion-parameter scale.
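To make the routing idea concrete, here is a minimal sketch of top-k expert selection in plain Python. The expert count (384) and the number of active experts (8) come from the description above; everything else is an assumption for illustration. In particular, the softmax-over-selected-experts gating and the toy scalar "experts" are a generic MoE pattern, not Kimi-K2's confirmed router.

```python
import math
import random

NUM_EXPERTS = 384  # total experts, per the article
TOP_K = 8          # experts activated per token, per the article


def route_token(router_logits, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and normalize their gate weights."""
    # Indices of the top_k largest router logits.
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Softmax over only the chosen logits (subtract the max for numerical stability).
    # Assumption: a generic gating scheme, not necessarily the one Kimi-K2 uses.
    peak = max(router_logits[i] for i in chosen)
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(token, experts, router_logits):
    """Weighted sum of the outputs of the selected experts only."""
    gates = route_token(router_logits)
    return sum(weight * experts[i](token) for i, weight in gates.items())


# Toy setup: each "expert" just scales its input; real experts are feed-forward networks.
rng = random.Random(0)
experts = [lambda x, s=rng.uniform(0.5, 1.5): s * x for _ in range(NUM_EXPERTS)]
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(logits)
output = moe_forward(2.0, experts, logits)
print(f"experts used: {len(gates)} of {NUM_EXPERTS}, output: {output:.3f}")
```

The other 376 experts are never evaluated for this token, which is where the efficiency comes from: compute scales with the experts that fire, not with the total parameter count.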

Agentic Intelligence by Design: Unlike models that only learn from text, Kimi-K2 was explicitly trained to act. Through a process called Large-Scale Agentic Data Synthesis, it “practiced” using tools like web browsers and code interpreters, learning to create and execute multi-step plans to solve complex problems.

Advanced Training and Optimization: The model was trained with the MuonClip optimizer, which kept training stable at the trillion-parameter scale. This was followed by reinforcement learning and self-critique, allowing the model to refine its own outputs against success criteria.

Benefits and Use Cases

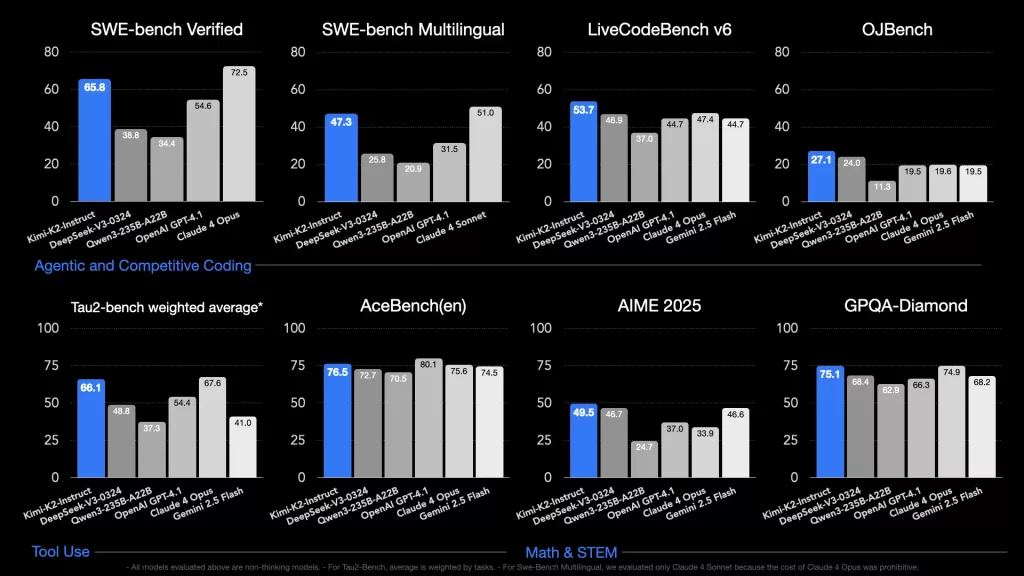

Superior Performance: Kimi-K2 matches or exceeds proprietary models on critical benchmarks. It scores 53.7% on LiveCodeBench (surpassing GPT-4.1) and 65.8% on SWE-bench with tools. It also achieves strong accuracy on mathematical reasoning, with a 69.6% average on AIME 2024 and 97.4% on MATH-500. See the accompanying benchmark chart for details.

Cost-Effective Power: As an open-weight model, Kimi-K2 offers a powerful alternative to expensive, closed-source APIs. API access through platforms like Together AI still has a cost, but it is typically much lower than that of comparable closed-source APIs. This makes high-end AI accessible for developers, researchers, and startups.

Applications:

- Software Development: Excels at writing, debugging, and optimizing code.

- Data Analysis: Performs complex data manipulation and visualization tasks.

- Mathematical Problem-Solving: Tackles advanced, competition-level math problems.

- Tool Integration & Automation: Seamlessly works with external tools and APIs to execute workflows.

Key Questions & Answers

How does Kimi-K2 compare to GPT-4 and Claude?

Kimi-K2 demonstrates comparable or superior performance to models like GPT-4.1 and Claude Opus in coding, math, and tool-use benchmarks. While closed models may have an edge in general knowledge, Kimi-K2’s open nature and specialized training make it a powerful, cost-effective alternative.

What hardware is needed to run Kimi-K2 locally?

Running the full 1-trillion-parameter model, whose weights occupy roughly 1 terabyte, requires significant hardware, such as systems with multiple high-end GPUs or machines like Apple’s M3 Ultra with large amounts of unified memory. For most users, accessing the model via cloud-based inference providers is the most practical solution.
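A quick back-of-the-envelope calculation shows why the hardware bar is so high. The sketch below estimates the memory needed for the weights alone at a few storage precisions. The precisions and the 80 GB per-device figure are illustrative assumptions, not a statement about how any particular checkpoint is distributed.

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion parameters, per the article

# Bytes per parameter for some common storage precisions (illustrative choices).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8/int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_devices(total_gb, device_memory_gb):
    """Smallest device count whose combined memory holds the weights (ceiling division)."""
    return int(-(-total_gb // device_memory_gb))


for name, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, nbytes)
    # 80 GB per accelerator is a hypothetical figure chosen for illustration.
    print(f"{name}: {gb:.0f} GB of weights, at least {min_devices(gb, 80)} x 80 GB devices")
```

These numbers are a lower bound. A real deployment also needs memory for the KV cache, activations, and runtime overhead, so the practical requirement is higher.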

Can Kimi-K2 be used for commercial projects?

Yes. It is released under a Modified MIT License, which generally permits commercial use. This allows businesses to build and deploy applications using Kimi-K2 without the restrictive licensing or high costs of proprietary models.

What makes its “agentic” capabilities special?

Its agentic skills come from explicit training to use tools and act, not just generate text. It can create a plan, execute it, and adjust based on the results, making it ideal for tasks that require genuine problem-solving.
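The plan, execute, adjust cycle can be sketched as a simple loop. This is a toy illustration under stated assumptions, not Kimi-K2's actual tool-calling interface: `stub_model`, `calculator`, `TOOLS`, and the action dictionary format are all hypothetical names invented for the example, and the stub stands in for a real model call.

```python
def calculator(expression):
    """Toy tool: evaluate a simple 'a op b' arithmetic expression."""
    left, op, right = expression.split()
    a, b = float(left), float(right)
    return {"+": a + b, "-": a - b, "*": a * b, "/": a / b}[op]


TOOLS = {"calculator": calculator}  # hypothetical tool registry


def stub_model(history):
    """Stand-in for the LLM: chooses the next action from the conversation so far.

    A real deployment would send `history` to the model and parse its reply.
    """
    observations = [m["content"] for m in history if m["role"] == "tool"]
    if not observations:
        # First step of the plan: compute the product.
        return {"action": "call_tool", "tool": "calculator", "input": "12 * 7"}
    if len(observations) == 1:
        # Adjust the next step using the previous tool result.
        return {"action": "call_tool", "tool": "calculator",
                "input": f"{observations[0]} + 16"}
    return {"action": "finish", "answer": observations[-1]}


def run_agent(task, model, tools, max_steps=5):
    """Loop: ask the model for an action, run the tool, feed the result back."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model(history)
        if step["action"] == "finish":
            return step["answer"]
        result = tools[step["tool"]](step["input"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 12 * 7 + 16?", stub_model, TOOLS)
print(answer)
```

The important part is the feedback edge: each tool result is appended to the history, so the next decision can depend on what actually happened rather than on the original plan alone.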

Conclusion

Kimi-K2 represents a major milestone in the democratization of AI. By combining a massive 1-trillion-parameter scale with an efficient Mixture-of-Experts design and open-weight access, it proves that world-class performance does not need to be locked behind a paywall. Its exceptional agentic capabilities in coding, reasoning, and tool use make it a powerful asset for developers and researchers.

As the AI landscape evolves, models like Kimi-K2 are closing the gap between proprietary and open-source ecosystems, paving the way for a new wave of innovation built on accessible, transparent, and powerful technology.

Ready to explore? Visit the official GitHub repository or try it on platforms like Hugging Face.

How to run Kimi-K2-Instruct on Cordatus.ai?

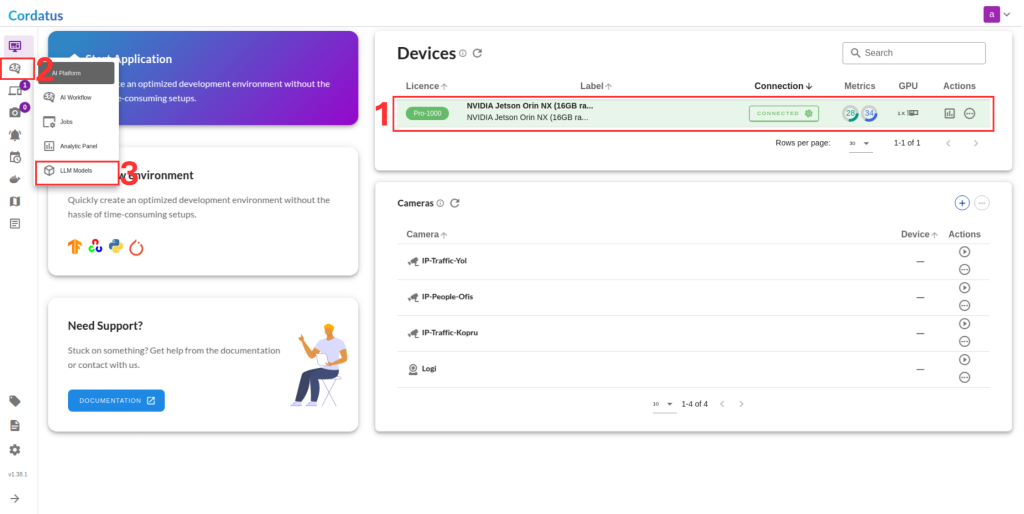

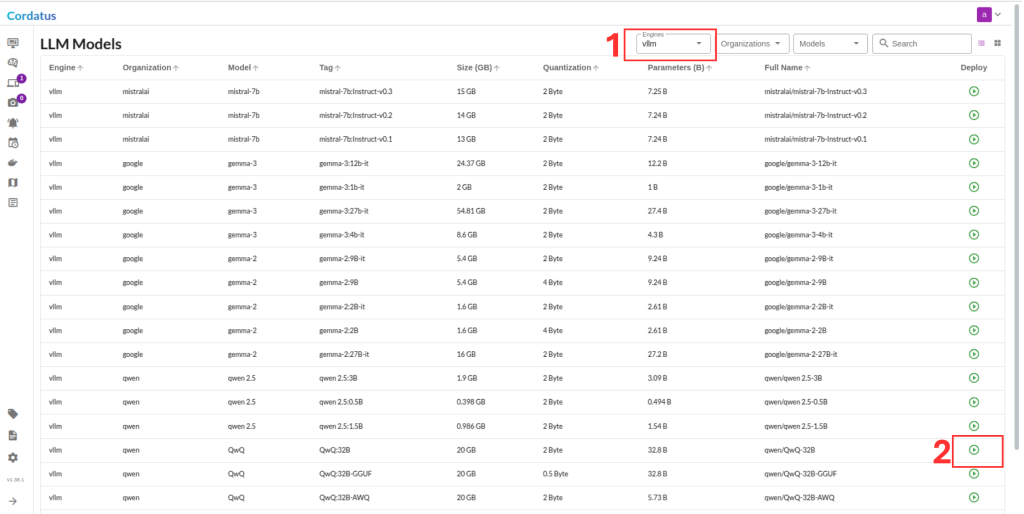

1. Connect to your device and select LLM Models from the sidebar.

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

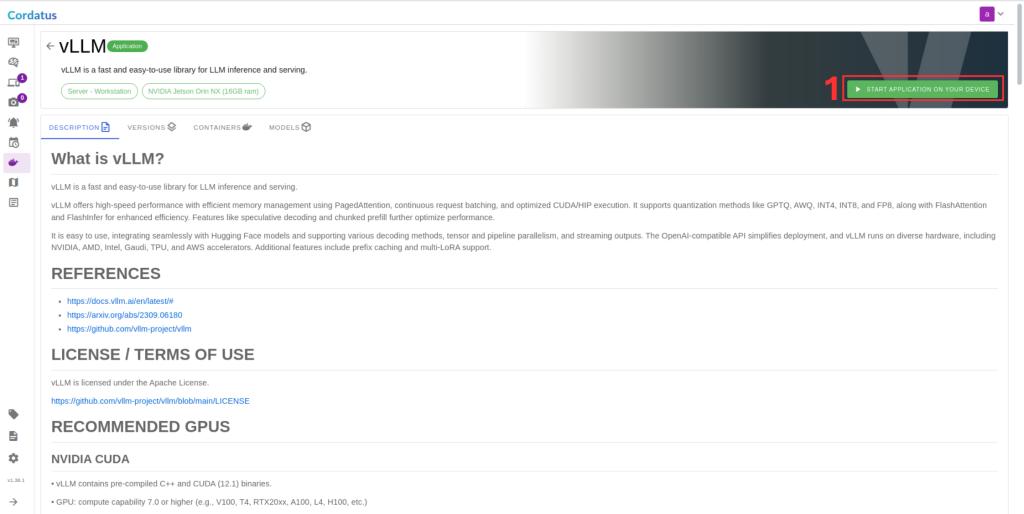

3. Click Run to start the model deployment.

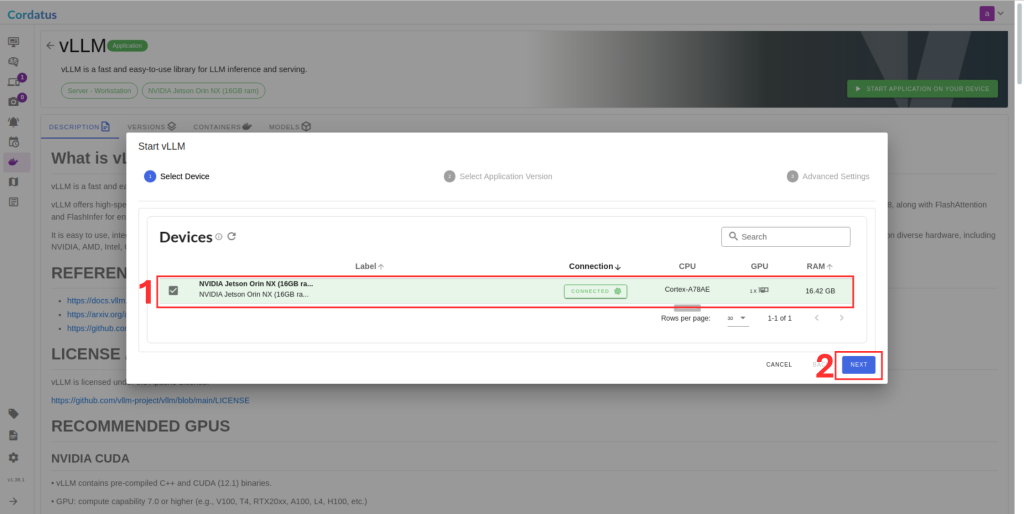

4. Select the target device where the LLM will run.

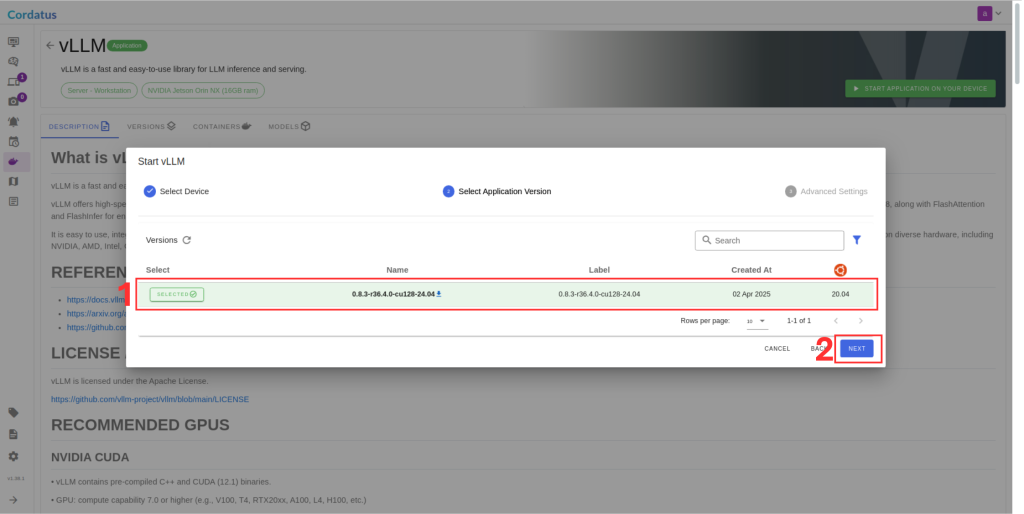

5. Choose the container version (if you are unsure, select the latest).

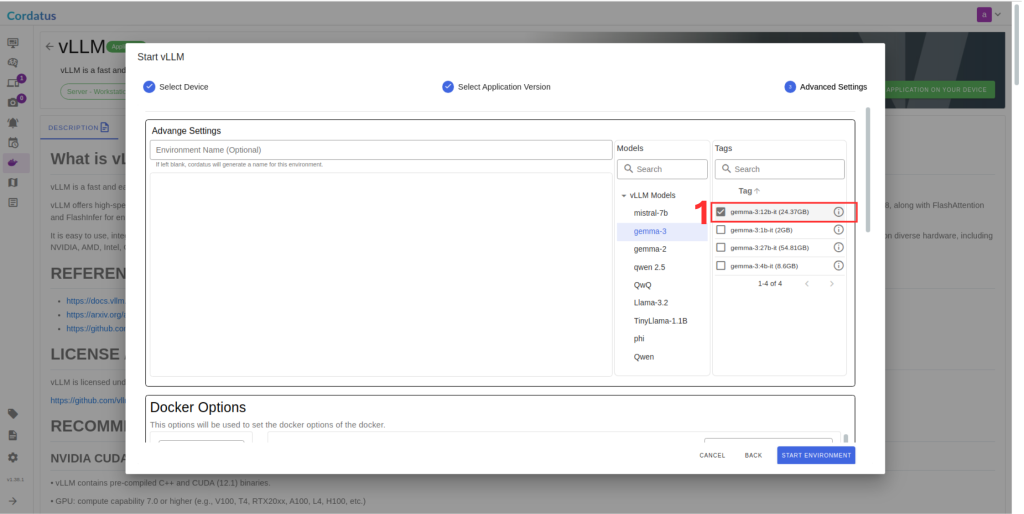

6. Ensure the correct model is selected in Box 1.

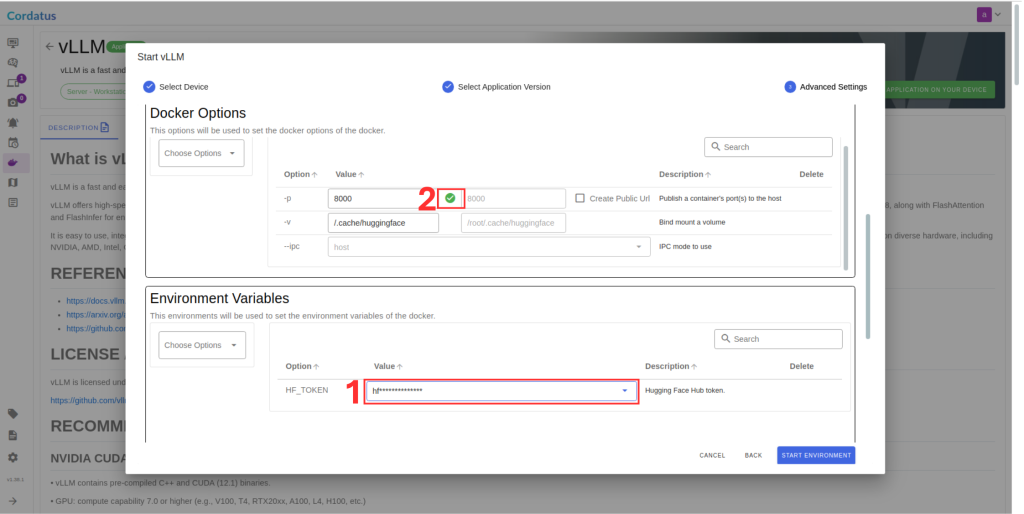

7. Set your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
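Once the model is up, you can talk to it over HTTP. vLLM serves an OpenAI-compatible API, so a chat request might look like the sketch below. The host, port, and model name are assumptions for illustration: replace `BASE_URL` with the address and port Cordatus assigns, and `MODEL_NAME` with the name your deployment reports.

```python
import json
import urllib.request

# Assumptions for illustration: replace with the host and port Cordatus assigns.
BASE_URL = "http://localhost:8000"
# Hugging Face repo id, used here as the served model name (assumption).
MODEL_NAME = "moonshotai/Kimi-K2-Instruct"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME, max_tokens=256):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example (requires the model to be running and reachable):
# print(ask("Write a one-line Python function that reverses a string."))
```

Building the request separately from sending it makes it easy to inspect the payload before pointing it at a live endpoint.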