LFM2: The Fastest On-Device Foundation Model

What is LFM2?

LFM2 is Liquid AI’s foundation model designed specifically for edge computing and on-device deployment. Unlike models that depend on powerful cloud servers, LFM2 runs efficiently on smartphones, laptops, and embedded systems while aiming to deliver quality comparable to cloud-hosted models.

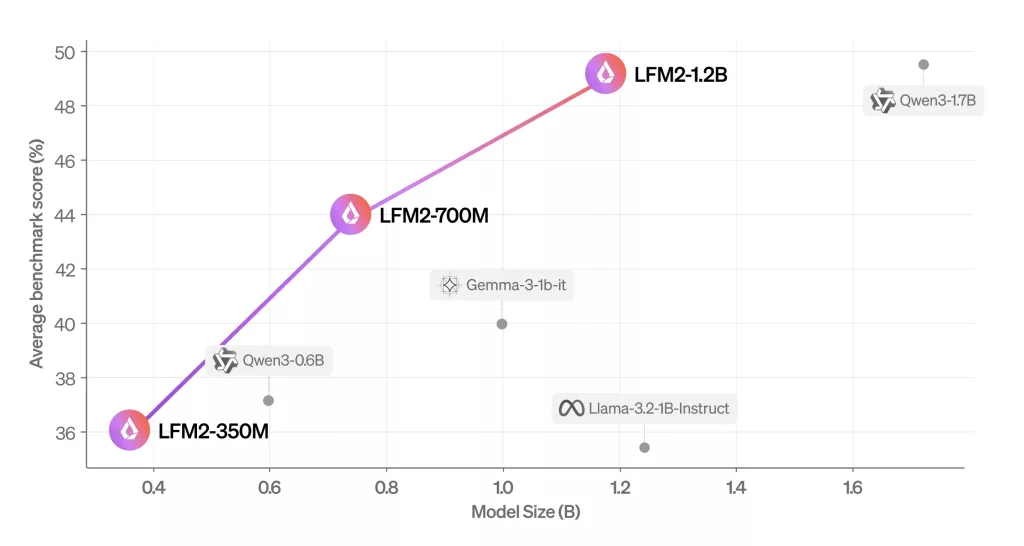

LFM2 is available in three sizes (350M, 700M, and 1.2B parameters), each optimized for maximum performance with minimal memory and energy consumption.
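To put the three sizes in perspective, here is a back-of-the-envelope estimate of how much memory the weights alone occupy at common precisions. This is a rough sketch: it assumes the nominal parameter counts from the model names and ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint.

```python
# Rough, weight-only memory estimate for the three LFM2 sizes.
# Assumptions: nominal parameter counts taken from the model names,
# no KV cache / activations / runtime overhead, and 1 GB = 1e9 bytes.

NOMINAL_PARAMS = {
    "LFM2-350M": 350e6,
    "LFM2-700M": 700e6,
    "LFM2-1.2B": 1.2e9,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate size of the weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        row = ", ".join(
            f"{prec}: {weight_memory_gb(params, prec):.2f} GB"
            for prec in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

By this arithmetic, the 1.2B model's weights need about 2.4 GB at bf16 and about 0.6 GB if quantized to 4 bits, which is why precision matters so much on phones and embedded boards.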

How Does LFM2 Work?

LFM2 uses a hybrid architecture that combines convolution blocks with attention blocks. Of its 16 blocks, 10 are double-gated short-range LIV convolutions and 6 are grouped query attention (GQA) blocks.
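The attention blocks use grouped query attention, in which several query heads share a single key/value head. The minimal pure-Python sketch below illustrates the general GQA technique for one query position, along with the per-token key/value cache it implies. It is not LFM2's implementation, the function names are illustrative, and the head counts in the usage note are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per sequence position


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def kv_head_for(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the key/value head its group shares."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def gqa_attention(queries: List[Vector], keys: List[Matrix],
                  values: List[Matrix]) -> List[Vector]:
    """Scaled dot-product attention for a single query position.

    queries: one query vector per query head
    keys, values: one (seq_len x head_dim) matrix per key/value head
    """
    n_q, n_kv = len(queries), len(keys)
    outputs = []
    for h, q in enumerate(queries):
        kv = kv_head_for(h, n_q, n_kv)  # several query heads reuse this K/V
        scale = 1.0 / math.sqrt(len(q))
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k_row))
                  for k_row in keys[kv]]
        weights = softmax(scores)
        head_dim = len(values[kv][0])
        out = [sum(w * v_row[d] for w, v_row in zip(weights, values[kv]))
               for d in range(head_dim)]
        outputs.append(out)
    return outputs


def kv_cache_bytes_per_token(n_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Keys plus values stored for one token in one attention layer."""
    return 2 * n_kv_heads * head_dim * bytes_per_value
```

For example, with a hypothetical 32 query heads, 8 key/value heads, and a head dimension of 64 at 16-bit precision, `kv_cache_bytes_per_token(8, 64)` gives 2,048 bytes per token per layer, a quarter of the 8,192 bytes that full multi-head attention with 32 key/value heads would store.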

The key building blocks are Linear Input-Varying (LIV) operators, whose weights are generated on the fly from the input they’re processing. This lets the model adapt its computation to each input while keeping its size compact.
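The convolution side of the design can be sketched as follows. This illustrative pure-Python version assumes the double-gated block applies one input-dependent multiplicative gate before a short causal convolution and another after it; it is not Liquid AI's code, and the projection matrices (`w_b`, `w_c`, `w_x`, `w_out`), the kernel, and all function names are hypothetical.

```python
from typing import List

Vector = List[float]
Seq = List[Vector]       # one vector per time step
Weights = List[Vector]   # (out_dim x in_dim) matrix


def matvec(w: Weights, x: Vector) -> Vector:
    """Multiply a weight matrix by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def hadamard(a: Vector, b: Vector) -> Vector:
    """Element-wise (multiplicative) gating."""
    return [ai * bi for ai, bi in zip(a, b)]


def causal_short_conv(seq: Seq, kernel: List[Vector]) -> Seq:
    """Depthwise causal convolution with a short kernel.

    kernel[k][c] weights channel c of the input k steps in the past, so each
    output depends only on the current and previous len(kernel) - 1 inputs.
    """
    out = []
    for t in range(len(seq)):
        y = [0.0] * len(seq[t])
        for k, tap in enumerate(kernel):
            if t - k < 0:
                break
            for c, weight in enumerate(tap):
                y[c] += weight * seq[t - k][c]
        out.append(y)
    return out


def double_gated_short_conv(seq: Seq, w_b: Weights, w_c: Weights, w_x: Weights,
                            kernel: List[Vector], w_out: Weights) -> Seq:
    """Hypothetical double-gated short convolution block (illustrative only)."""
    b_gate = [matvec(w_b, h) for h in seq]   # input-dependent gate before the conv
    c_gate = [matvec(w_c, h) for h in seq]   # input-dependent gate after the conv
    values = [matvec(w_x, h) for h in seq]
    gated_in = [hadamard(b, v) for b, v in zip(b_gate, values)]
    mixed = causal_short_conv(gated_in, kernel)
    gated_out = [hadamard(c, m) for c, m in zip(c_gate, mixed)]
    return [matvec(w_out, g) for g in gated_out]
```

In this sketch the effective weight applied to the input `k` steps back is `C_t * kernel[k] * B_(t-k)`, which changes from token to token because both gates are computed from the input. That input dependence is the "input-varying" part, and the short kernel keeps the per-token cost and the carried state small.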

What Makes LFM2 Stand Out? Why Should You Use It?

STAR Neural Architecture Search: LFM2’s architecture was discovered automatically by Liquid AI’s proprietary STAR engine, which searched for the best balance of quality, memory usage, and speed for edge deployment.

Linear Input-Varying (LIV) Operators: LFM2’s convolution blocks combine multiplicative gates with short convolutions, so their effective weights depend on the input. These LIV operators let the model adapt to each input while staying efficient.

Multiplicative Gates: The 10 double-gated short convolution blocks use input-dependent gates to filter relevant information while keeping memory use low.

Knowledge Distillation Training: LFM2 is trained on 10T tokens with the larger LFM1-7B model as a teacher, which helps the smaller models approach the performance of much bigger ones.
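The article does not spell out LFM2's exact distillation objective. As a point of reference, the classic soft-target formulation trains the student to match the teacher's temperature-softened next-token distribution by minimizing a KL divergence. The sketch below implements that generic loss for a single token position; the function names and the default temperature are illustrative assumptions.

```python
import math
from typing import List


def log_softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """T^2 * KL(teacher || student) over softened next-token distributions."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lt) * (lt - ls)
             for lt, ls in zip(log_p_teacher, log_p_student))
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures
    return temperature ** 2 * kl
```

In a typical setup this loss is averaged over every position in a batch and often combined with the ordinary next-token cross-entropy; how LFM2 weights such terms is not stated here.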

Key Performance Benefits

Advanced Training Pipeline: LFM2 employs a sophisticated three-stage training process: knowledge distillation from LFM1-7B, large-scale supervised fine-tuning on downstream tasks, and custom Direct Preference Optimization with semi-online data generation.
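For reference, the original Direct Preference Optimization objective is sketched below. Liquid AI describes its variant as custom and pairs it with semi-online data generation, neither of which is detailed in this article, so treat this as the textbook loss rather than LFM2's recipe; `beta` and the argument names are illustrative.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Original DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under
    either the model being trained (policy) or the frozen reference model.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(z)) == softplus(-z)
    return softplus(-z)
```

When the policy and reference models agree, the loss is log 2; it falls below that as the policy raises the likelihood of the preferred response relative to the rejected one.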

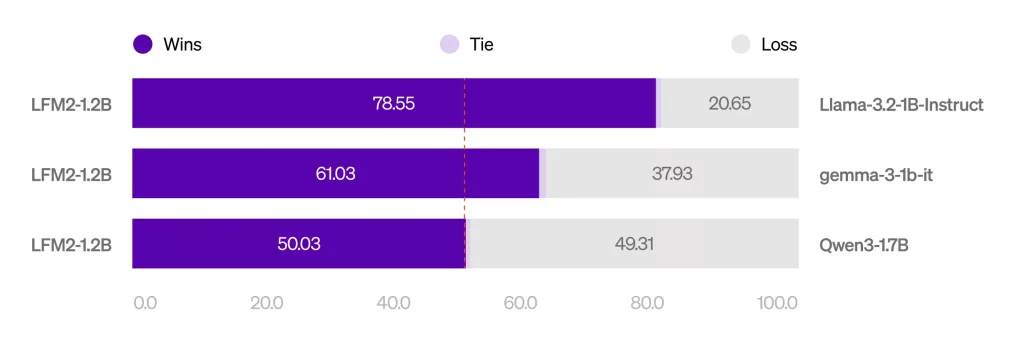

Multi-Hardware Optimization: Thanks to its hybrid architecture, LFM2 delivers about 2x faster decode and prefill performance than Qwen3 and Gemma 3 on CPU, and the same codebase runs efficiently on GPU and NPU hardware.
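Speed claims like this are usually reported as throughput in tokens per second, measured separately for prefill (processing the prompt in one pass) and decode (generating tokens one at a time). A minimal timing sketch follows; `run` stands for a hypothetical callable wrapping whichever runtime you benchmark, and the helper names are illustrative.

```python
import time
from typing import Callable


def best_tokens_per_second(run: Callable[[], int], repeats: int = 3) -> float:
    """Call `run` several times and return the best observed throughput.

    `run` is a hypothetical hook into your inference runtime: it should
    perform one prefill (or decode) pass and return how many tokens it handled.
    """
    best = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        n_tokens = run()
        elapsed = time.perf_counter() - start
        if elapsed > 0:
            best = max(best, n_tokens / elapsed)
    return best


def speedup(candidate_tps: float, baseline_tps: float) -> float:
    """Throughput ratio: 2.0 means the candidate is 2x as fast."""
    return candidate_tps / baseline_tps


def percent_faster(ratio: float) -> float:
    """Convert a speedup ratio to 'percent faster': 2x -> 100%, 3x -> 200%."""
    return (ratio - 1.0) * 100.0
```

Note the conversion when reading benchmarks: a 2x speedup is 100% faster, while "200% faster" would mean 3x.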

Agent-Optimized Design: LFM2 also significantly outperforms other models in its size class on instruction following and function calling, the core capabilities that make LLMs reliable for building AI agents.

Input-Dependent Gating: LFM2’s gated convolution blocks weight each token’s features with input-dependent gates, and 10 of its 16 blocks use short convolutions instead of attention; together these keep compute, memory, and energy consumption low.

Hybrid Architecture in LFM2

The core innovation in LFM2 is its hybrid architecture, which builds on Liquid AI’s earlier research into Liquid Time-constant Networks (LTCs) and departs fundamentally from conventional transformer-based models:

16-Block Hybrid Structure:

- 10 Double-Gated Short-Range LIV Convolution Blocks: Handle local pattern recognition with adaptive multiplicative gates

- 6 Grouped Query Attention (GQA) Blocks: Manage longer-range dependencies and context understanding

Instead of relying on attention in every layer as standard Transformer-based models do, LFM2 uses structured, input-adaptive operators for most of its blocks. This improves training efficiency and inference speed, especially in long-context and resource-constrained scenarios.
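One practical consequence of this split is memory use during long generations. The sketch below compares per-sequence cache size for the 10-convolution/6-attention layout against a stack of 16 attention blocks. The block order and every dimension (8 key/value heads, head dimension 64, hidden size 2,048, kernel size 3, 16-bit values) are hypothetical placeholders, not LFM2's published configuration.

```python
from collections import Counter
from typing import List

# Hypothetical block order: the article states only the counts
# (10 LIV convolution blocks, 6 GQA blocks), not how they are interleaved.
LAYER_TYPES: List[str] = [
    "conv", "conv", "attn", "conv", "conv", "attn", "conv", "conv",
    "attn", "conv", "attn", "conv", "attn", "conv", "attn", "conv",
]


def inference_cache_bytes(layer_types: List[str], seq_len: int,
                          n_kv_heads: int = 8, head_dim: int = 64,
                          hidden: int = 2048, kernel_size: int = 3,
                          bytes_per_value: int = 2) -> int:
    """Per-sequence cache memory during generation (hypothetical dimensions).

    Attention blocks keep keys and values for every past token, so their cache
    grows with seq_len. A short causal convolution only needs the last
    kernel_size - 1 inputs, so its state is constant-size.
    """
    total = 0
    for kind in layer_types:
        if kind == "attn":
            total += 2 * n_kv_heads * head_dim * bytes_per_value * seq_len
        elif kind == "conv":
            total += (kernel_size - 1) * hidden * bytes_per_value
        else:
            raise ValueError(f"unknown layer type: {kind}")
    return total


if __name__ == "__main__":
    print(Counter(LAYER_TYPES))
    print(inference_cache_bytes(LAYER_TYPES, 4096))
    print(inference_cache_bytes(["attn"] * 16, 4096))
```

With these placeholder values and 4,096 tokens of context, the hybrid layout needs about 50.4 MB of cache versus about 134.2 MB for 16 attention blocks, because the convolution blocks' state does not grow with context length.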

Conclusion

LFM2 is a major advancement in edge AI, offering strong performance, fast inference, and broad hardware support. Because it runs entirely on-device, it meets demands for privacy and low latency, making it a strong foundation for developers building smart devices or AI-powered apps.

How to run LFM2 on Cordatus.ai?

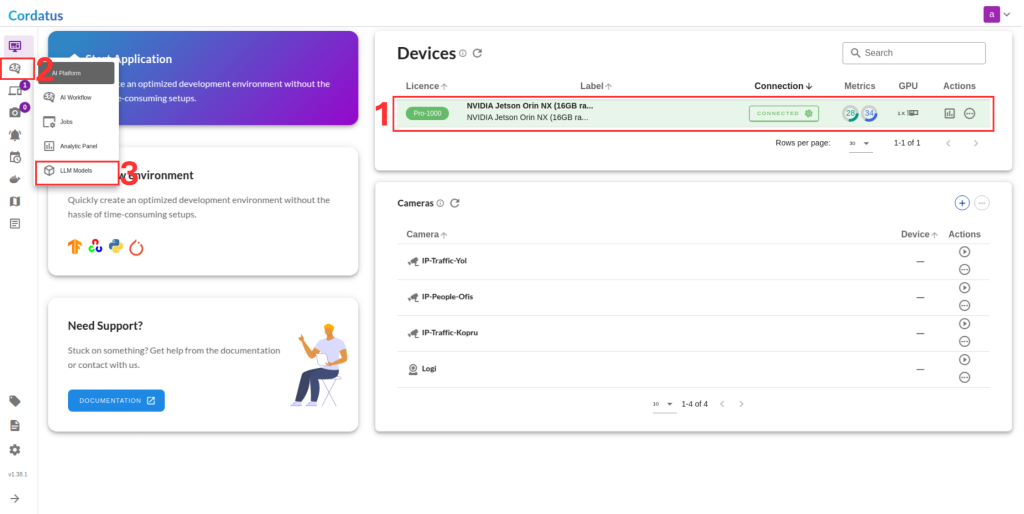

1. Connect to your device and select LLM Models from the sidebar.

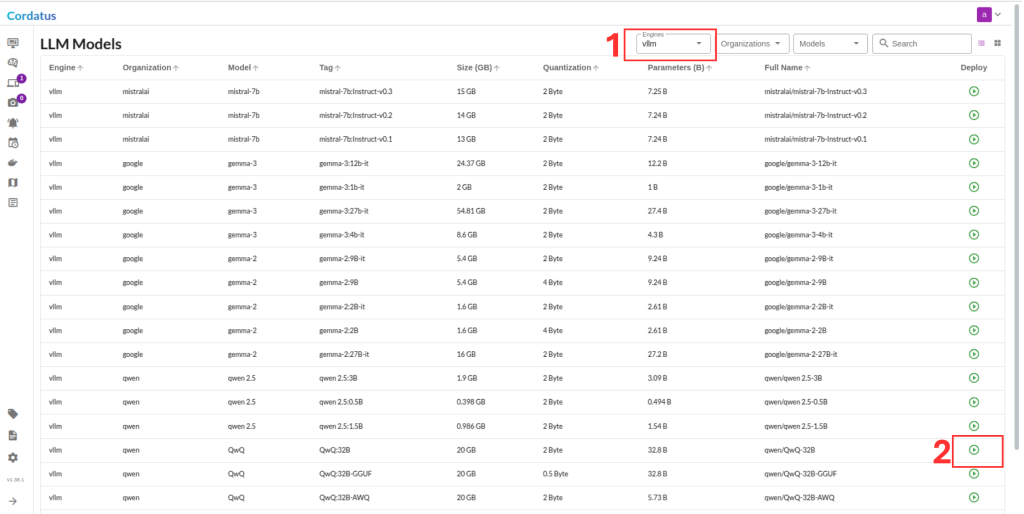

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run icon (Box 2).

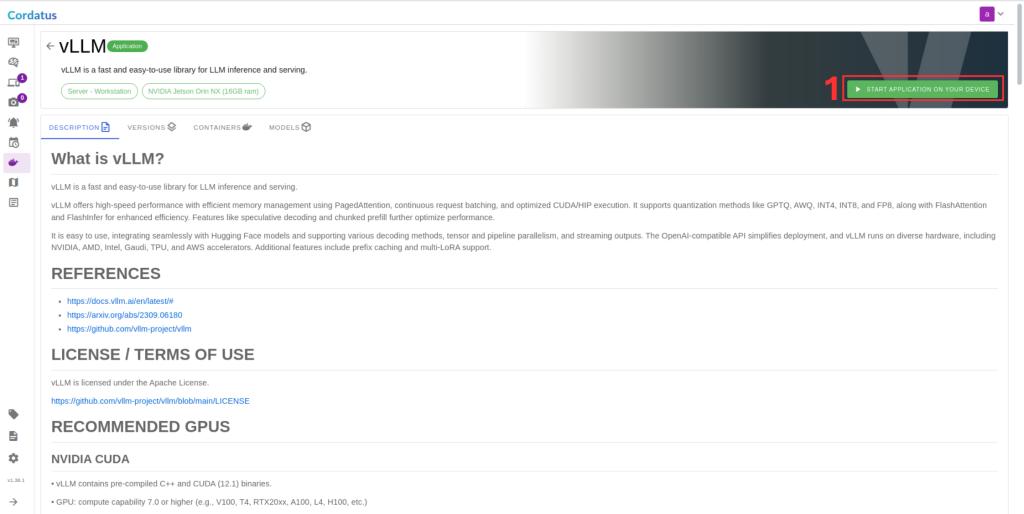

3. Click Run to start the model deployment.

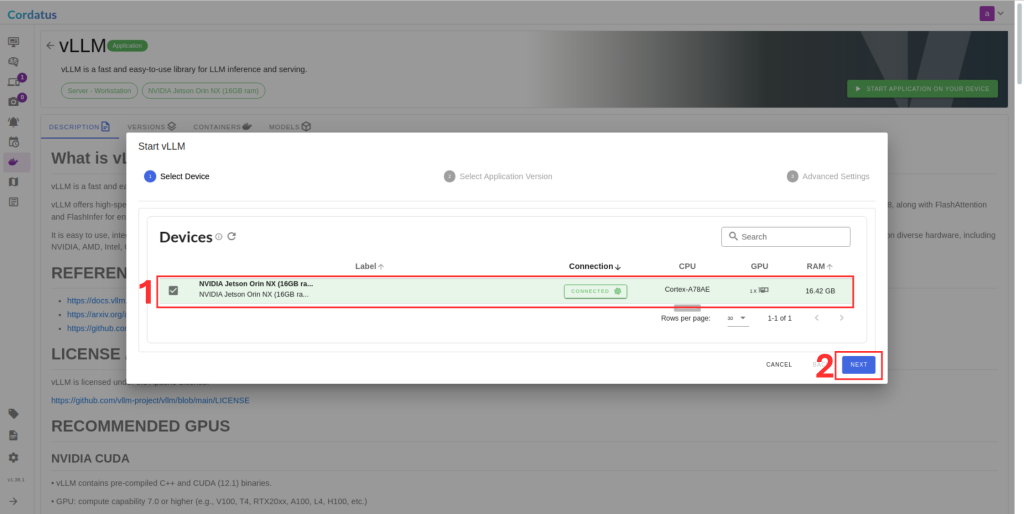

4. Select the target device where the LLM will run.

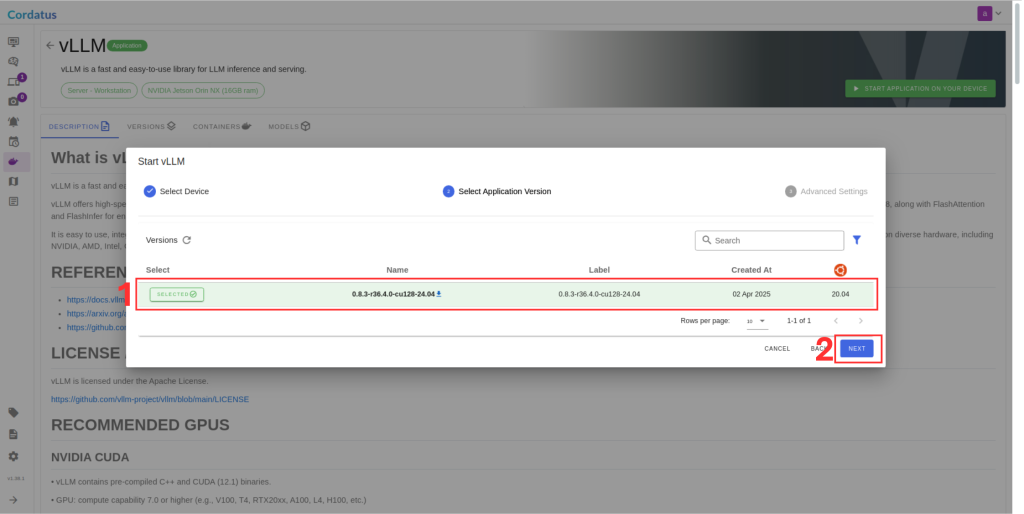

5. Choose the container version (if unsure, select the latest).

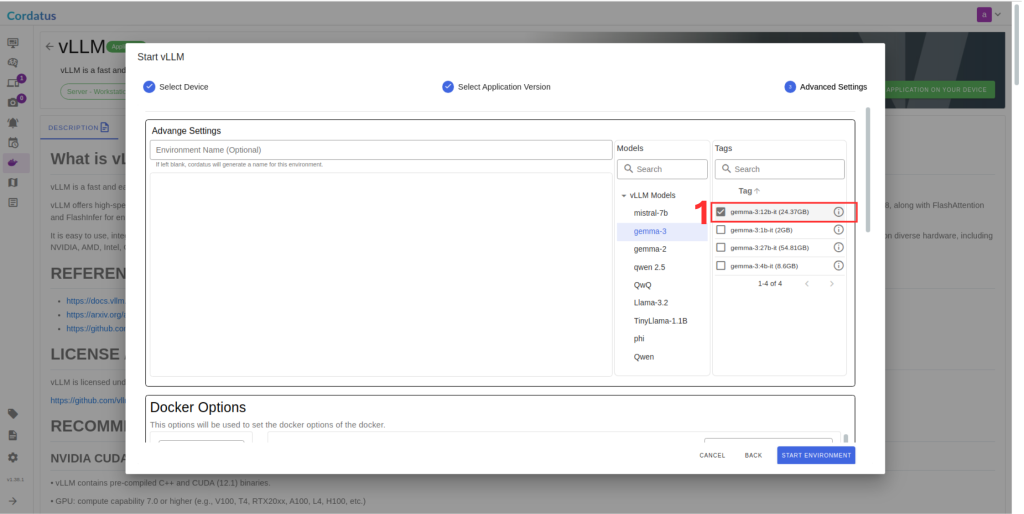

6. Ensure the correct model is selected in Box 1.

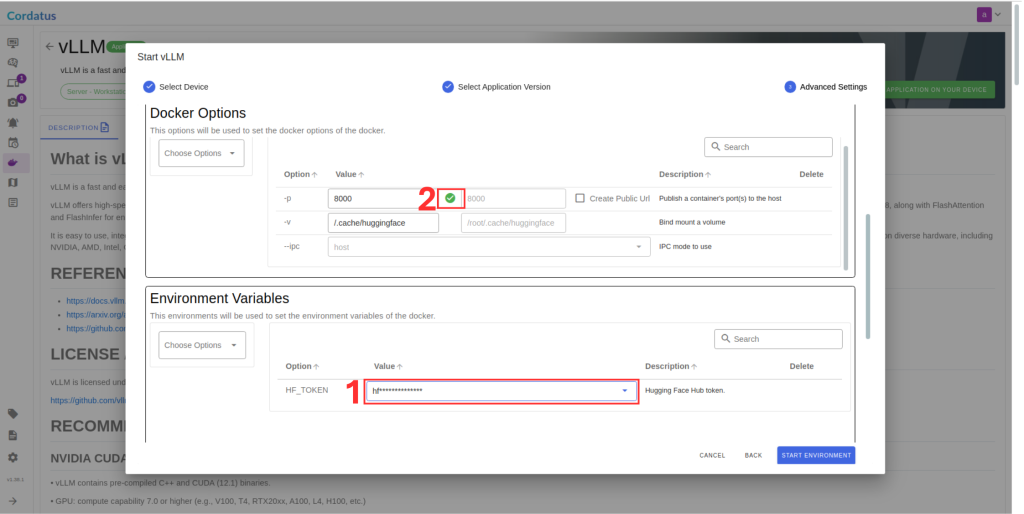

7. Enter your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
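Once the container is up, you can send requests to it from code. The sketch below assumes the container exposes vLLM's standard OpenAI-compatible HTTP server (`/v1/models` and `/v1/chat/completions`); `BASE_URL` is a placeholder you should replace with your device's address and the port Cordatus assigned, and the helper names are illustrative. It uses only the Python standard library.

```python
import json
import urllib.request

# Placeholder: replace with your device's address and the port Cordatus assigned.
BASE_URL = "http://localhost:8000"


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_models(base_url: str) -> list:
    """Return the model IDs the server reports at /v1/models."""
    with urllib.request.urlopen(f"{base_url.rstrip('/')}/v1/models", timeout=10) as resp:
        return [m["id"] for m in json.load(resp)["data"]]


def chat(base_url: str, model: str, prompt: str) -> str:
    """Send one user message and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(base_url, model, prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `chat(BASE_URL, list_models(BASE_URL)[0], "Hello!")` picks the first model the server reports and returns the assistant's reply.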