MedGemma: Google’s Revolutionary AI Model for Medical Text and Image Analysis

What is MedGemma?

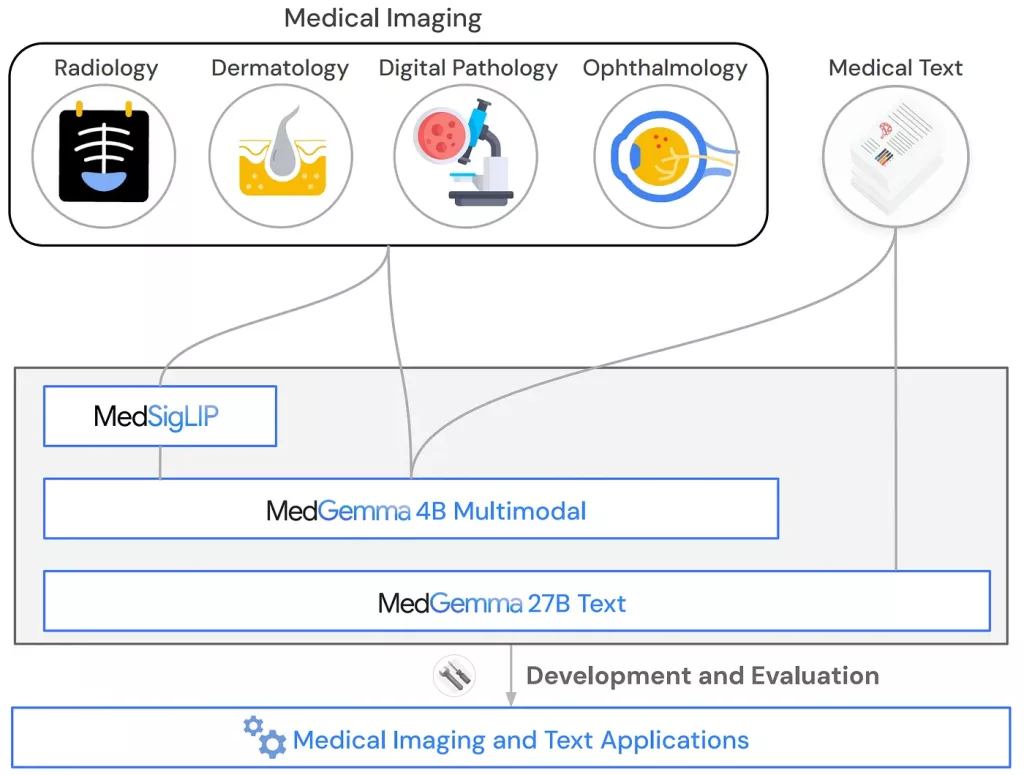

MedGemma is a collection of specialized AI models developed by Google DeepMind, designed specifically for medical text and image analysis. Built on the powerful Gemma 3 architecture, it comes in two variants that cater to different medical AI needs.

The MedGemma 4B multimodal model processes both medical images and text using 4 billion parameters. It includes a SigLIP image encoder that’s been pre-trained on diverse medical images including chest X-rays, dermatology images, ophthalmology scans, and histopathology slides.

The MedGemma 27B text-only model focuses exclusively on medical text comprehension with 27 billion parameters. This larger model excels at clinical reasoning, medical question answering, and complex medical text analysis tasks.

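The 4B model is available both as a pre-trained version, suited to experimentation and further fine-tuning, and as an instruction-tuned version optimized for practical applications; the 27B text model is released as an instruction-tuned version.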

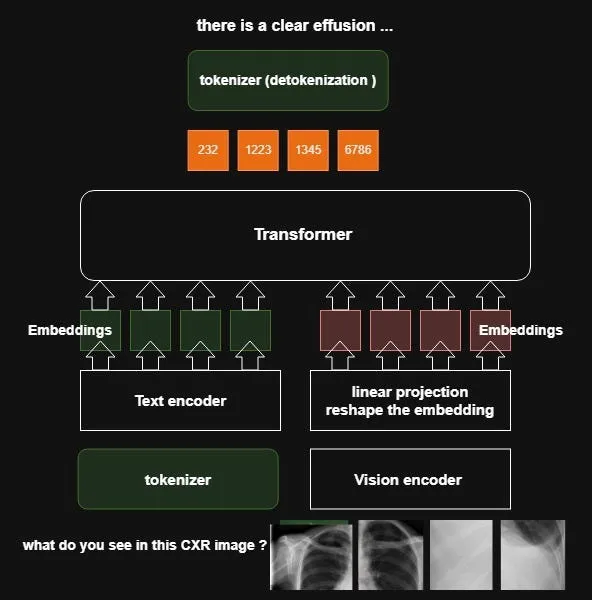

How Does MedGemma Work?

MedGemma uses the decoder-only transformer architecture of Gemma 3, adapted to medical contexts through additional training. The multimodal 4B model pairs this text backbone with visual understanding through its specialized image encoder.

When processing medical images, MedGemma first resizes each image to a fixed 896×896 resolution and encodes it into 256 tokens. The language model then processes these visual tokens together with the text input to generate its response.
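The fixed 256-token figure comes from splitting the image into patches and then pooling the patch grid. The sketch below assumes a Gemma 3-style SigLIP encoder with 14×14-pixel patches and 4×4 average pooling of the patch grid; the patch size and pooling factor are assumptions based on the Gemma 3 design, not values stated in this article.

```python
def visual_token_count(image_size: int = 896, patch_size: int = 14, pool: int = 4) -> int:
    """Visual tokens for a square image (assumed Gemma 3-style encoder).

    Assumption: the image is cut into (image_size // patch_size)^2 patches,
    and the patch grid is average-pooled by `pool` along each side.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size   # 896 // 14 = 64
    if patches_per_side % pool != 0:
        raise ValueError("patch grid must be divisible by the pooling factor")
    tokens_per_side = patches_per_side // pool    # 64 // 4 = 16
    return tokens_per_side * tokens_per_side      # 16 * 16 = 256


print(visual_token_count())  # 256
```

Because every image is resized to the same resolution, each one costs the same number of context tokens regardless of its original size.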

For text-only tasks, the 27B model draws on its medical training to handle clinical terminology, drug interactions, diagnostic procedures, and treatment protocols. It uses grouped-query attention (GQA) and supports context lengths of at least 128K tokens.
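Grouped-query attention lets several query heads share a single key/value head, which shrinks the key/value cache a model has to keep for long contexts. The NumPy sketch below is a generic, single-sequence illustration of causal GQA; the head counts and dimensions in the usage lines are arbitrary and are not MedGemma's actual configuration.

```python
import numpy as np


def grouped_query_attention(q: np.ndarray, keys: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Causal grouped-query attention for one sequence.

    q:            (n_q_heads, seq_len, head_dim)
    keys, values: (n_kv_heads, seq_len, head_dim), n_q_heads a multiple of n_kv_heads
    """
    n_q, seq_len, head_dim = q.shape
    n_kv = keys.shape[0]
    if n_q % n_kv != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group = n_q // n_kv
    # Each key/value head is shared by `group` consecutive query heads.
    keys = np.repeat(keys, group, axis=0)
    values = np.repeat(values, group, axis=0)
    scores = q @ keys.transpose(0, 2, 1) / np.sqrt(head_dim)
    # Causal mask: position i may only attend to positions <= i.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Numerically stable softmax over the key axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ values


rng = np.random.default_rng(0)
q = rng.standard_normal((8, 5, 16))        # 8 query heads
keys = rng.standard_normal((2, 5, 16))     # shared by groups of 4 query heads
values = rng.standard_normal((2, 5, 16))
out = grouped_query_attention(q, keys, values)
print(out.shape)  # (8, 5, 16)
```

With 8 query heads and 2 key/value heads, the cache holds a quarter of the keys and values that standard multi-head attention would need.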

Both models employ test-time scaling techniques, which spend extra computation at inference time to improve performance on complex medical reasoning tasks.
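Test-time scaling covers several techniques. One common, generic example is self-consistency: sample the model several times and keep the most frequent final answer. The sketch below uses a hypothetical `sample_answer` callable as a stand-in for a real model call; it illustrates the general idea and is not a description of MedGemma's internal method.

```python
from collections import Counter
from typing import Callable


def self_consistency(sample_answer: Callable[[int], str], n_samples: int = 5) -> str:
    """Return the majority answer over several independent samples.

    `sample_answer` is a hypothetical stand-in for one sampled model call;
    it receives the sample index so the example stays deterministic.
    """
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    # Normalize answers so "B" and "b" count as the same vote.
    answers = [sample_answer(i).strip().lower() for i in range(n_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical sampled answers to a multiple-choice question.
fake_outputs = ["B", "C", "B", "b", "A"]
print(self_consistency(lambda i: fake_outputs[i]))  # b
```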

Customizing MedGemma

Developers can adapt MedGemma in several ways: teach it new tasks by prompting with examples, fine-tune it on their own medical data, or connect it to other medical tools and databases as part of a larger system. Google provides guides and examples to help with these customizations.
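Teaching the model a new task with examples usually means few-shot prompting: worked input/output pairs are placed in the conversation ahead of the real query. This sketch builds a message list in the common chat `role`/`content` format; the task, instruction, and example notes are hypothetical placeholders.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> List[Dict[str, str]]:
    """Build a chat message list with worked examples before the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, model_text in examples:
        # Each example is a prior user turn followed by the ideal model reply.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": model_text})
    messages.append({"role": "user", "content": query})
    return messages


# Hypothetical task: extract the medication name from a short clinical note.
examples = [
    ("Note: Patient started on metformin 500 mg twice daily.", "metformin"),
    ("Note: Continue lisinopril 10 mg once daily.", "lisinopril"),
]
messages = build_few_shot_messages(
    "Extract the medication name from the clinical note. Reply with the name only.",
    examples,
    "Note: Atorvastatin 20 mg at bedtime was added.",
)
print(len(messages))  # 6
```

The resulting list can be sent as the `messages` field of a chat request; how a system turn is rendered depends on the model's chat template.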

Key Advantages Over Generic AI Models:

- Purpose-Built: MedGemma is trained specifically for medical use on top of Gemma 3, rather than being a general model simply prompted into a healthcare role, which gives it a better grasp of medical language and reasoning.

- Specialized Training: Further trained on medical text and imagery, MedGemma is designed to deliver more accurate medical insights with fewer hallucinations than broadly trained models.

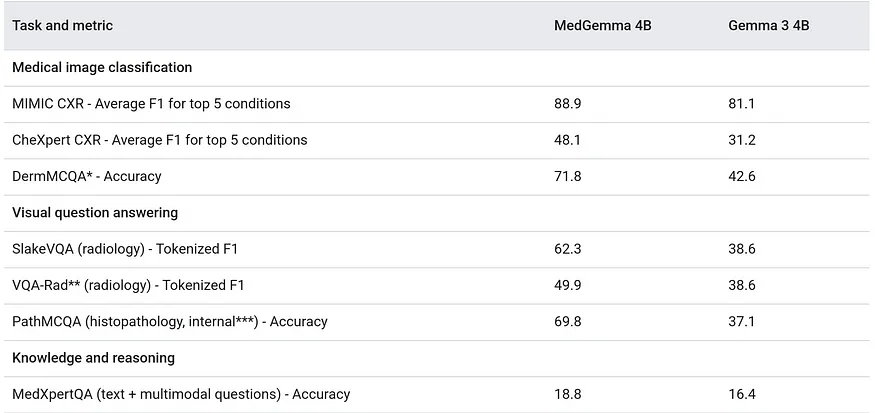

- Superior Performance: Outperforms the base Gemma 3 models on the medical benchmarks Google reports.

- Multimodal Capabilities: Processes both text and images in medical contexts.

- Efficient and Scalable: Runs on modest hardware (4B) or scales up to complex reasoning (27B), whereas large generic models demand heavy resources without a medical focus.

- Open Access: Available via Hugging Face and Google Cloud with no vendor lock-in, MedGemma offers flexibility, transparency, and easy integration.
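The hardware gap between the two variants can be estimated as parameter count times bytes per parameter. This back-of-the-envelope sketch counts model weights only (it ignores the KV cache, activations, and runtime overhead) and uses the nominal 4B and 27B sizes; the byte widths are the standard ones for each precision.

```python
# Standard storage width per parameter for common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "bf16") -> float:
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts; the real checkpoints differ slightly.
for name, params in [("MedGemma 4B", 4e9), ("MedGemma 27B", 27e9)]:
    sizes = ", ".join(f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"{name} -> {sizes}")
# MedGemma 4B -> bf16: 8.0 GB, int8: 4.0 GB, int4: 2.0 GB
# MedGemma 27B -> bf16: 54.0 GB, int8: 27.0 GB, int4: 13.5 GB
```

In bf16 the 4B model's weights fit on a single consumer GPU, while the 27B model typically needs a large data-center GPU or quantization.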

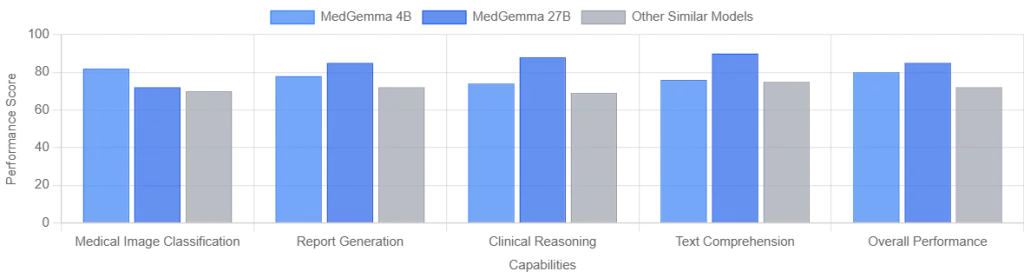

Performance and Results

MedGemma clearly outperforms its general-purpose base model on medical tasks. On a medical image classification benchmark reported by Google, MedGemma 4B scores 88.9 versus 81.1 for the base Gemma 3 4B model. This 7.8-point improvement makes a real difference in healthcare applications.

The same pattern holds for text-based medical tasks: MedGemma outperforms its general-purpose counterparts on medical question answering, clinical note understanding, and diagnostic support.

Conclusion

MedGemma is a game-changer for medical AI, offering an openly available, medical-focused model family that understands both images and text. While it needs careful validation and customization before use in hospitals, its strong performance relative to general-purpose models makes it a compelling foundation for serious healthcare applications.

For healthcare organizations ready to embrace AI, MedGemma provides the specialized foundation needed to build solutions that could genuinely improve patient care and make medical work more efficient.

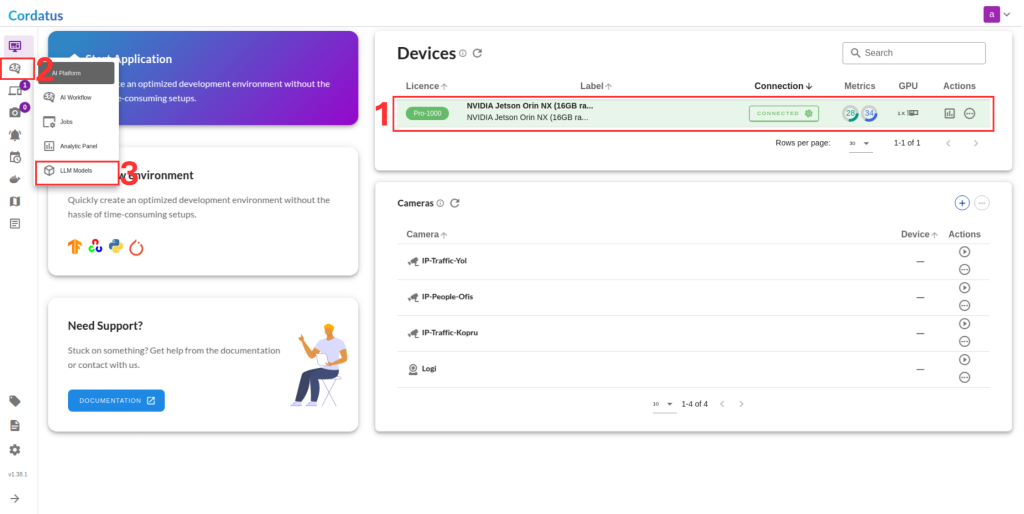

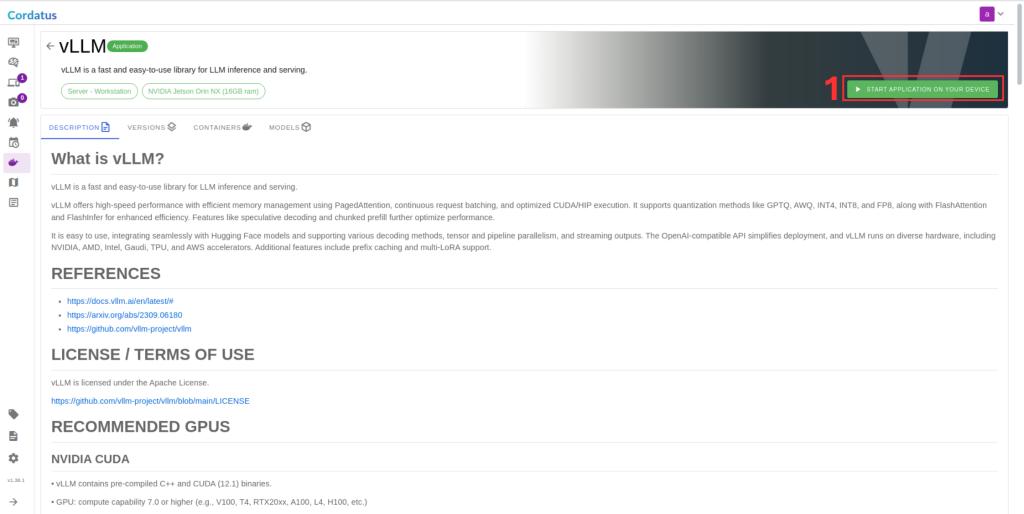

How to Run MedGemma on Cordatus.ai?

1. Connect to your device and select LLM Models from the sidebar.

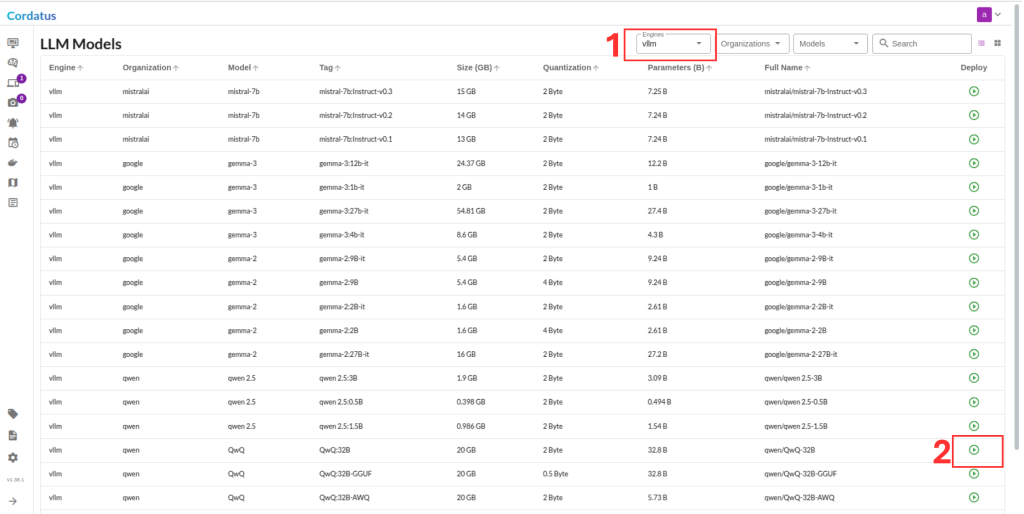

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

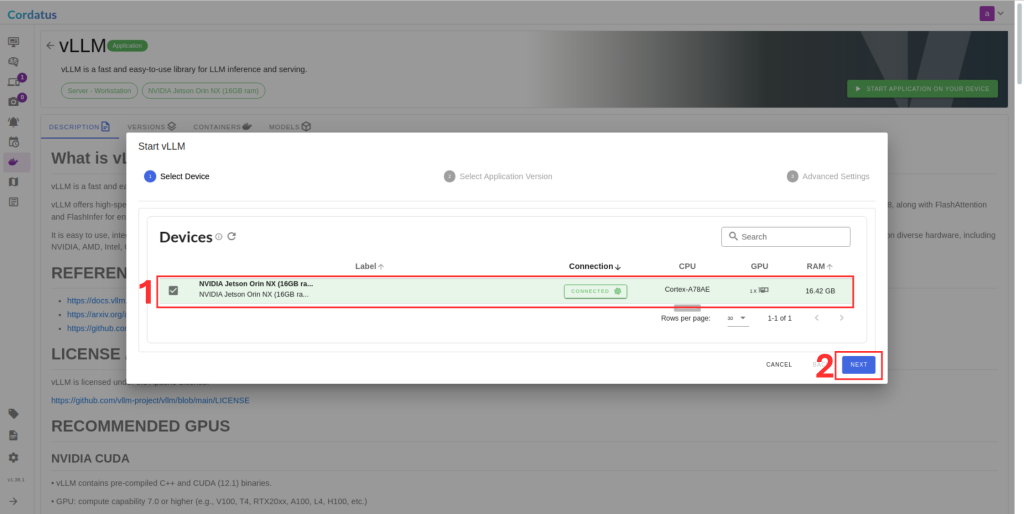

3. Click Run to start the model deployment.

4. Select the target device where the LLM will run.

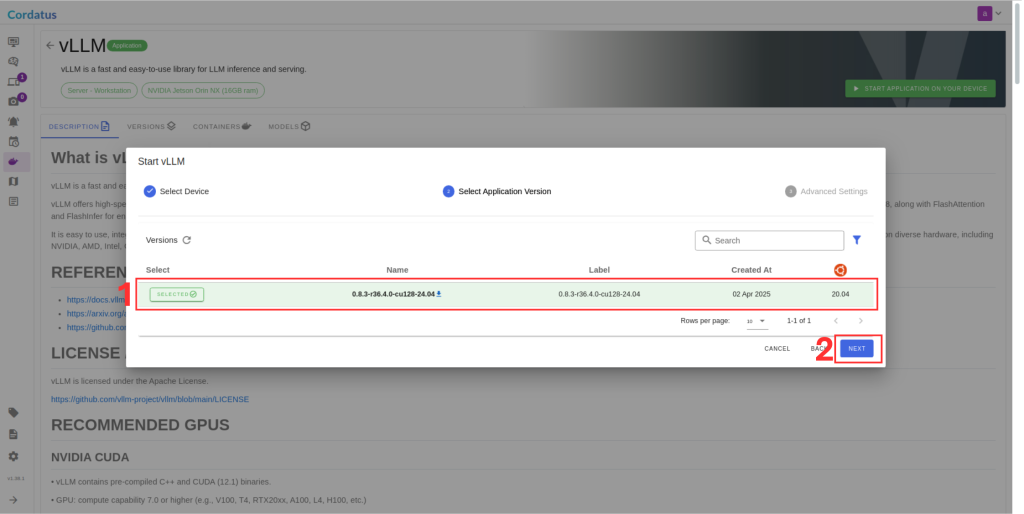

5. Choose the container version (if unsure, select the latest).

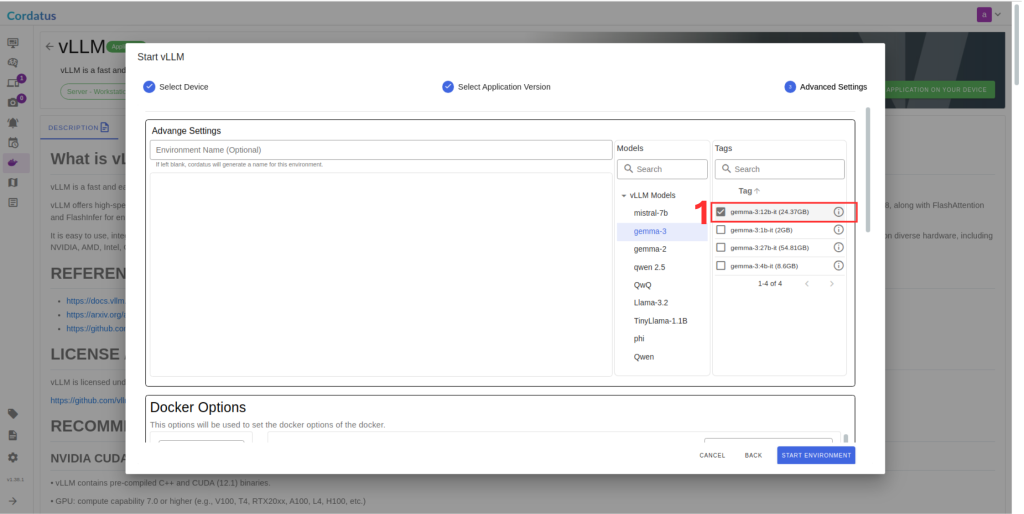

6. Ensure the correct model is selected in Box 1.

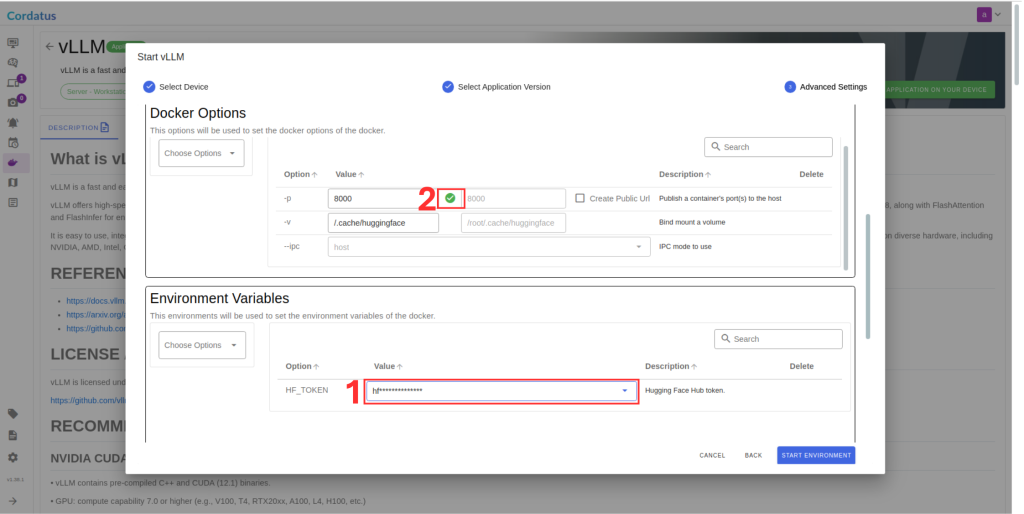

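7. Set your Hugging Face token in Box 1 if the model requires one. MedGemma models are gated on Hugging Face, so the token must belong to an account that has accepted the model's terms of use.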

8. Click Save Environment to apply the settings.

Once these steps are complete, the model starts automatically and you can access it through the assigned port.
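vLLM serves models through an OpenAI-compatible HTTP API, so the running deployment can be queried with a plain POST to `/v1/chat/completions`. The sketch below uses only the Python standard library. The host, the port (8000 is vLLM's default, but Cordatus may assign another), and the model id `google/medgemma-4b-it` are assumptions: use the port Cordatus shows and the exact model name the server lists at `/v1/models`. Images are sent as base64 data URLs in the OpenAI `image_url` message format, which vLLM accepts for multimodal models.

```python
import base64
import json
import urllib.request
from typing import Optional

# Assumptions: replace with the host/port Cordatus assigned and the served model name.
BASE_URL = "http://localhost:8000/v1"
MODEL = "google/medgemma-4b-it"


def build_chat_payload(prompt: str, image_bytes: Optional[bytes] = None,
                       mime_type: str = "image/png", max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat completion request body, optionally with one image."""
    content = [{"type": "text", "text": prompt}]
    if image_bytes is not None:
        # Inline the image as a base64 data URL in the OpenAI vision message format.
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append({
            "type": "image_url",
            "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
        })
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


def send_chat(payload: dict, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """POST the payload to the vLLM server and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(send_chat(build_chat_payload("List three common findings on a chest X-ray.")))` prints the model's reply; pass the raw bytes of an image file as `image_bytes` to ask about an image.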