Microsoft Phi-4: The Compact AI Model Revolutionizing Mathematical Reasoning

What is Microsoft Phi-4?

Microsoft Phi-4 is a state-of-the-art small language model (SLM) with 14 billion parameters, designed for high-quality reasoning at a fraction of the computational cost of traditional large language models. Despite its size, it outperforms much larger models on mathematical reasoning.

Phi-4’s results come from how it is trained rather than from scale: it relies on synthetic training data and advanced optimization techniques to deliver performance comparable to far larger models on reasoning tasks while using significantly fewer computational resources. This makes advanced AI capabilities more accessible and cost-effective for developers and organizations.

How Does Phi-4 Work?

Phi-4’s performance stems from three main approaches:

Synthetic Data-Driven Training: Uses carefully crafted synthetic datasets emphasizing reasoning and problem-solving through multi-agent prompting, self-revision workflows, and instruction reversal techniques.

Quality Over Quantity Approach: Meticulously curated organic data from academic papers, educational forums, and programming tutorials, filtered using specialized classifiers.

Advanced Post-Training Techniques:

- Supervised Fine-Tuning (SFT) on curated datasets

- Direct Preference Optimization (DPO) to align the model with human preferences

- Pivotal Token Search, which identifies critical decision points in reasoning chains
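To make the DPO step more concrete, here is a minimal sketch of the standard per-example DPO loss. It assumes you already have the summed log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response under both the model being trained and a frozen reference model. The default `beta = 0.1` is an illustrative value, not one taken from Phi-4’s training setup, and the code shows the general DPO objective rather than Microsoft’s training code.

```python
import math

def dpo_loss(policy_logp_chosen: float, policy_logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for a single (prompt, chosen, rejected) example.

    Each log-probability is the summed token log-probability of a full
    response, under the policy being trained or the frozen reference model.
    beta = 0.1 is an illustrative assumption, not Phi-4's setting.
    """
    # Margin of implicit rewards: how much more the policy (relative to the
    # reference model) favors the chosen response over the rejected one.
    margin = beta * ((policy_logp_chosen - ref_logp_chosen)
                     - (policy_logp_rejected - ref_logp_rejected))
    # Loss is -log(sigmoid(margin)) = softplus(-margin), computed stably.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Policy identical to the reference: margin 0, loss log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Policy shifted toward the chosen answer: margin 0.2, loss drops to ~0.598.
print(dpo_loss(-9.0, -13.0, -10.0, -12.0))
```

Minimizing this loss pushes the model to raise the likelihood of chosen responses relative to rejected ones, while measuring that shift against the reference model keeps it from drifting too far from where it started.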

Benefits and Use Cases

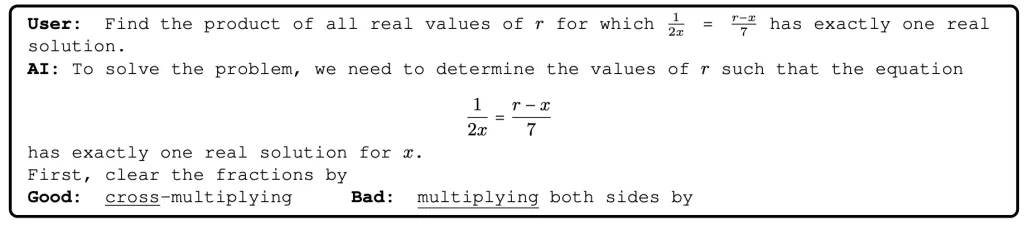

Mathematical Excellence: Phi-4 outperforms much larger models on mathematical reasoning. On the AMC math competition, it averaged 91.8 points, higher than both Gemini Pro 1.5 and Llama-3.3-70B. It reached 80.4% accuracy on the MATH benchmark, surpassing GPT-4o (74.6%), and scored 56.1% on GPQA versus GPT-4o’s 50.6%. Beyond math, it achieved 82.6% accuracy on the HumanEval coding benchmark.

Resource Efficiency: Because it is much smaller than frontier models, Phi-4 costs less to run and responds faster. Its compact size also lowers infrastructure requirements, making it better suited than larger models to edge deployment and other settings where computational resources are limited.
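A quick back-of-the-envelope calculation shows where the efficiency advantage comes from. The sketch below estimates the memory needed just to hold model weights at a given numeric precision. It counts weights only (KV cache, activations, and runtime overhead add more), uses the round 14B figure from the text, and the 4-bit setting is an illustrative quantization level, not a statement about how Phi-4 is distributed.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold model weights only, in GB (10**9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9

# Parameter counts: 14B for Phi-4 (from the text) and 70B for comparison
# with a model such as Llama-3.3-70B.
for name, params in (("Phi-4 (14B)", 14e9), ("70B model", 70e9)):
    for bits in (16, 4):
        print(f"{name}, {bits}-bit weights: ~{weight_memory_gb(params, bits):.0f} GB")
```

At 16-bit precision this gives about 28 GB for Phi-4 versus about 140 GB for a 70B model, which is the difference between fitting on a single high-memory GPU and needing several.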

Applications: The model is well suited to educational technology platforms where mathematical reasoning is crucial, such as automated tutoring systems and STEM learning applications that depend on strong problem-solving. Phi-4 can also serve as a foundation for AI-powered features, such as coding assistance, across a range of software applications.

Key Questions & Answers

How does Phi-4 compare to larger models?

Phi-4 often matches or exceeds larger models on reasoning tasks, particularly mathematics and logic. However, its broad general knowledge and factual recall are weaker than those of larger models, and it was deliberately trained to decline questions outside its knowledge rather than guess at an answer.

What makes Phi-4’s training unique?

Approximately 40% of its training tokens come from synthetic data, rather than the web-scraped content that dominates typical training mixes. This synthetic data is produced with sophisticated generation techniques, including multi-agent prompting and instruction reversal. During post-training, the novel Pivotal Token Search method reinforces the critical decision points in reasoning chains.
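The idea behind Pivotal Token Search can be illustrated with a simplified sketch: given a reasoning chain, find the individual tokens at which the estimated probability of eventually reaching a correct answer jumps sharply. Here `p_success` is a hypothetical callable standing in for that estimate (in practice it would come from sampling many completions from the model), the 0.2 threshold is an arbitrary illustrative value, and the recursive bisection is an assumed search strategy, not Microsoft’s implementation.

```python
from typing import Callable, List, Sequence, Tuple

def find_pivotal_tokens(
    tokens: Sequence[str],
    p_success: Callable[[int], float],
    threshold: float = 0.2,
) -> List[Tuple[int, float]]:
    """Return (token index, probability change) for each pivotal token.

    p_success(k) is a hypothetical estimate of the probability that a solution
    continuing from the first k tokens reaches a correct answer.
    """
    pivotal: List[Tuple[int, float]] = []

    def subdivide(start: int, end: int) -> None:
        # Examine the span tokens[start:end] via prefixes of length start and end.
        if end <= start:
            return
        gap = p_success(end) - p_success(start)
        if abs(gap) < threshold:
            return  # no large jump across this span, so skip it
        if end - start == 1:
            pivotal.append((start, gap))  # a single token accounts for the jump
            return
        mid = (start + end) // 2  # otherwise split the span and recurse
        subdivide(start, mid)
        subdivide(mid, end)

    subdivide(0, len(tokens))
    return pivotal

# Toy example: the estimated success probability jumps when token "t2" is emitted.
steps = ["t0", "t1", "t2", "t3"]
probs = [0.25, 0.25, 0.25, 0.75, 0.75]  # p_success for prefix lengths 0..4
print(find_pivotal_tokens(steps, lambda k: probs[k]))  # → [(2, 0.5)]
```

Bisection avoids estimating the success probability at every prefix, which matters when each estimate requires many model samples. The trade-off is that two opposite jumps cancelling out inside one span can be missed.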

Conclusion

Microsoft Phi-4 represents a significant AI milestone, showing that thoughtful design and high-quality training data can deliver exceptional results without massive computational overhead. For developers and organizations seeking advanced reasoning capabilities, Phi-4 offers a compelling combination of performance, efficiency, and accessibility.

The focus on efficiency without sacrificing capability makes it valuable for organizations with limited computational resources or requiring real-time AI responses, paving the way for more sustainable and practical AI deployment.

Ready to explore? Visit Microsoft Azure AI Foundry or Hugging Face to start experimenting with this revolutionary AI approach.

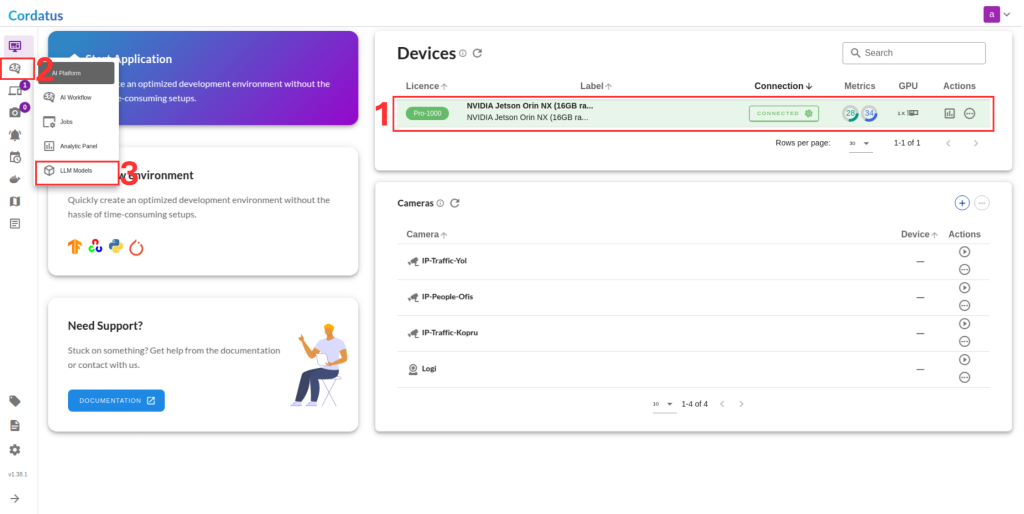

How to Run Phi-4 on Cordatus.ai?

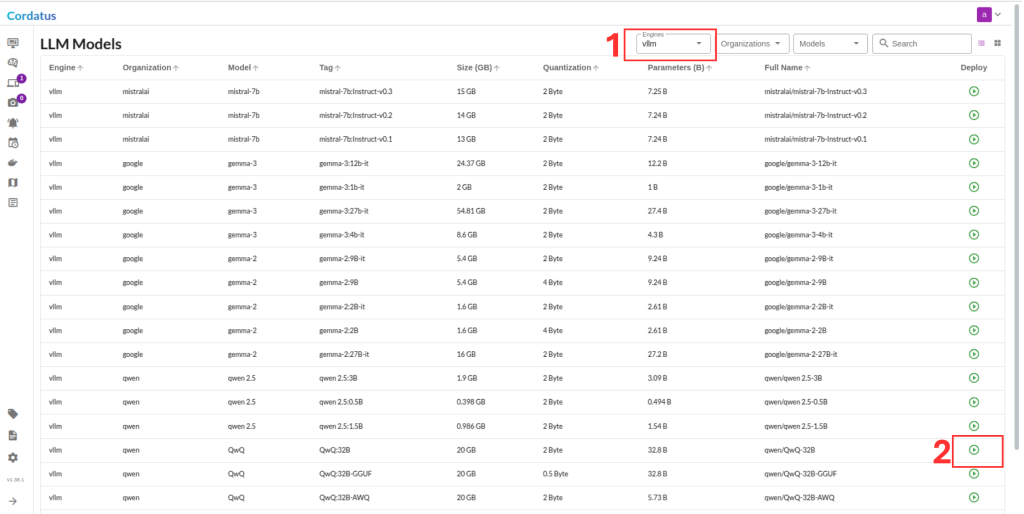

1. Connect to your device and select LLM Models from the sidebar.

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run icon (Box 2).

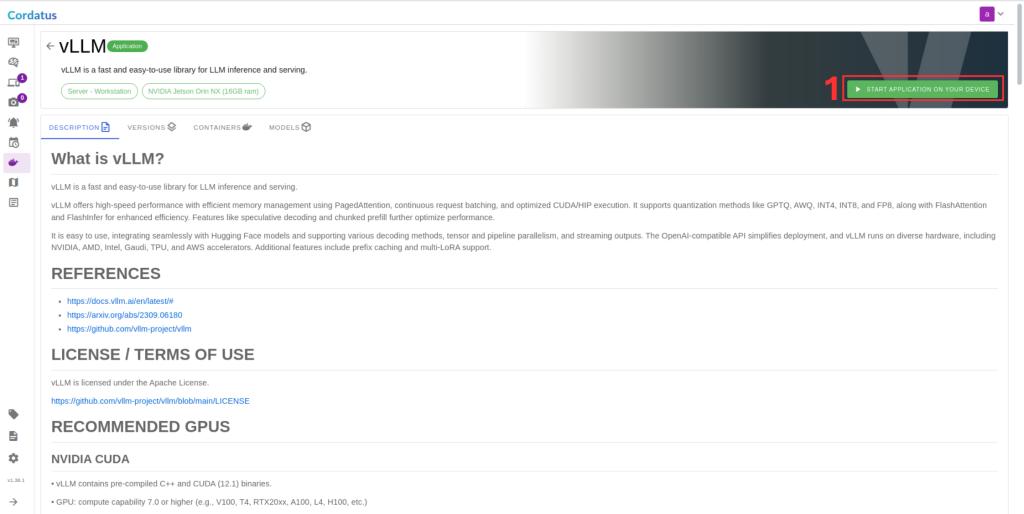

3. Click Run to start the model deployment.

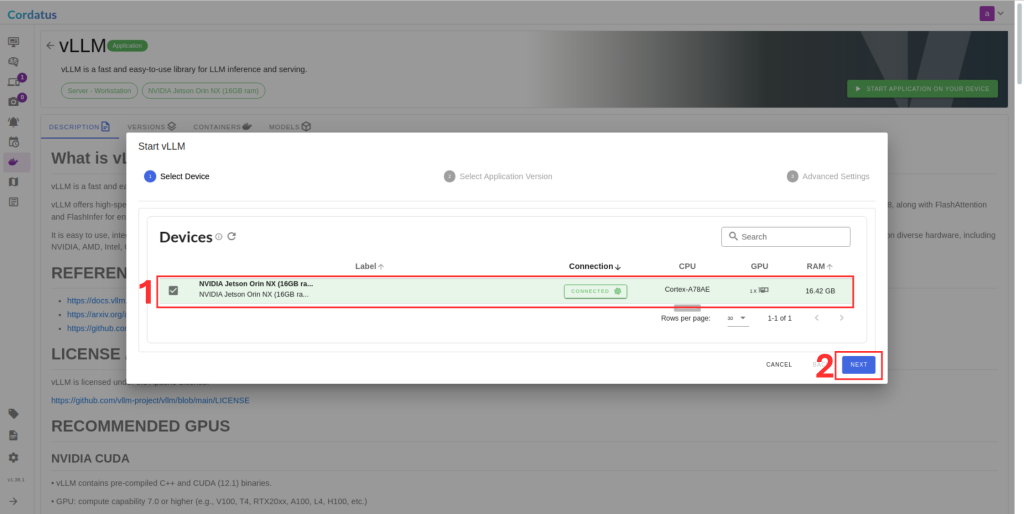

4. Select the target device where the LLM will run.

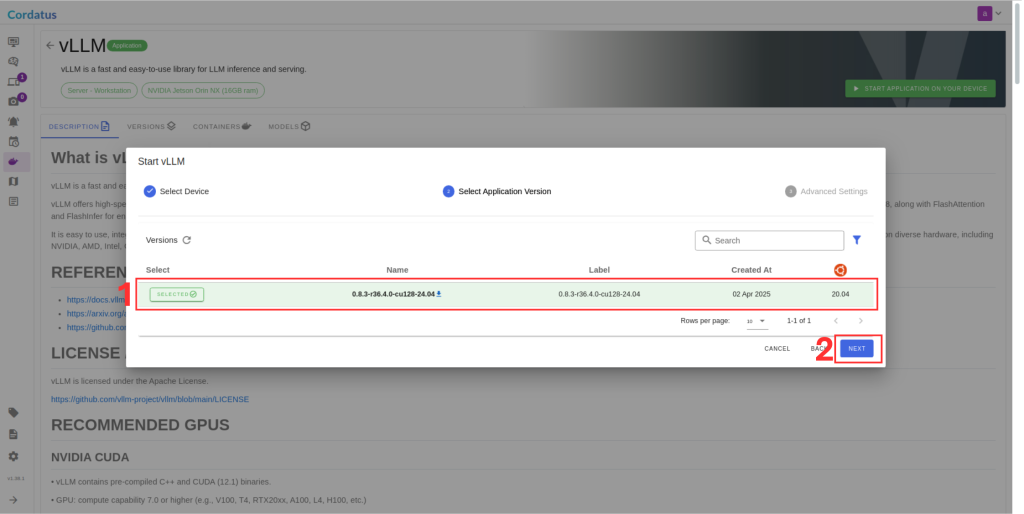

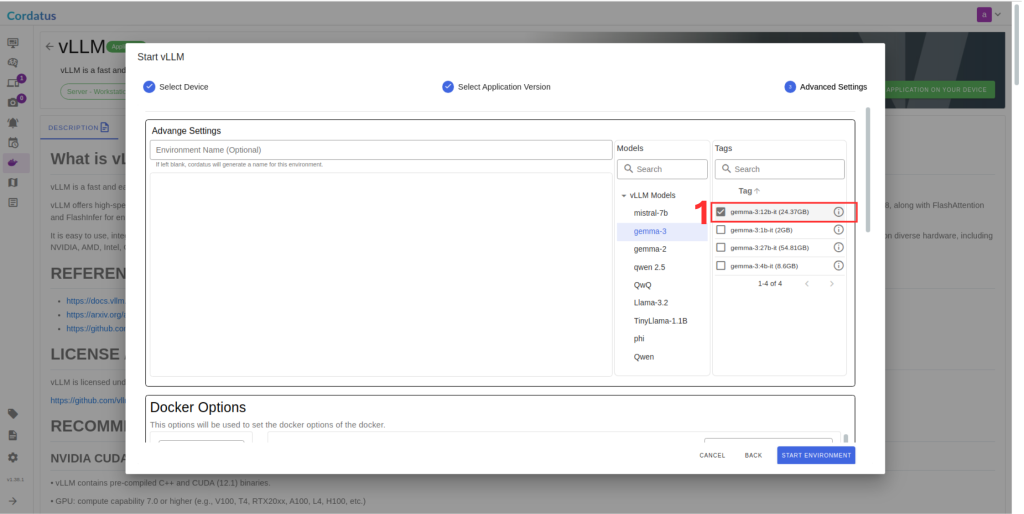

5. Choose the container version (if you are unsure, select the latest).

6. Ensure the correct model is selected in Box 1.

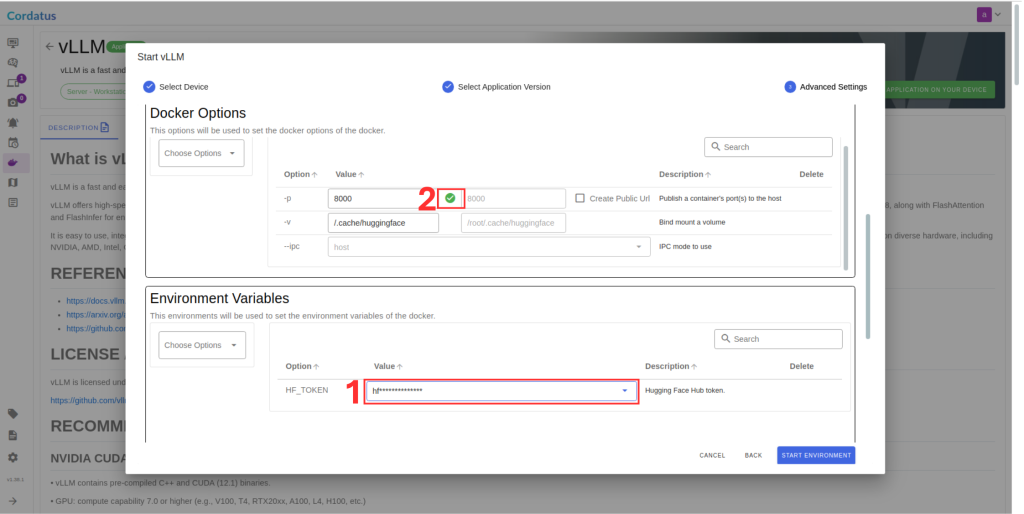

7. Set your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
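Because the model is deployed with vLLM, the assigned port can be queried over HTTP, assuming the deployment runs vLLM’s OpenAI-compatible server. The sketch below uses only Python’s standard library. The address `http://localhost:8000` (vLLM’s default port) and the served model name `microsoft/phi-4` are assumptions: substitute the host and port Cordatus assigned, and check the served name via the server’s `/v1/models` endpoint.

```python
import json
import urllib.request

# Assumptions: replace with your device's address and the port Cordatus assigned,
# and with the model name the server actually reports at /v1/models.
BASE_URL = "http://localhost:8000"  # hypothetical address; 8000 is vLLM's default port
MODEL = "microsoft/phi-4"           # assumed served model name

def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible /v1/chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def extract_reply(response_json: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]

def chat(base_url: str, model: str, prompt: str) -> str:
    """Send one prompt to the running server and return the model's reply."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_reply(json.load(response))

# Example (requires the deployed model to be reachable):
# print(chat(BASE_URL, MODEL, "What is the sum of the first 100 positive integers?"))
```

Keeping request construction and response parsing separate from the network call makes each piece easy to check on its own, and the same request works with any other OpenAI-compatible client library.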