SmolLM3-3B: The Small Language Model That Outperforms Its Class with Hybrid Reasoning

SmolLM3-3B is a 3-billion-parameter open-source language model from Hugging Face that pushes the boundaries of what small models can achieve. It delivers state-of-the-art performance in its size class while supporting dual-mode reasoning, six languages, and long-context processing up to 128K tokens. As a fully open model, it shows that cutting-edge AI capabilities don’t require massive parameter counts, which makes advanced AI accessible to developers with limited resources.

What is SmolLM3-3B?

SmolLM3-3B is a compact yet powerful language model engineered to deliver exceptional performance while maintaining efficiency. Its design philosophy centers on maximizing capabilities within a small parameter footprint, making it ideal for deployment on resource-constrained devices while still competing with much larger models.

Key Specifications:

- Total Parameters: 3 billion

- Architecture: Decoder-only transformer with GQA and NoPE (3:1 ratio)

- Context Length: 64K tokens (extendable to 128K with YaRN)

- Training Data: 11.2 trillion tokens

- License: Apache 2.0 (Fully Open-Source)

- Languages: 6 natively supported (English, French, Spanish, German, Italian, Portuguese)

- Special Features: Hybrid reasoning with extended thinking mode

- Hardware Requirements: Can run on consumer GPUs

How Does SmolLM3-3B Work?

SmolLM3’s exceptional performance comes from its innovative architecture and training methodology that maximizes efficiency without sacrificing capability.

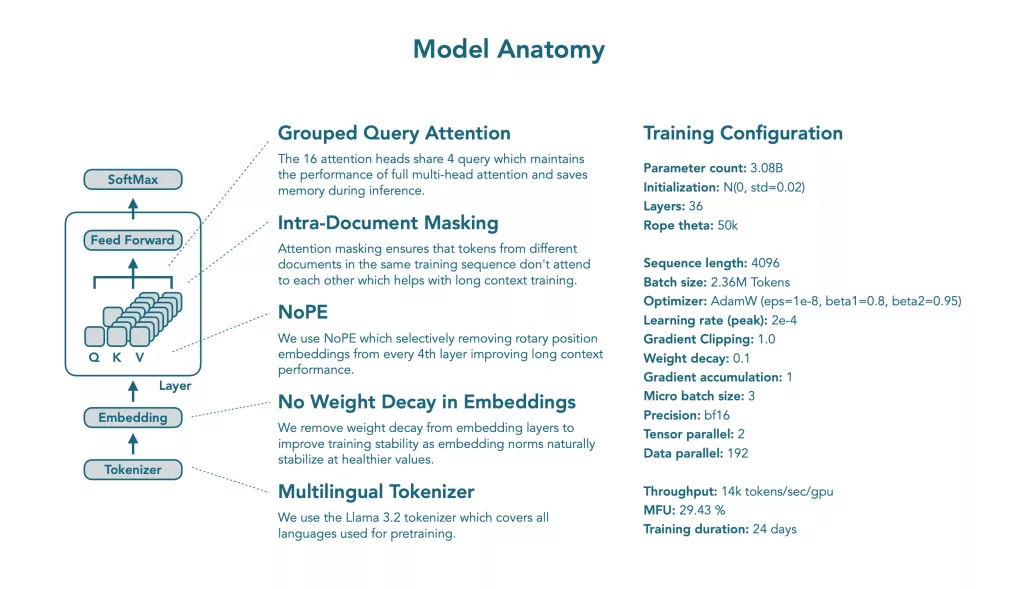

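Advanced Architecture Design: SmolLM3 employs a decoder-only transformer architecture with Grouped Query Attention (GQA) and No Position Encoding (NoPE) layers in a 3:1 ratio, meaning three layers keep rotary position embeddings for every one layer that omits them. GQA shares key and value heads across groups of query heads, which shrinks the key-value cache during inference, while the NoPE layers are intended to help with long-context behavior. Together these choices reduce computational overhead while maintaining the model’s ability to understand and generate complex text patterns.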
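To make the 3:1 ratio concrete, here is a minimal sketch of how such a layer pattern could be laid out. The function name `position_encoding_pattern`, the choice of which layer in each group of four skips rotary embeddings, and the layer count of 8 are all illustrative assumptions, not details taken from the SmolLM3 implementation.

```python
def position_encoding_pattern(num_layers: int, nope_every: int = 4) -> list[str]:
    """Label each layer 'rope' or 'nope'.

    Assumption for illustration: the last layer of every group of
    `nope_every` layers omits rotary position embeddings (NoPE).
    """
    return [
        "nope" if (index + 1) % nope_every == 0 else "rope"
        for index in range(num_layers)
    ]


# num_layers=8 is a small illustrative value, not SmolLM3's actual depth.
pattern = position_encoding_pattern(num_layers=8)
print(pattern)
print(f"RoPE layers: {pattern.count('rope')}, NoPE layers: {pattern.count('nope')}")
```

With eight layers the sketch yields six RoPE layers and two NoPE layers, which is the 3:1 ratio described above.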

Staged Curriculum Training: The model was trained on 11.2 trillion tokens using a carefully designed curriculum that progresses through web content, code, mathematics, and reasoning data. This staged approach ensures the model develops robust capabilities across diverse domains while maintaining efficiency.

Hybrid Reasoning System: SmolLM3 introduces an innovative dual-mode reasoning system. Users can enable “extended thinking” mode where the model generates detailed reasoning traces before providing answers, similar to chain-of-thought prompting but built into the model’s core capabilities. This can be toggled with simple /think and /no_think flags.
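As a rough sketch of how the toggle might be used in practice, the helper below builds a chat message list with the flag placed in the system prompt. The helper name `build_messages` is hypothetical, and placing the flag in the system message is an assumption to check against the model card.

```python
def build_messages(user_prompt: str, thinking: bool) -> list[dict[str, str]]:
    """Build a chat message list that requests or suppresses extended thinking.

    Assumption: the /think or /no_think flag is read from the system prompt.
    """
    flag = "/think" if thinking else "/no_think"
    return [
        {"role": "system", "content": flag},
        {"role": "user", "content": user_prompt},
    ]


# Quick answer without a reasoning trace.
fast = build_messages("What is the capital of France?", thinking=False)

# Step-by-step reasoning for a harder problem.
slow = build_messages("Prove that the sum of two odd numbers is even.", thinking=True)

print(fast[0]["content"], slow[0]["content"])
```

A list in this shape is what chat templates generally expect, for example `tokenizer.apply_chat_template` in the Transformers library, so the same prompt can be sent in either mode by changing one argument.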

Post-Training Optimization: After pretraining, SmolLM3 underwent midtraining on 140 billion reasoning tokens, followed by supervised fine-tuning and alignment via Anchored Preference Optimization (APO). This multi-stage refinement process ensures the model delivers high-quality, aligned outputs.

Benefits and Use Cases

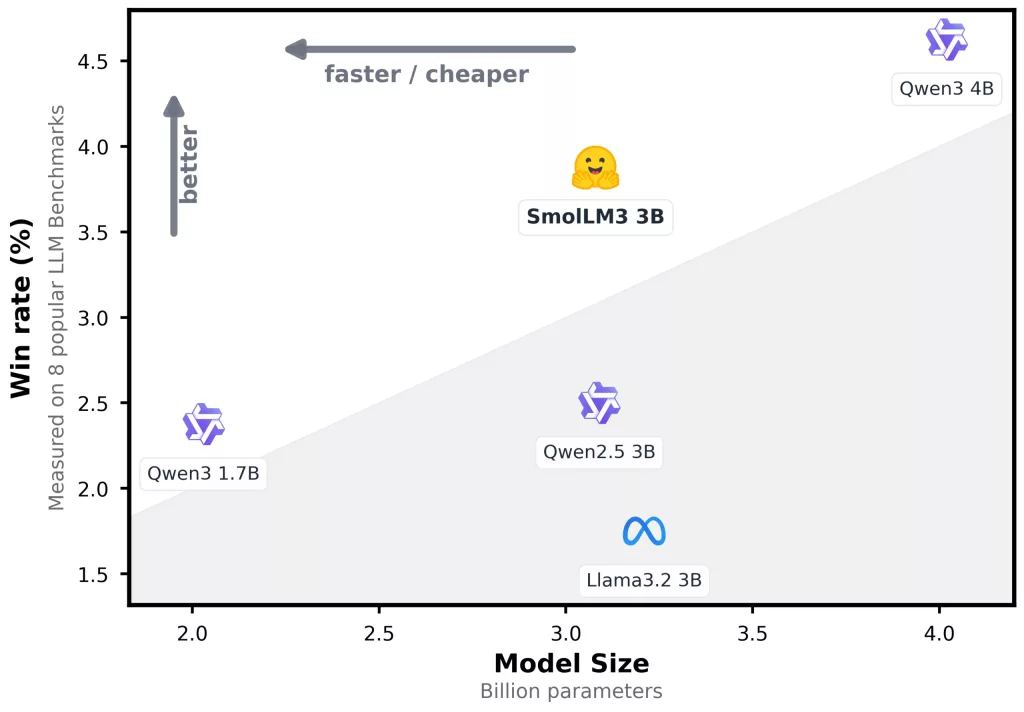

Superior Performance in Its Class: SmolLM3-3B is reported to outperform other models in the 3B parameter range and to remain competitive with 4B models across critical benchmarks, including knowledge tests such as MMLU (Massive Multitask Language Understanding). It also performs well on HumanEval and other programming benchmarks, making it suitable for code generation and debugging tasks.

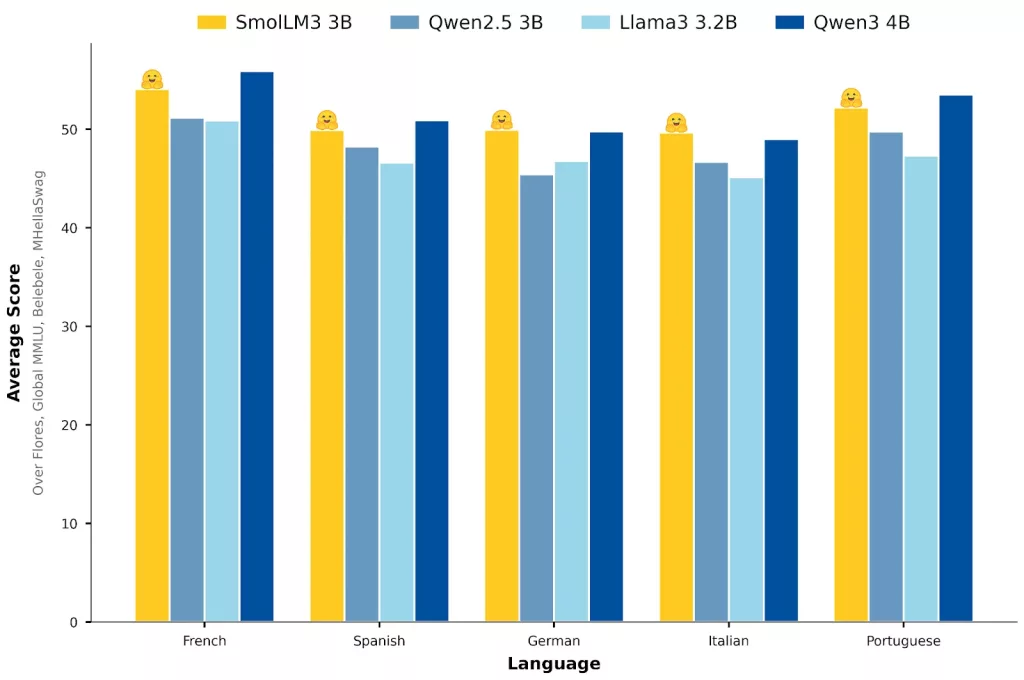

The model excels at mathematical reasoning, achieving remarkable scores on benchmarks like GSM8K and MATH, demonstrating that sophisticated reasoning doesn’t require massive scale. Its multilingual capabilities are equally impressive, with native support for 6 languages and strong performance on cross-lingual benchmarks like Belebele and Flores-200.

Efficiency and Accessibility: As a 3B parameter model, SmolLM3 can run on consumer-grade hardware, including:

- Single consumer GPUs (8GB+ VRAM)

- Apple Silicon devices

- Cloud instances with modest specifications

This accessibility puts advanced AI capabilities within reach of developers, researchers, and small organizations.
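A back-of-the-envelope calculation shows why an 8GB GPU is a plausible fit. The sketch below counts only the memory needed to hold the weights at different precisions; the KV cache, activations, and framework overhead come on top and grow with context length. The function name and the precision table are illustrative.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# 3e9 matches the "3 billion parameters" figure quoted in this article.
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gib(3e9, dtype):.1f} GiB")
```

At 16-bit precision the weights come to roughly 5.6 GiB, which leaves some headroom on an 8GB card for short contexts; quantized variants shrink the footprint further.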

Key Questions & Answers

How does SmolLM3 compare to larger models like GPT-3.5 or Llama?

While SmolLM3 has fewer parameters, it often matches or exceeds the performance of much larger models on specific tasks, particularly in reasoning and coding. Its efficiency makes it ideal for applications where computational resources are limited, and its open-source nature allows for customization that closed models don’t permit.

What makes the “hybrid reasoning” special?

SmolLM3’s hybrid reasoning allows it to switch between fast, direct responses and detailed step-by-step thinking. This flexibility means you can get quick answers for simple queries or detailed reasoning chains for complex problems, all from the same model without additional prompting techniques.

Can SmolLM3 be fine-tuned for specific tasks?

Yes! Being fully open-source under Apache 2.0 license, SmolLM3 can be fine-tuned for specific domains or tasks. The model’s efficient size makes fine-tuning accessible even on limited hardware, and Hugging Face provides comprehensive documentation and tools for customization.

How does the long context feature work?

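SmolLM3 is trained with a 64K context length and can extend to 128K tokens using YaRN (Yet another RoPE extensioN), a method that rescales the rotary position embeddings so the model can handle sequences longer than those seen in training. This allows processing of long documents, extensive conversations, or large codebases while limiting the loss of coherence that usually comes with extrapolating beyond the training length.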
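The extension amounts to a configuration change rather than retraining. The sketch below computes the scaling factor and assembles config overrides in the shape commonly used by Transformers-style `config.json` files. The key names (`rope_scaling`, `type`, `factor`, `original_max_position_embeddings`) are an assumption here and may differ between library versions, so check the model card before applying them.

```python
def yarn_overrides(original_ctx: int, target_ctx: int) -> dict:
    """Build hypothetical config overrides for YaRN context extension.

    The scaling factor is the ratio of target to original context length.
    Key names are assumed, not taken from the SmolLM3 repository.
    """
    if target_ctx < original_ctx:
        raise ValueError("target context must be at least the original context")
    return {
        "max_position_embeddings": target_ctx,
        "rope_scaling": {
            "type": "yarn",
            "factor": target_ctx / original_ctx,
            "original_max_position_embeddings": original_ctx,
        },
    }


# 64K -> 128K, the extension described in this article.
overrides = yarn_overrides(original_ctx=65536, target_ctx=131072)
print(overrides)
```

Going from 64K to 128K gives a scaling factor of 2.0.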

Conclusion

SmolLM3-3B represents a paradigm shift in language model development, proving that bigger isn’t always better. By combining innovative architecture, thoughtful training methodology, and a focus on efficiency, Hugging Face has created a model that democratizes access to advanced AI capabilities without compromising on performance.

Its open-source nature, combined with excellent performance across reasoning, coding, and multilingual tasks, makes SmolLM3 an ideal choice for developers looking to integrate sophisticated AI into their applications without the computational overhead or licensing restrictions of larger models. As the AI community continues to push the boundaries of what’s possible with efficient architectures, SmolLM3 stands as a testament to the power of thoughtful engineering over brute-force scaling.

Ready to get started? Visit the Hugging Face model page, explore the GitHub repository, or try it directly in your applications with just a few lines of code.

How to Run SmolLM3 on Cordatus.ai?

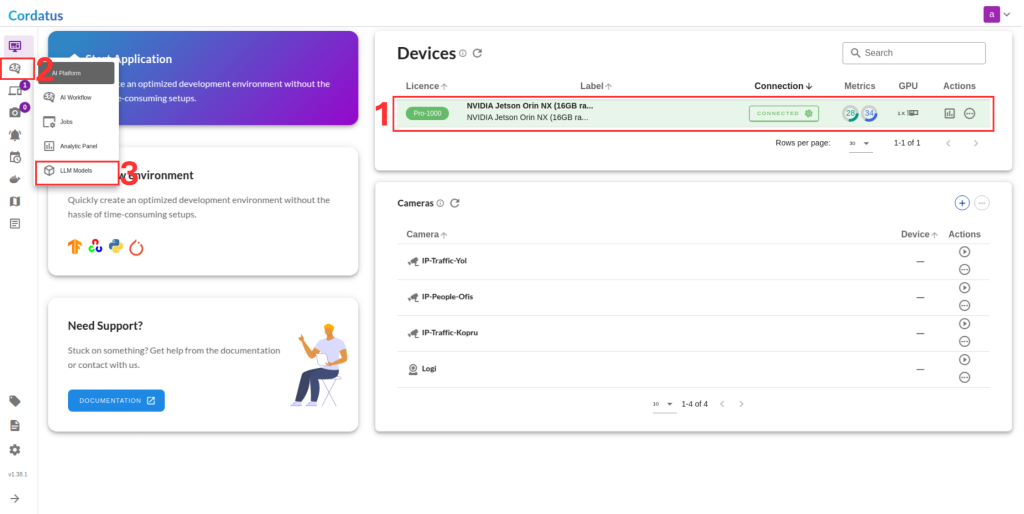

1. Connect to your device and select LLM Models from the sidebar.

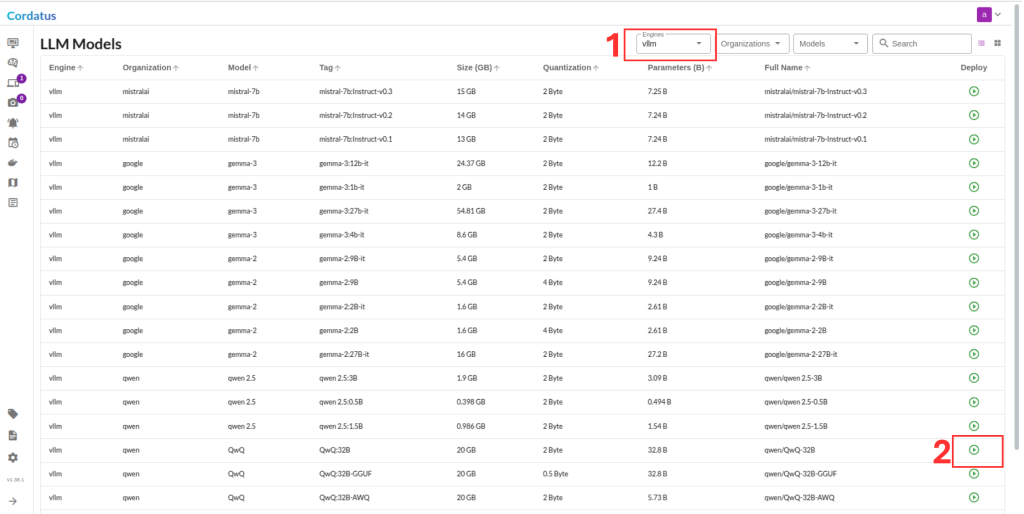

2. Select vLLM from the model selector menu (Box 1), choose your desired model, and click the Run symbol (Box 2).

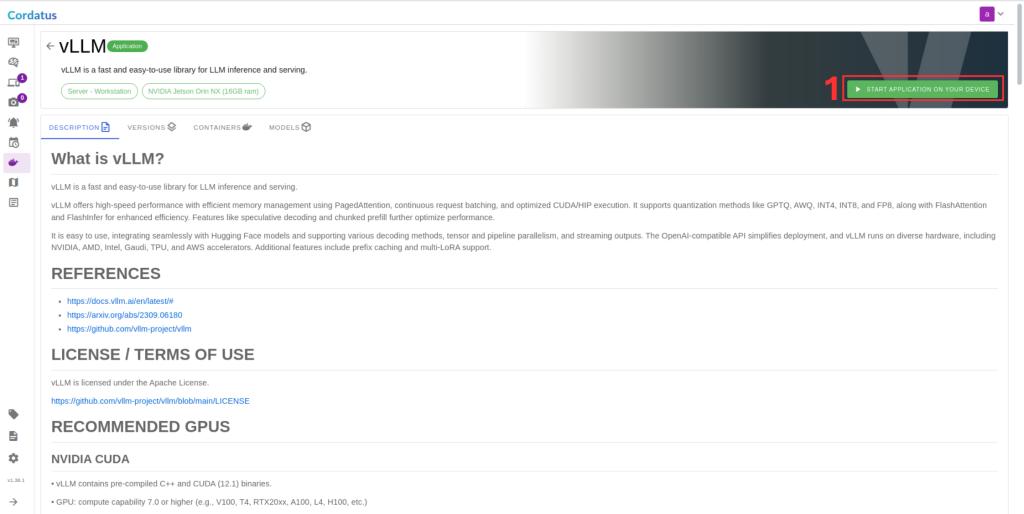

3. Click Run to start the model deployment.

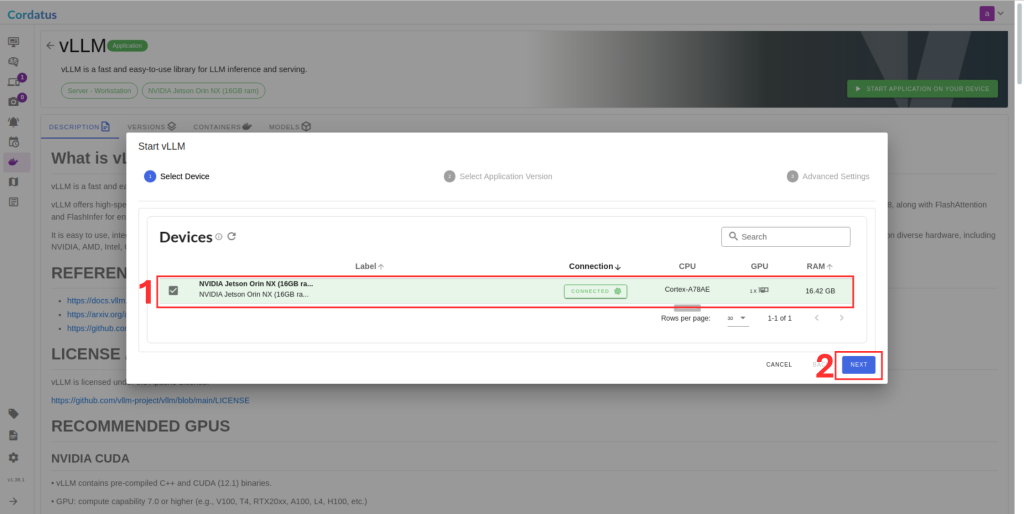

4. Select the target device where the LLM will run.

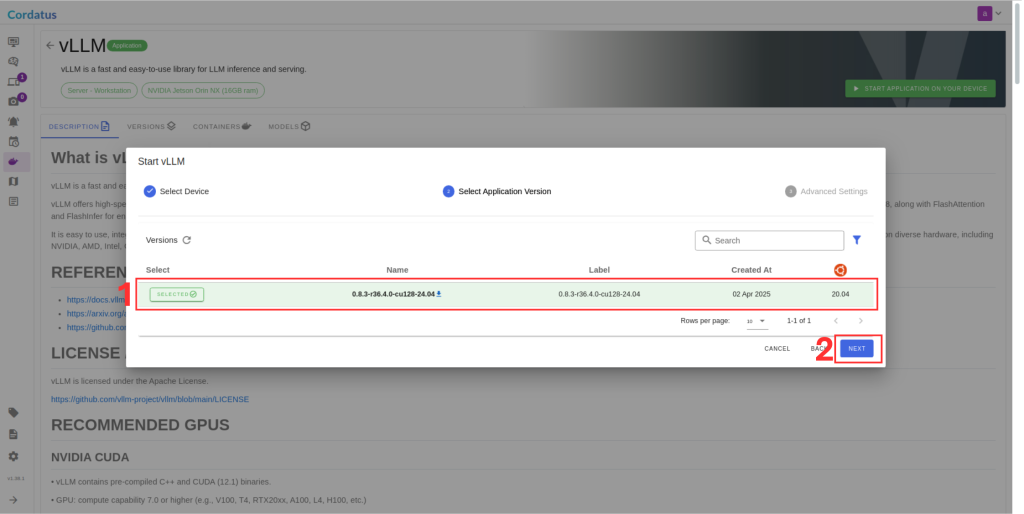

5. Choose the container version (if you are unsure, select the latest).

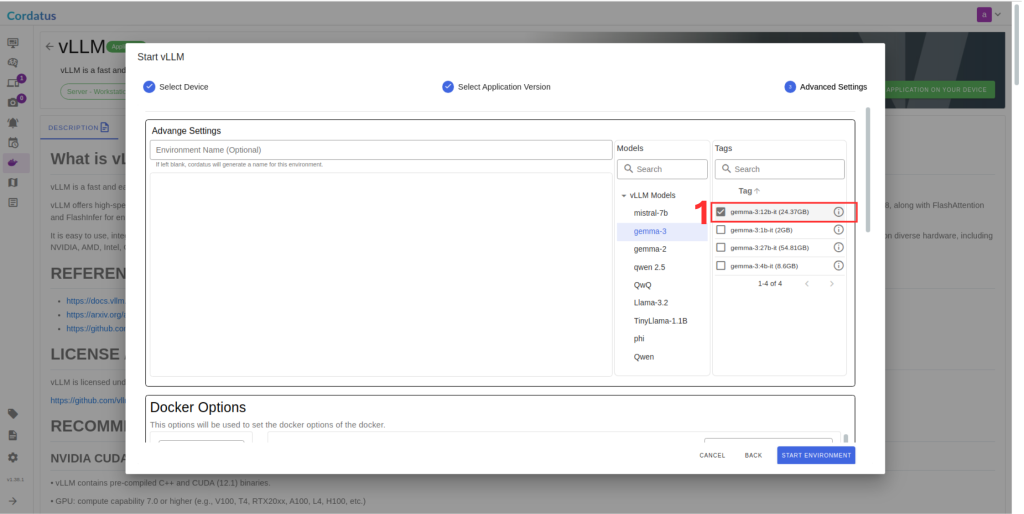

6. Ensure the correct model is selected in Box 1.

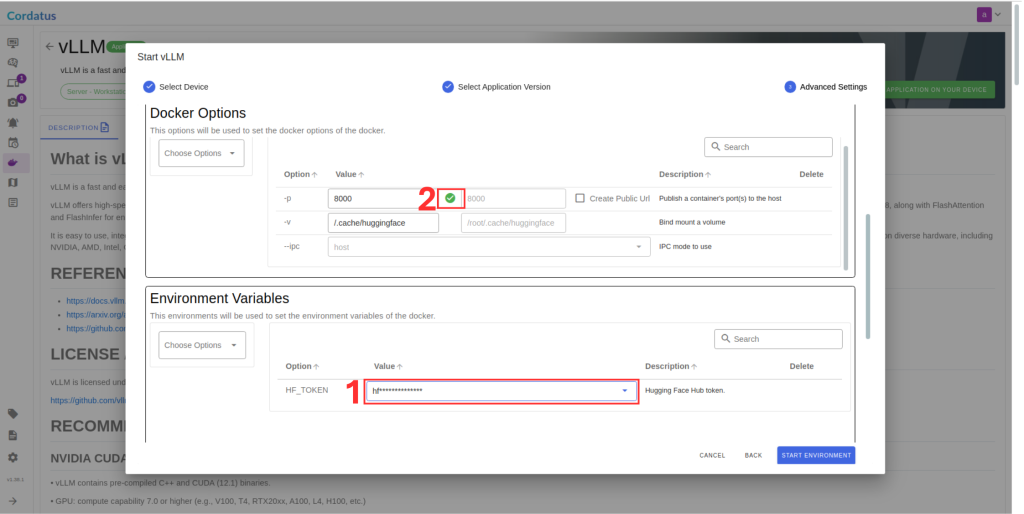

7. Set your Hugging Face token in Box 1 if the model requires one.

8. Click Save Environment to apply the settings.

Once these steps are completed, the model will run automatically, and you can access it through the assigned port.
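Once the model is running, a client can talk to it over HTTP. The sketch below assumes the deployment exposes vLLM's OpenAI-compatible server on the assigned port. The host `localhost`, the port `8000`, and the helper name `build_chat_request` are placeholders, and the model name must match the one you selected in the steps above. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Prepare a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder host and port: replace with the address and port assigned to your deployment.
request = build_chat_request(
    base_url="http://localhost:8000",
    model="HuggingFaceTB/SmolLM3-3B",
    prompt="Summarize grouped query attention in one sentence.",
)
print(request.full_url)

# To actually send it once the server is up:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the endpoint follows the OpenAI request format, existing OpenAI-compatible client libraries should also work by pointing their base URL at the deployment.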