DeepSeek-R1-0528-Qwen3-8B: Think Like a 235B Model

What is the DeepSeek-R1-0528-Qwen3-8B Model?

DeepSeek-R1-0528-Qwen3-8B is an open-source language model that distills the chain-of-thought (CoT) reasoning of DeepSeek-R1-0528 into the compact Qwen3 8B Base. Here, distillation means post-training the smaller model on step-by-step reasoning generated by the much larger DeepSeek-R1-0528, so that an 8B model learns to reason the way its teacher does. The result is a model that excels at logical reasoning, instruction following, and math.
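To make the idea concrete, here is a minimal sketch of how distillation data is often prepared, assuming the common recipe of supervised fine-tuning on teacher outputs. Each prompt is followed by the teacher's reasoning and answer, and the loss is computed only on the teacher's tokens. The token IDs, the helper names `build_example` and `masked_nll`, and the `-100` masking value are illustrative assumptions; this is not DeepSeek's published training code.

```python
from dataclasses import dataclass

# Label value skipped by PyTorch's CrossEntropyLoss by default (ignore_index=-100).
IGNORE_INDEX = -100


@dataclass
class DistillationExample:
    input_ids: list[int]  # prompt tokens followed by teacher tokens
    labels: list[int]     # IGNORE_INDEX over the prompt, teacher tokens elsewhere


def build_example(prompt_ids: list[int], teacher_ids: list[int]) -> DistillationExample:
    """Pair a prompt with the teacher's chain-of-thought + answer tokens.

    Only the teacher's tokens carry labels, so the student is trained to
    reproduce the teacher's reasoning rather than to predict the prompt."""
    input_ids = prompt_ids + teacher_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + teacher_ids
    return DistillationExample(input_ids, labels)


def masked_nll(token_logprobs: list[float], labels: list[int]) -> float:
    """Mean negative log-likelihood over labelled (unmasked) positions.

    token_logprobs[i] is the student's log-probability of labels[i],
    assumed to be already aligned for next-token prediction."""
    kept = [-lp for lp, lab in zip(token_logprobs, labels) if lab != IGNORE_INDEX]
    return sum(kept) / len(kept) if kept else 0.0


if __name__ == "__main__":
    # Hypothetical token IDs: 2 prompt tokens, 3 teacher tokens.
    ex = build_example([101, 102], [7, 8, 9])
    print(ex.labels)  # [-100, -100, 7, 8, 9]
    # Prompt positions are ignored, so only -0.5, -1.0, -1.5 count.
    print(masked_nll([-2.0, -2.0, -0.5, -1.0, -1.5], ex.labels))  # 1.0
```

Masking the prompt keeps the training signal focused on the teacher's step-by-step reasoning, which is the behavior the student is meant to inherit.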

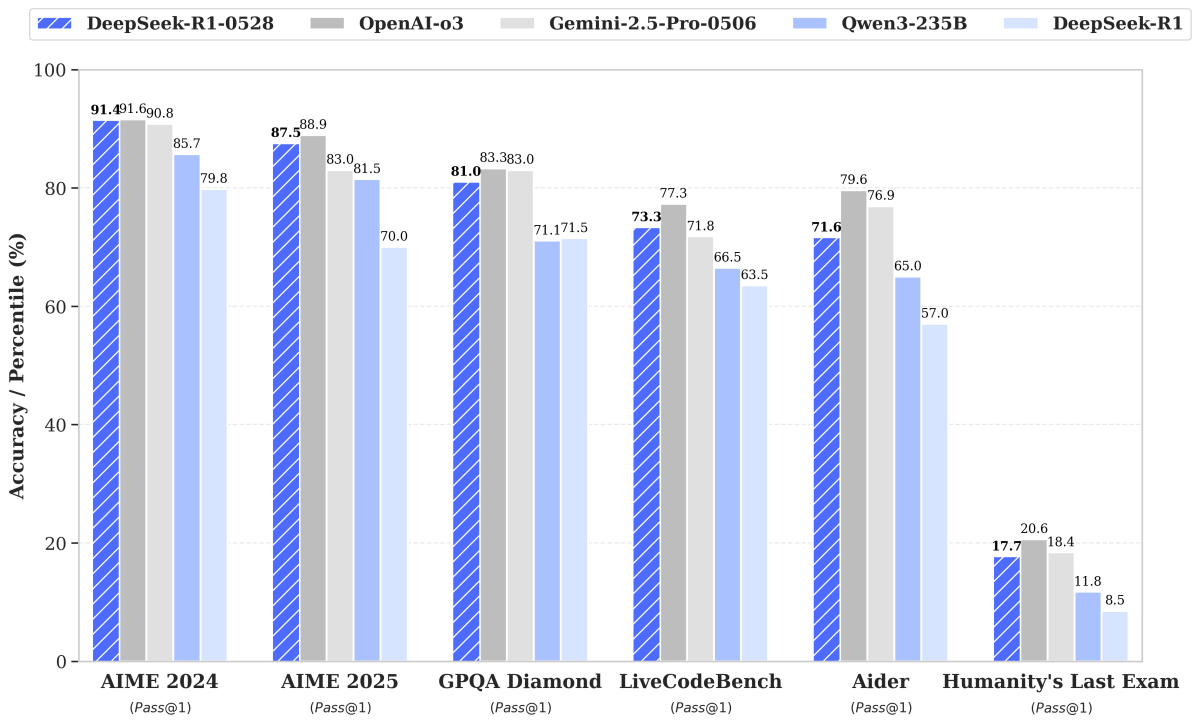

On the AIME 2024 benchmark, the model achieves state-of-the-art (SOTA) performance among open-source models, surpassing the standard Qwen3 8B by an impressive 10.0 percentage points in accuracy.

What makes it truly remarkable is that, on the same benchmark, it performs on par with Qwen3-235B-thinking, a model with nearly 30 times as many parameters. This makes DeepSeek-R1-0528-Qwen3-8B an outstanding choice for developers and researchers who need strong reasoning in a lightweight, cost-effective form, especially when large-scale hardware isn’t an option.
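The hardware gap behind that comparison is easy to estimate. The sketch below computes the memory needed just to hold the weights, assuming nominal parameter counts of 8B and 235B and 2 bytes per parameter in BF16 (1 byte for 8-bit and 0.5 bytes for 4-bit quantization). It ignores the KV cache, activations, and framework overhead, so real requirements are higher.

```python
# Bytes needed to store one parameter at a given precision.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory in GB (1 GB = 1e9 bytes) to hold the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter counts (assumption: the exact counts differ slightly).
MODELS = {
    "DeepSeek-R1-0528-Qwen3-8B": 8e9,
    "Qwen3-235B-thinking": 235e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name:<28} {sizes}")
    ratio = MODELS["Qwen3-235B-thinking"] / MODELS["DeepSeek-R1-0528-Qwen3-8B"]
    print(f"Size ratio: {ratio:.1f}x")  # Size ratio: 29.4x
```

In BF16 that works out to roughly 16 GB versus 470 GB of weights: the 8B model's weights fit on a single 24 GB GPU, while the 235B model needs a multi-GPU setup.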

What Makes DeepSeek-R1-0528-Qwen3-8B Stand Out? Why Should You Use It?

Distilled Chain-of-Thought Reasoning: Trained on chain-of-thought output from DeepSeek-R1-0528, so it inherits the larger model's step-by-step reasoning in a far smaller package.

Benchmark Leader: Achieves state-of-the-art results among open-source models on AIME 2024, beating larger models with far fewer parameters.

Compact Yet Competitive: Matches the reasoning performance of Qwen3-235B-thinking on AIME 2024 with just 8B parameters.

Efficient for Real Use: Well suited to research, math reasoning, educational technology, and other logic-intensive applications.

Open-Source Freedom: Available for community use, fine-tuning, and deployment in private or academic settings.
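Because the model writes out its reasoning before answering, applications usually need to separate the two. Here is a minimal sketch, assuming the output follows the DeepSeek-R1 convention of ending the reasoning with a `</think>` tag; the opening `<think>` may already be part of the prompt template, so it is treated as optional. The function name `split_reasoning` is hypothetical.

```python
OPEN_TAG = "<think>"
CLOSE_TAG = "</think>"


def split_reasoning(text: str) -> tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    Assumes the chain of thought ends at the first CLOSE_TAG. The opening
    tag is optional because some chat templates place it in the prompt."""
    head, sep, tail = text.partition(CLOSE_TAG)
    if sep:
        # Drop a leading opening tag, if present, from the reasoning part.
        reasoning = head.strip()
        if reasoning.startswith(OPEN_TAG):
            reasoning = reasoning[len(OPEN_TAG):].strip()
        return reasoning, tail.strip()
    stripped = text.strip()
    if stripped.startswith(OPEN_TAG):
        # Reasoning started but never closed (e.g. hit the token limit): no answer yet.
        return stripped[len(OPEN_TAG):].strip(), ""
    # No reasoning markers at all: treat everything as the answer.
    return "", stripped


if __name__ == "__main__":
    raw = "<think>\n12 * 12 = 144, minus 4 is 140.\n</think>\n\nThe answer is 140."
    reasoning, answer = split_reasoning(raw)
    print(answer)  # The answer is 140.
```

Keeping the reasoning separate lets you show users only the final answer while logging the full chain of thought for debugging or evaluation.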

With DeepSeek-R1-0528-Qwen3-8B, you get elite reasoning quality in an efficient, open package, perfect for serious thinkers on a budget.